All Topics

-

The Puzzler: A Tectonic Interactive Music System

An IMS inspired by lessons in architecture

-

TechnoBike

A pedal-powered interactive music system

-

The SlapBox: A DMI designed to last

A critical review of a durable digital musical instrument

-

Review of Sounding Brush: a graphic score IMS?

A critical review of a drawing-based interactive music system

-

KineMapper

A Max for Live device that maps motion data from a smartphone to controls in Ableton Live.

-

Playlist Data and Recommendations Using Artificial Neural Networks

A machine-learning algorithm that pairs independent artists with the curated playlists that best fit them, based on musical attributes.

-

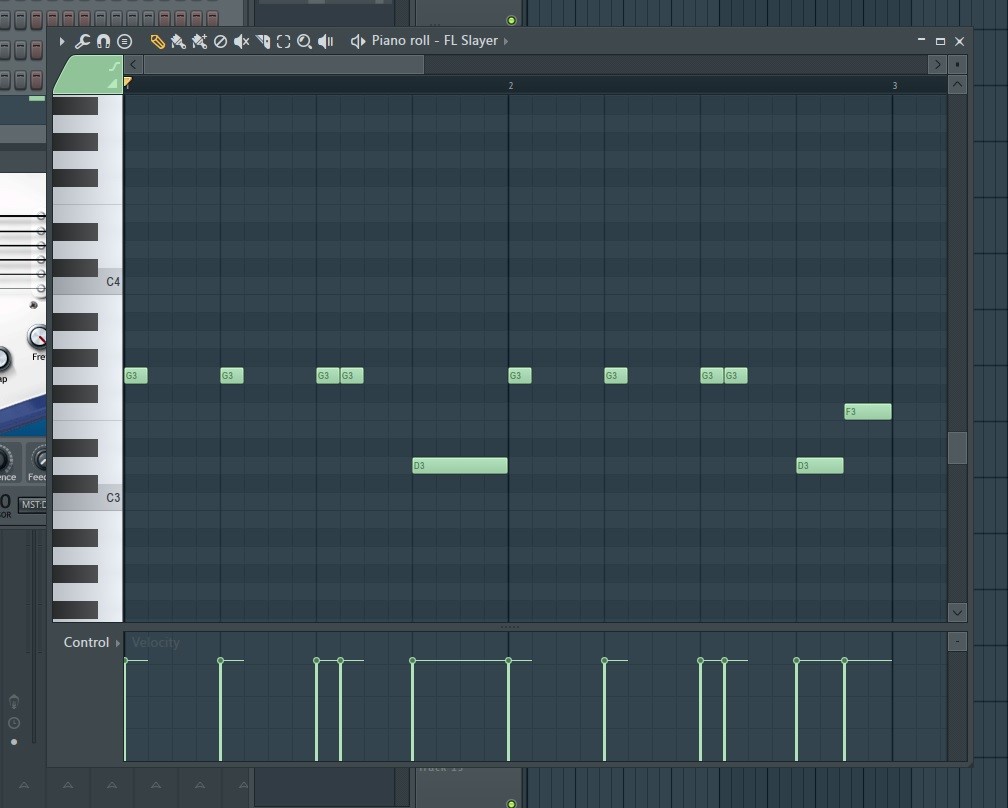

AcidLab: deep acid bass-line generation

Using machine learning to generate acid bass-line melodies with user-created datasets.

-

Connecting Eigenrhythms and Human movement

Connecting machine learning and human understanding of rhythm.

-

The Chiptransformer

The Chiptransformer is my attempt at building a machine learning model that uses the transformer architecture to generate music from a dataset of Nintendo NES music.

-

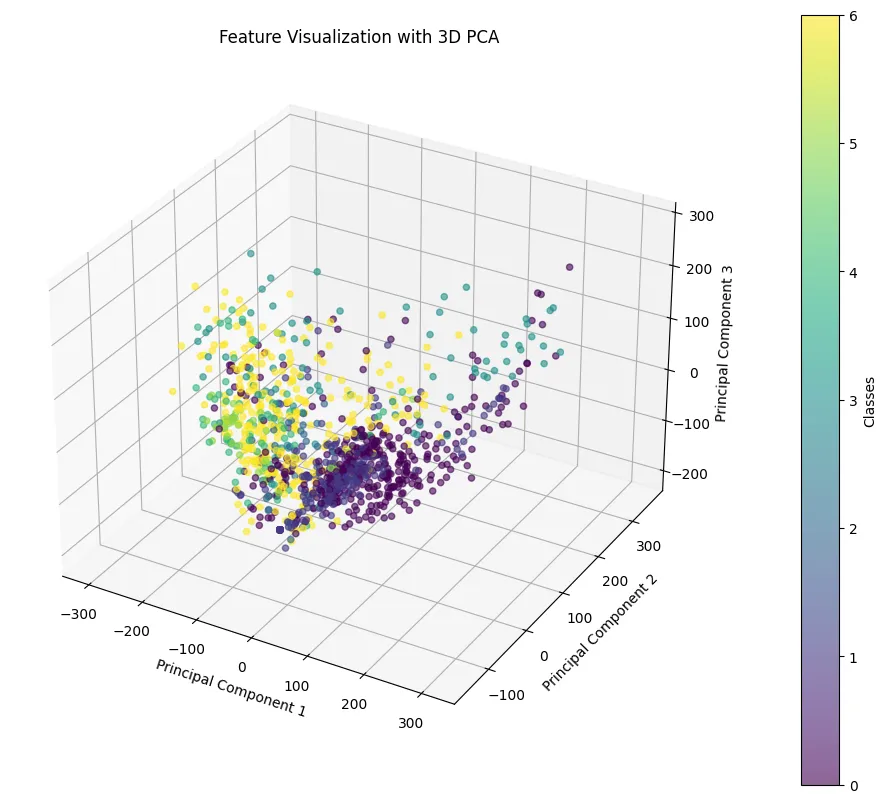

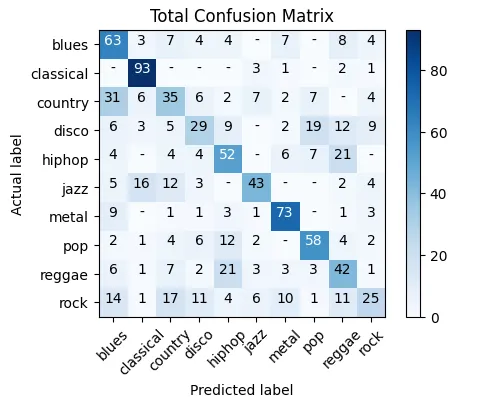

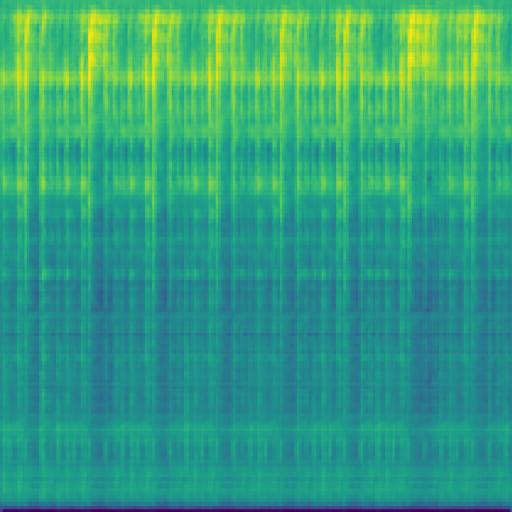

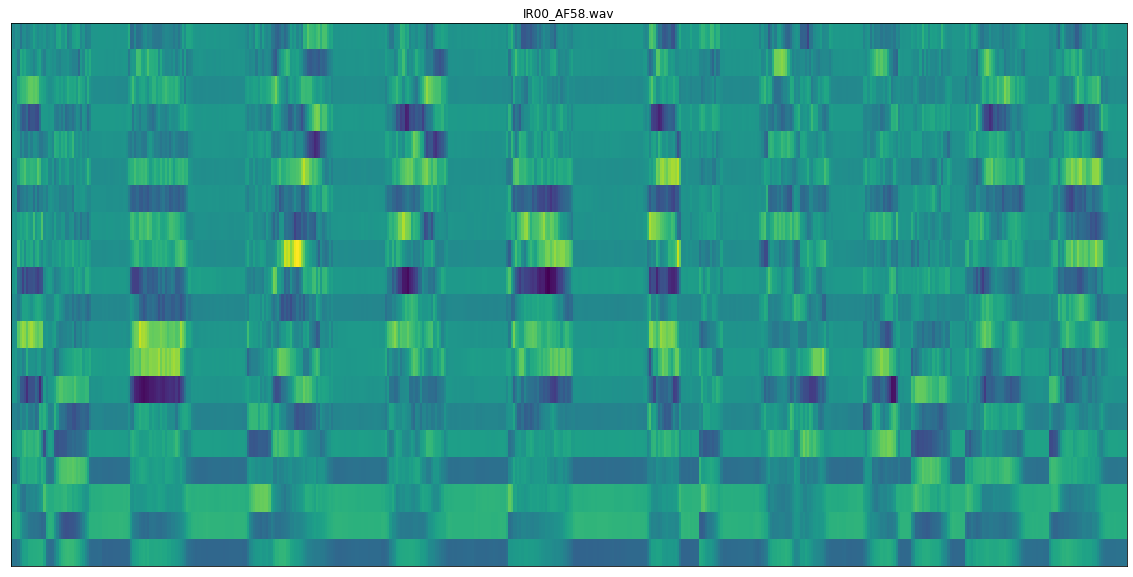

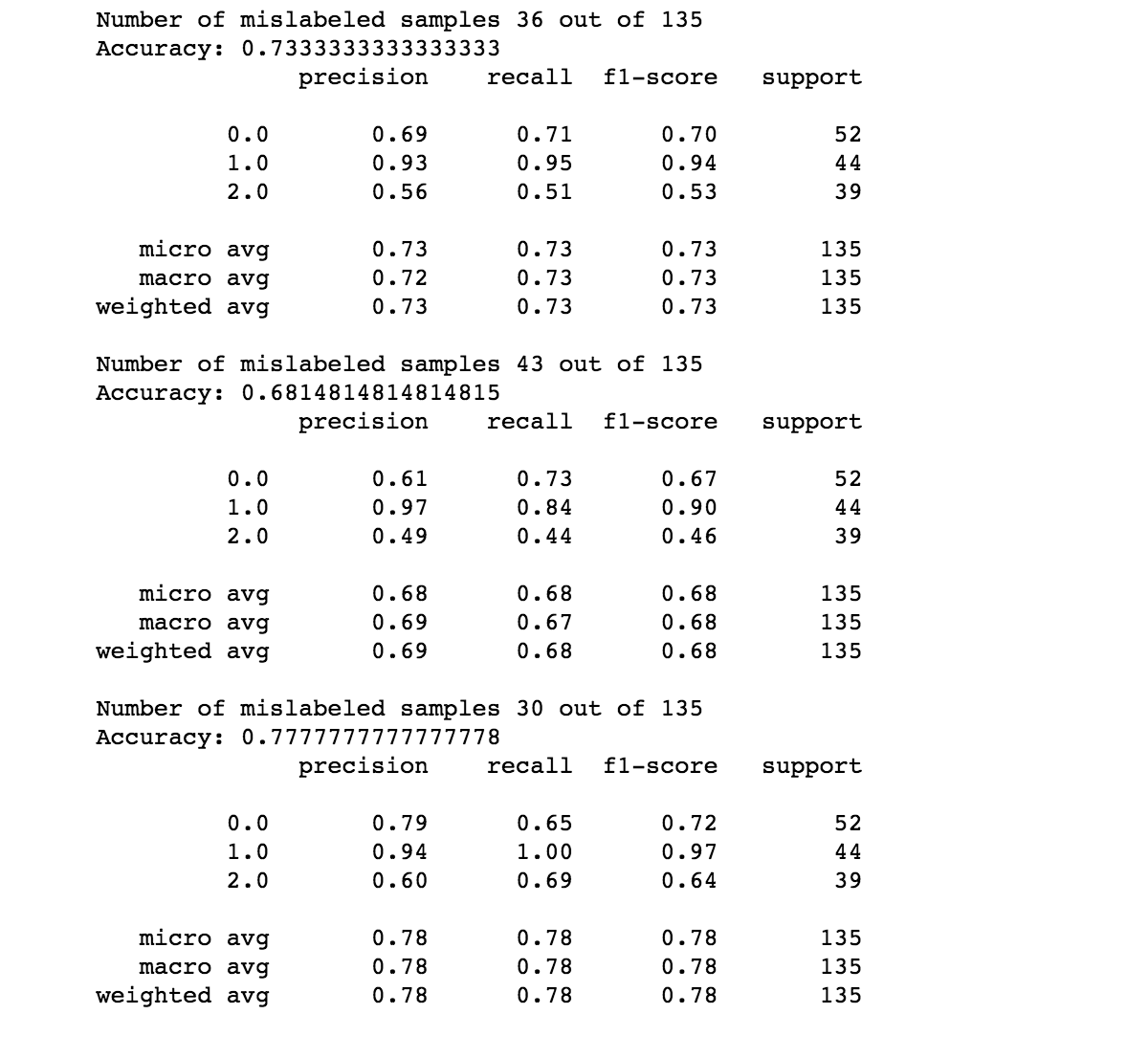

Using convolutional neural networks to classify music genres

When classifying genres in music, CNNs are a popular choice because of their ability to capture intricate patterns in data.

-

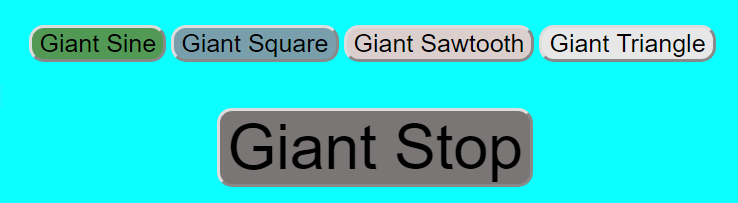

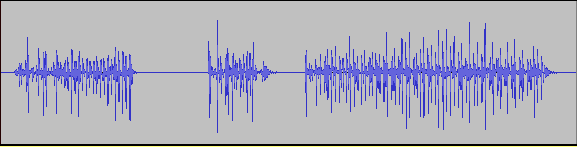

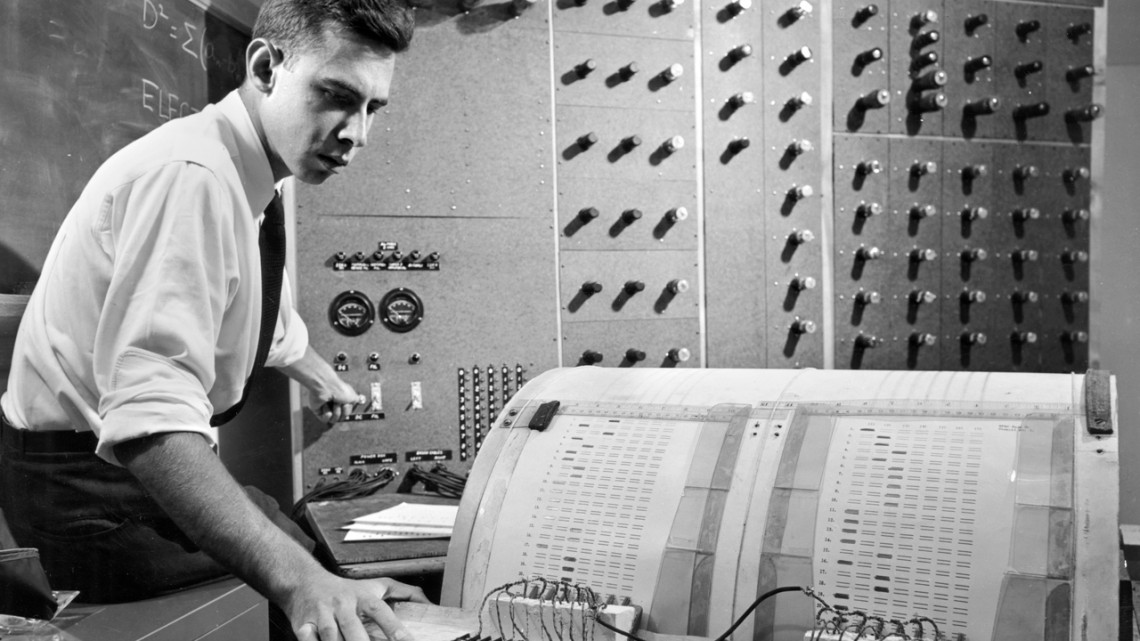

Digital Signal Processing Basics

Virtually any song you can listen to on the radio has examples of Digital Signal Processing.

-

Introduction to Open Sound Control (OSC)

This post contains a brief overview of OSC and a tutorial on how to make a connection and send data between devices.

-

Formatting WebPD Projects: An Introduction to WebPD, HTML and CSS Styling

Styling your WebPD application can lead to a better user experience and greater accessibility.

-

We Are Sitting In Rooms

Recreating the most famous piece by composer Alvin Lucier as a network music performance

-

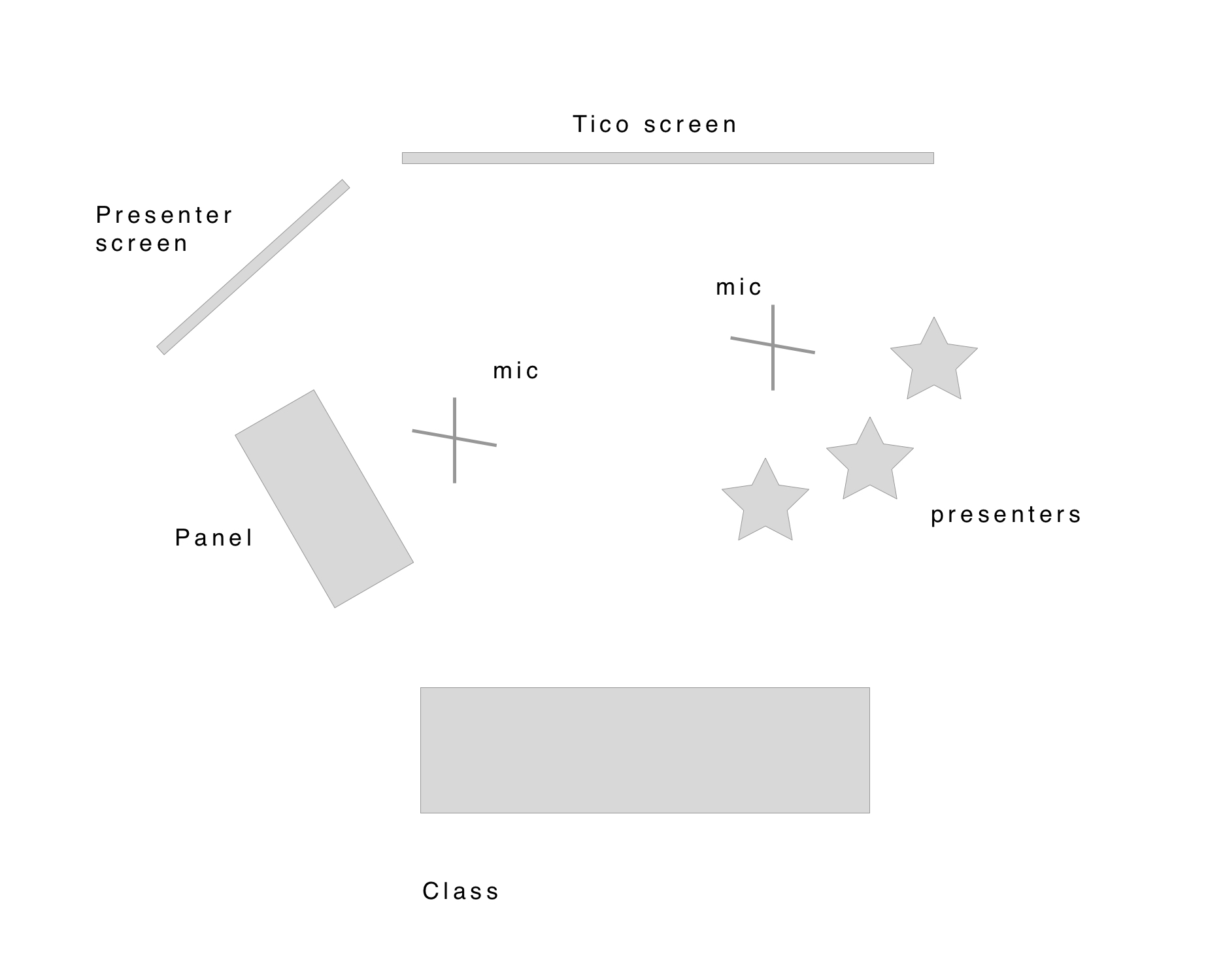

Documenting Networked Music Performances: Tips, Tricks and Best Practices

Effectively documenting networked music performances can lead to better experiences for physical and digital audiences, and can benefit your academic explorations.

-

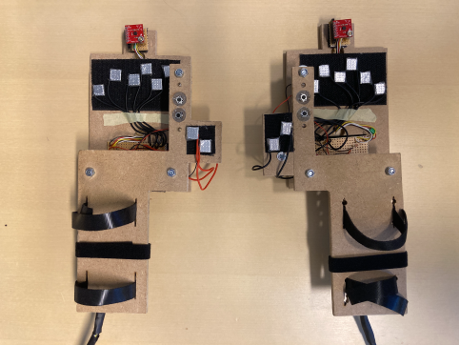

Xyborg 2.0: A Data Glove-based Synthesizer

Learn about my adventures in designing and playing a wearable instrument.

-

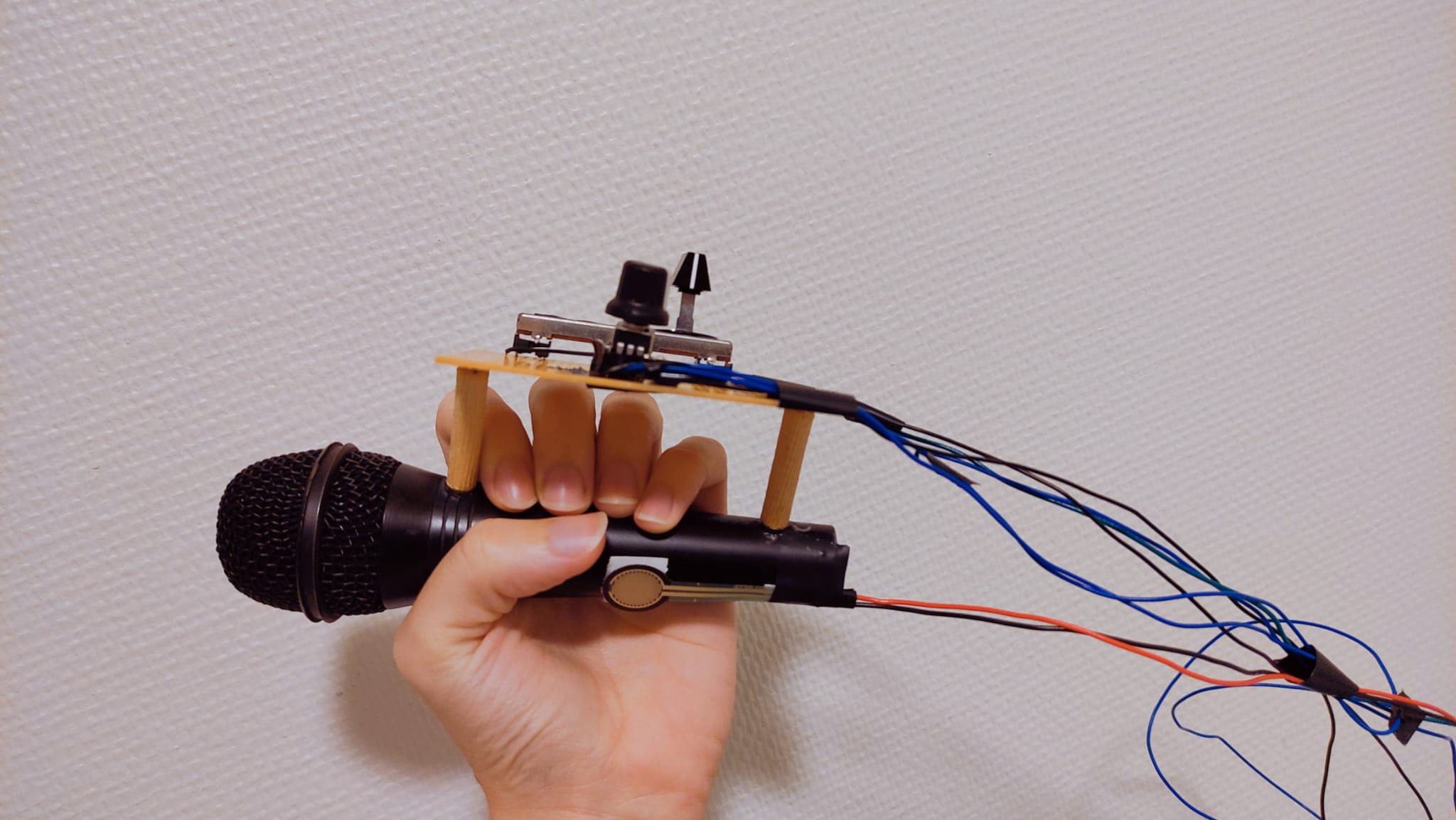

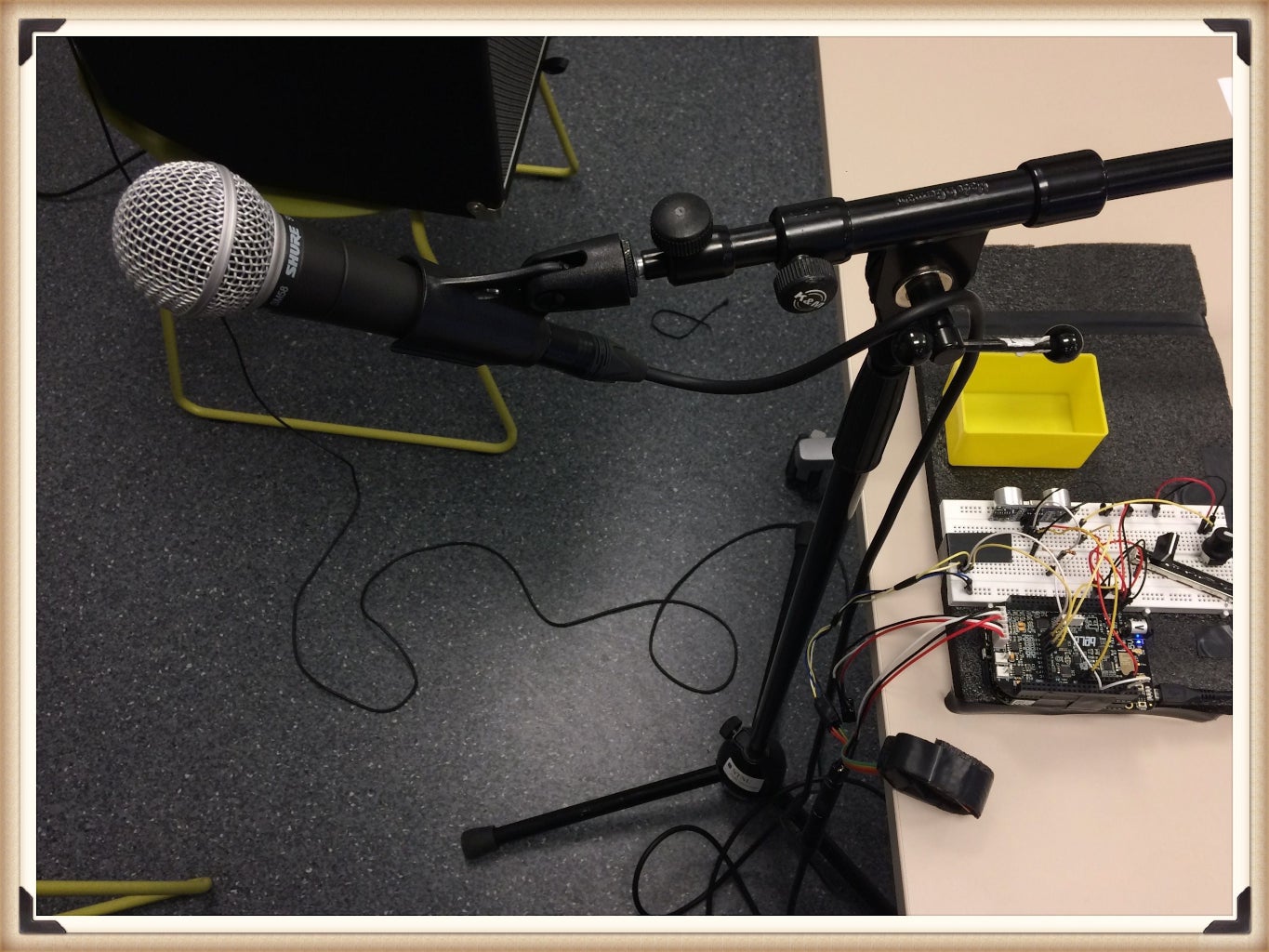

Voice/Bend

A gestural microphone controller

-

The Hyper-Ney

Electrifying an ancient flute using capacitive and motion sensors

-

An Interactive Evening on Karl Johans Gate

What if everyday objects decide to kick it up a notch and embrace a life of their own?

-

CordChord - controlling a digital string instrument with distance sensing and machine learning

How can we use sensors to control a digital string instrument? Here's one idea.

-

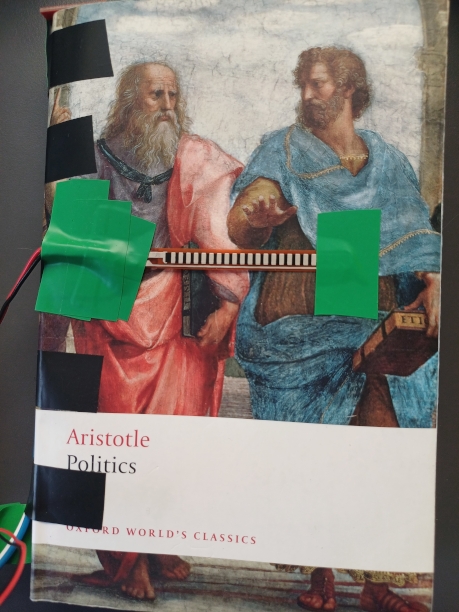

The Paperback Singer

An interactive granular audio book

-

Touch/Tap/Blow - Exploring Intimate Control for Musical Expression

Touch/Tap/Blow is, as its name suggests, an interactive music system that aims to combine three forms of intimate control over a digital musical instrument. Notes and chords can be played via the touch interface, while bass accompaniment can be driven by the player’s foot tapping. Below are the details of its main elements.

-

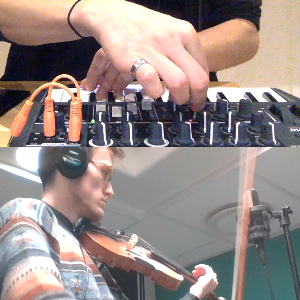

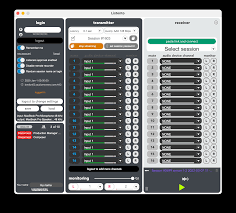

Sync your synths and jam over a network using Sonobus

A quick start guide to jamming over a network. Designed for instruments which can synchronize using an analog clock pulse.

-

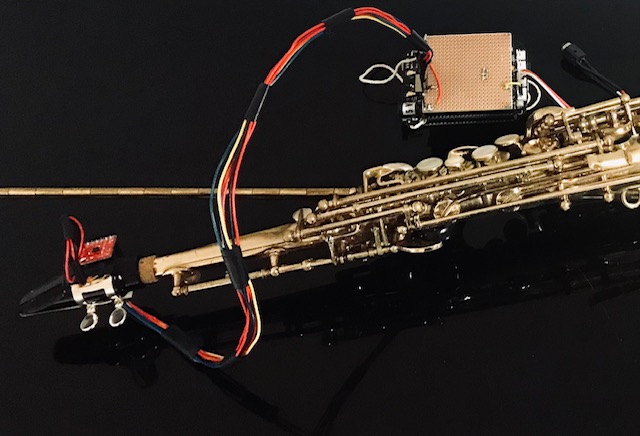

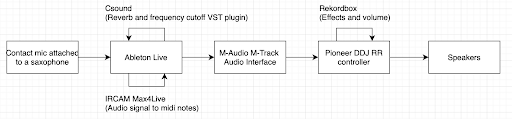

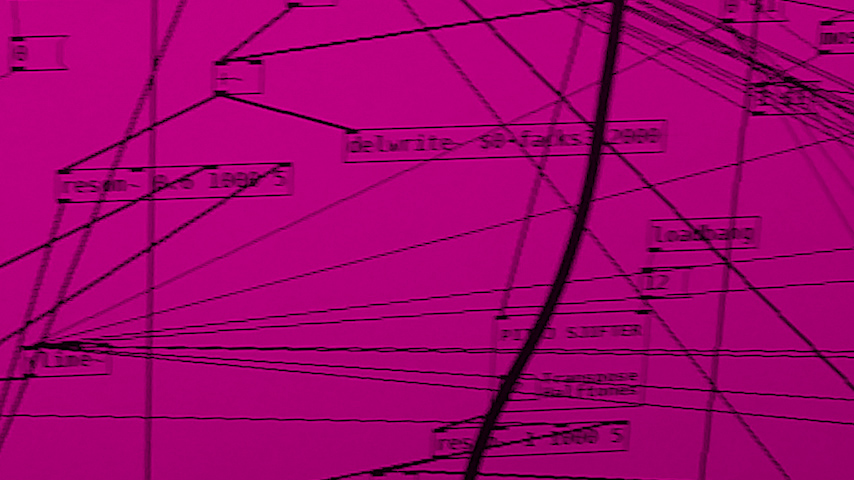

The Saxelerophone: Demonstrating gestural virtuosity

A hyper-instrument tracking data from a 3-axis accelerometer and a contact microphone to create new interactive sounds for the saxophone.

-

A Critical Look at Cléo Palacio-Quintin’s Hyper-Flute

A Boehm flute enhanced with sensors

-

The Daïs: Critical Review of a Haptically Enabled NIME

Is this a violin?

-

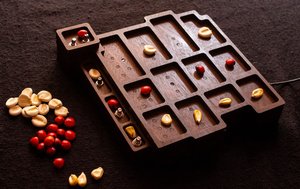

Shadows As Sounds

A 4-step sequencer using seeds and corn

-

The Augmented Violin: Examining Musical Expression in a Bow-Controlled Hyper-Instrument

A brief look at the affordance for musical expression in a violin-based interactive music system.

-

The Tickle Tactile Controller - Review

Like many digital instruments I have come across, the instrument design takes its initial inspiration from the piano, a fixed-key instrument.

-

Review of On Board Call: A Gestural Wildlife Imitation Machine

Critical Review of On Board Call: A Gestural Wildlife Imitation Machine

-

How to break out of the comping loop?

A critical review of the Reflexive Looper.

-

Exploring Breath-based DMIs: A Review of the KeyWI

The relationship between musician and instrument can be an extremely personal and intimate one

-

Controlling Guitar Signals Using a Pick?

A deeper dive into the Magpick

-

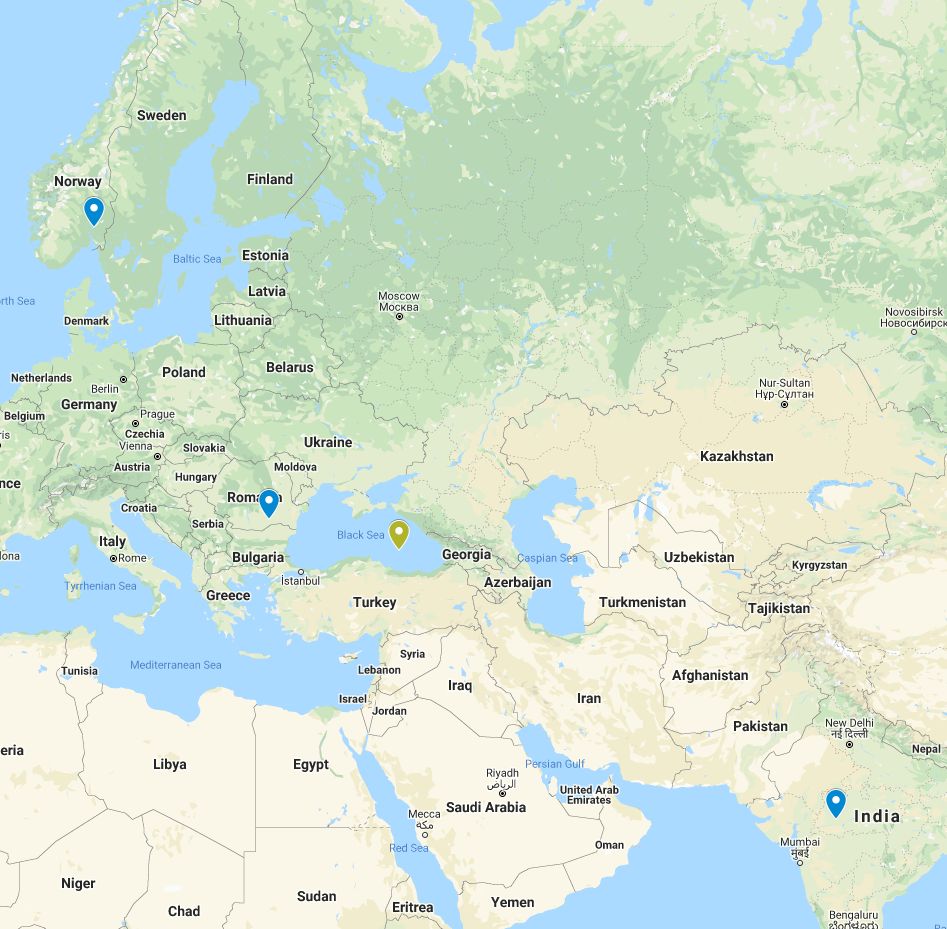

Music Between Salen and the World

We played in a global NMP concert. Check out our experiences.

-

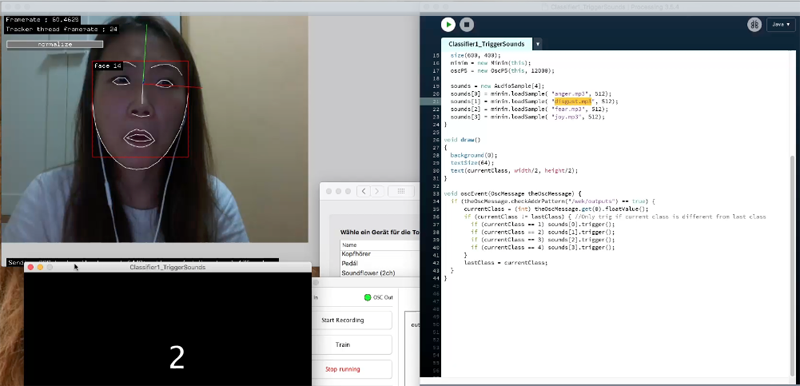

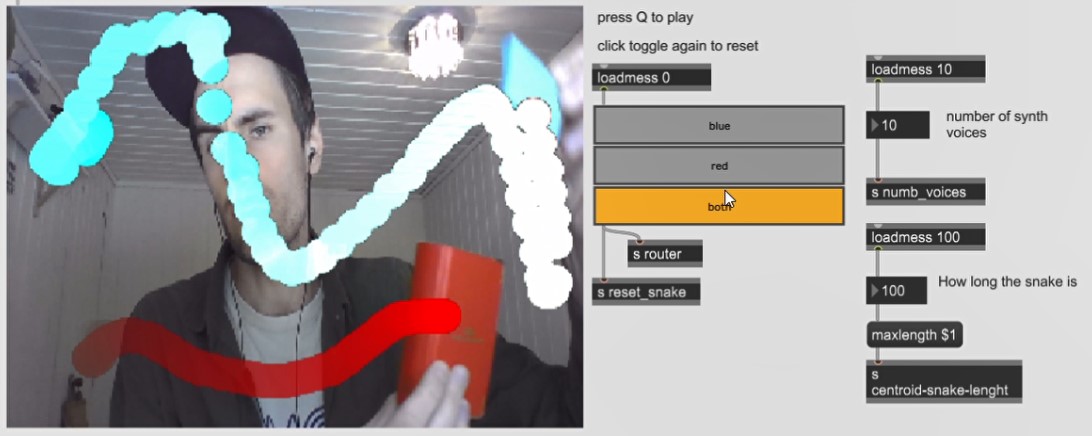

A Body Instrument: Exploring the Intersection of Voice and Motion

Manipulate your voice with your body

-

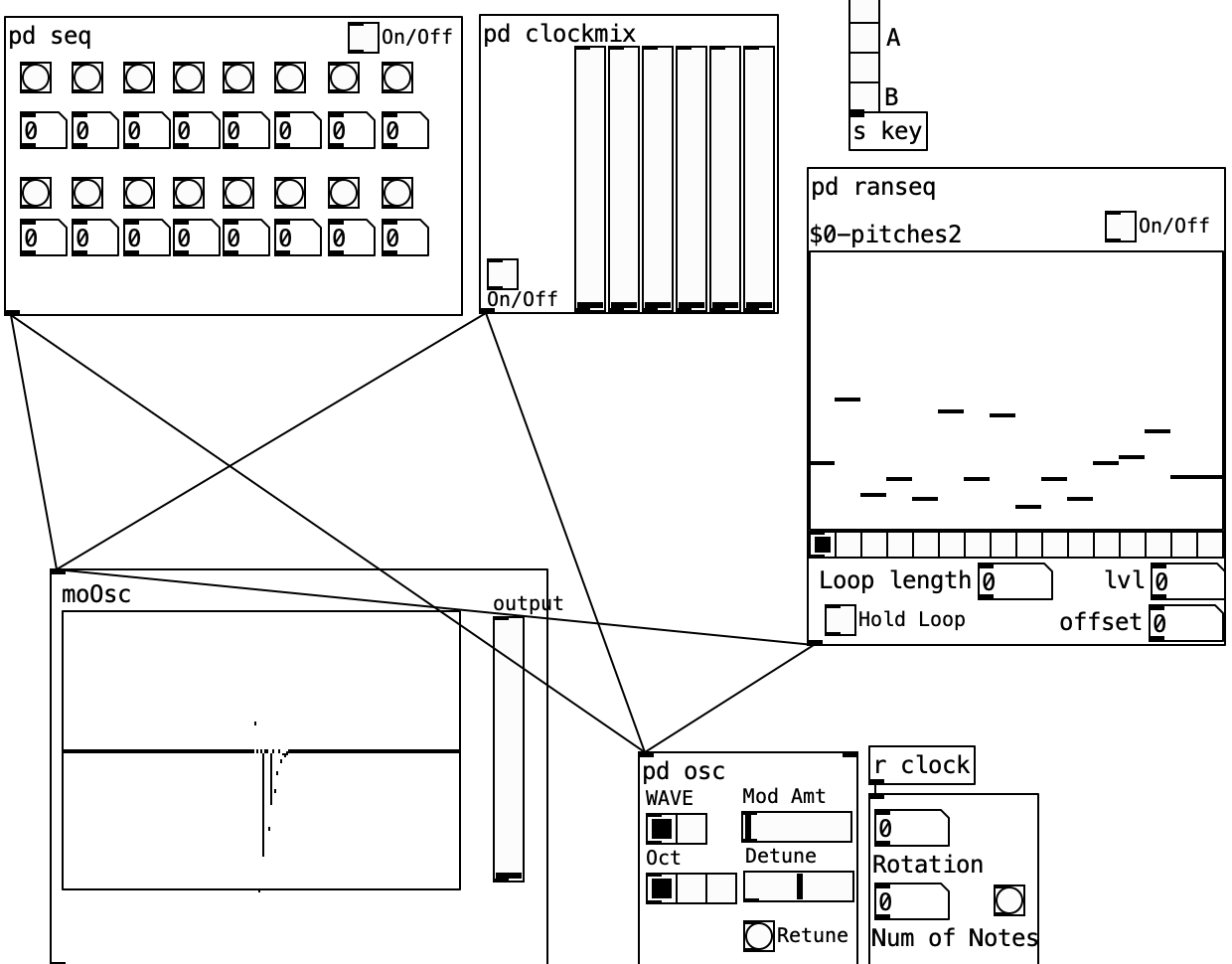

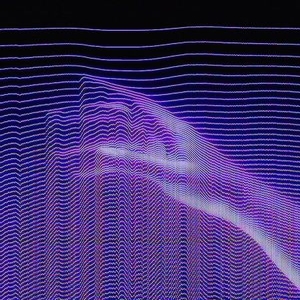

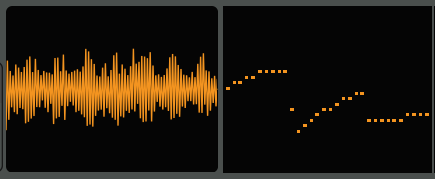

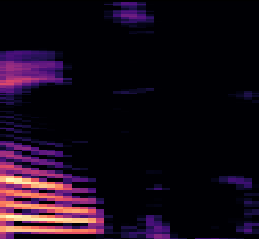

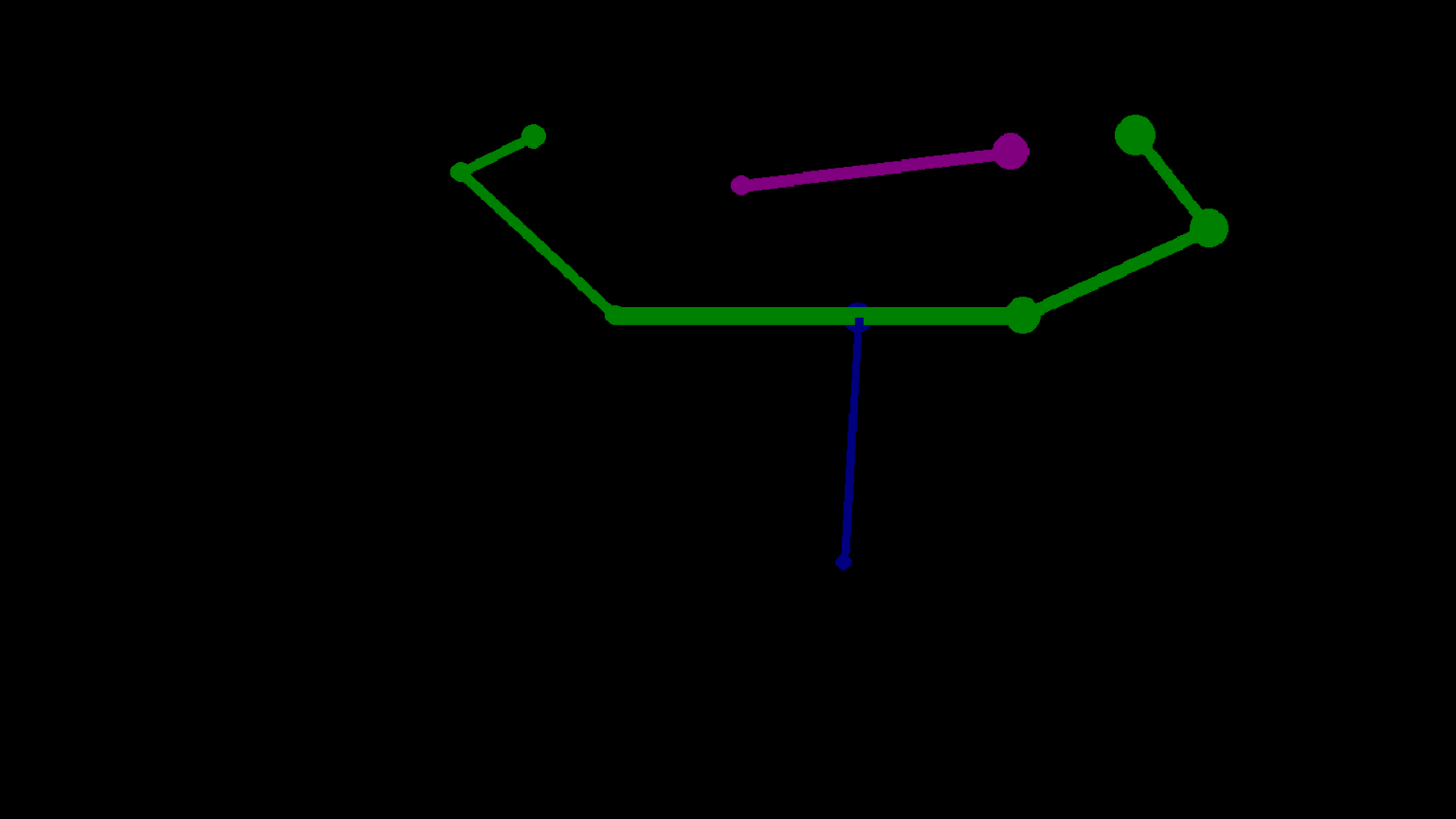

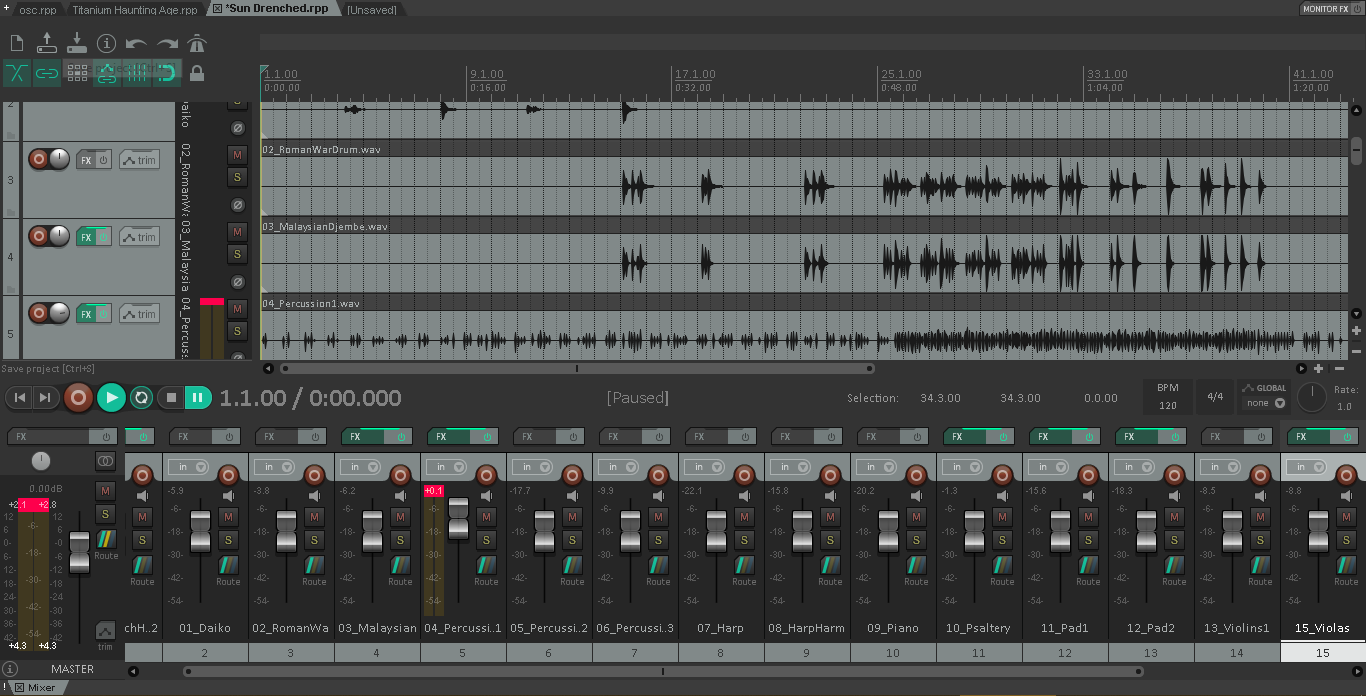

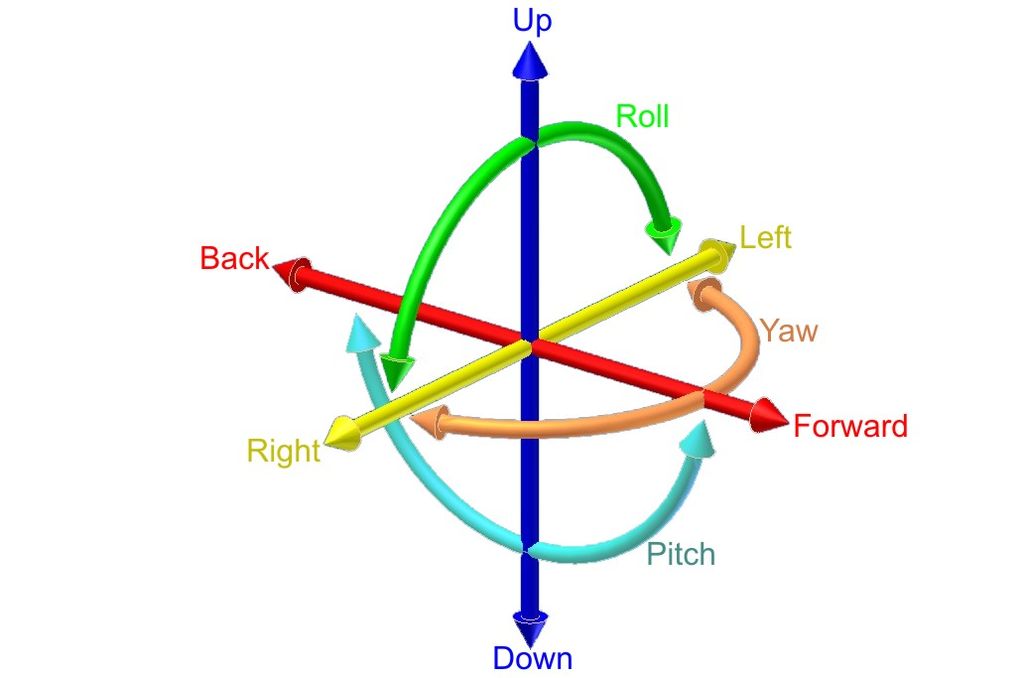

Generative Music with IMU data

Eight routes to meta-control

-

Simple yet unique way of playing music

Gestures can be more intuitive to play around with

-

Xyborg: Wearable Control Interface and Motion Capture System for Manipulating Sound

Witness my transition from human to machine - with piezo discs

-

Motion Controlled Sampler in Ableton

A fun and creative way of sampling

-

Scream Machine: Voice Conversion with an Artificial Neural Network

Using a VAE to transform one voice to another.

-

Generating music with an evolutionary algorithm

Looking at a theoretical and general implementation of an evolutionary algorithm to generate music.

-

Persian classical instruments recognition and classification

This blog post will go over various feature extraction techniques used to identify Persian classical music instruments.

-

Isn't Bach deep enough?

Deep Bach is an artificial intelligence that composes like Bach.

-

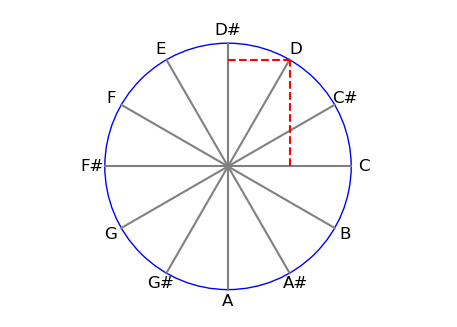

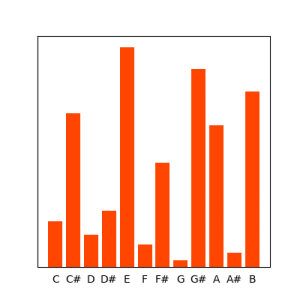

Recognizing Key Signatures with Machine Learning

The first rule of machine learning? Understand your data! A look into how music theory came to my rescue for classifying key signatures.

-

Breakbeat Science

AI-generated Amen breakbeats

-

Spotlight: AutoEncoders and Variational AutoEncoders

A simple generative algorithm

-

Music AI, a brief history

Chronicling the field of AI-generated music's start, where it went from there, and what you can expect from musical AI right now.

-

A caveman's way of making art

...and art in the age of complexity.

-

Clustering audio features

Music information retrieval (MIR)

-

What's wrong with singing voice synthesis

Dead can sing

-

The whistle of the autoencoder

How I used autoencoders to create whistling.

-

Chroma Representations of MIDI for Chord Generation

Understanding two ways of representing and generating chords in machine learning.

-

Generating Video Game SFX with AI

A first look at text-to-audio sound effect generation for video games.

-

PyTorch GPU Setup Guide

Having trouble getting PyTorch to recognize your GPU? Try this!

-

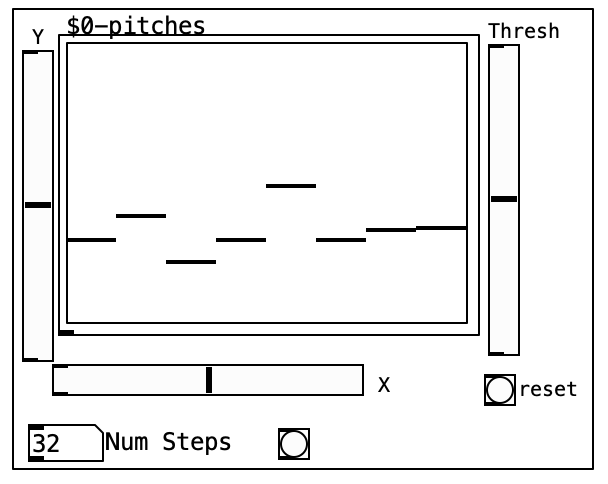

A Rhythmic Sequencer Driven by a Stacked Autoencoder

Sometimes you need to leave room for the musician

-

Comparing MIDI Representations: The Battle Between Efficiency and Complexity

A comparison of different MIDI representations for generative machine learning

-

Programming with OpenAI

How can OpenAI solutions help us program?

-

Challenges with MIDI

Is it easy to create chord progressions from MIDI files?

-

Basics of Computer Networks in NMPs

A crash course on the computer networks used in network music performances.

-

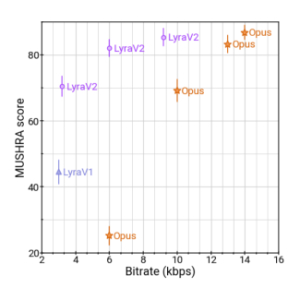

Audio Codecs and the AI Revolution

I dove headfirst into the world of machine learning-enhanced audio codecs. Here's what I found out.

-

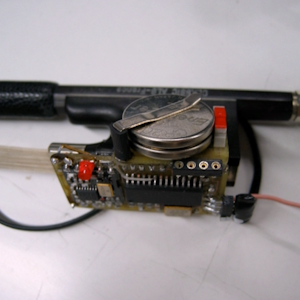

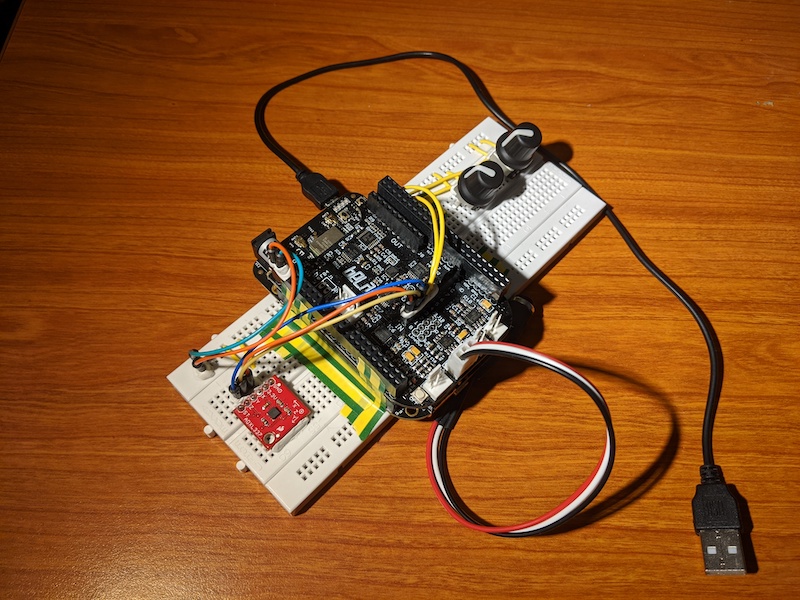

NMP kit Tutorial

A practical tutorial on the NMP kits.

-

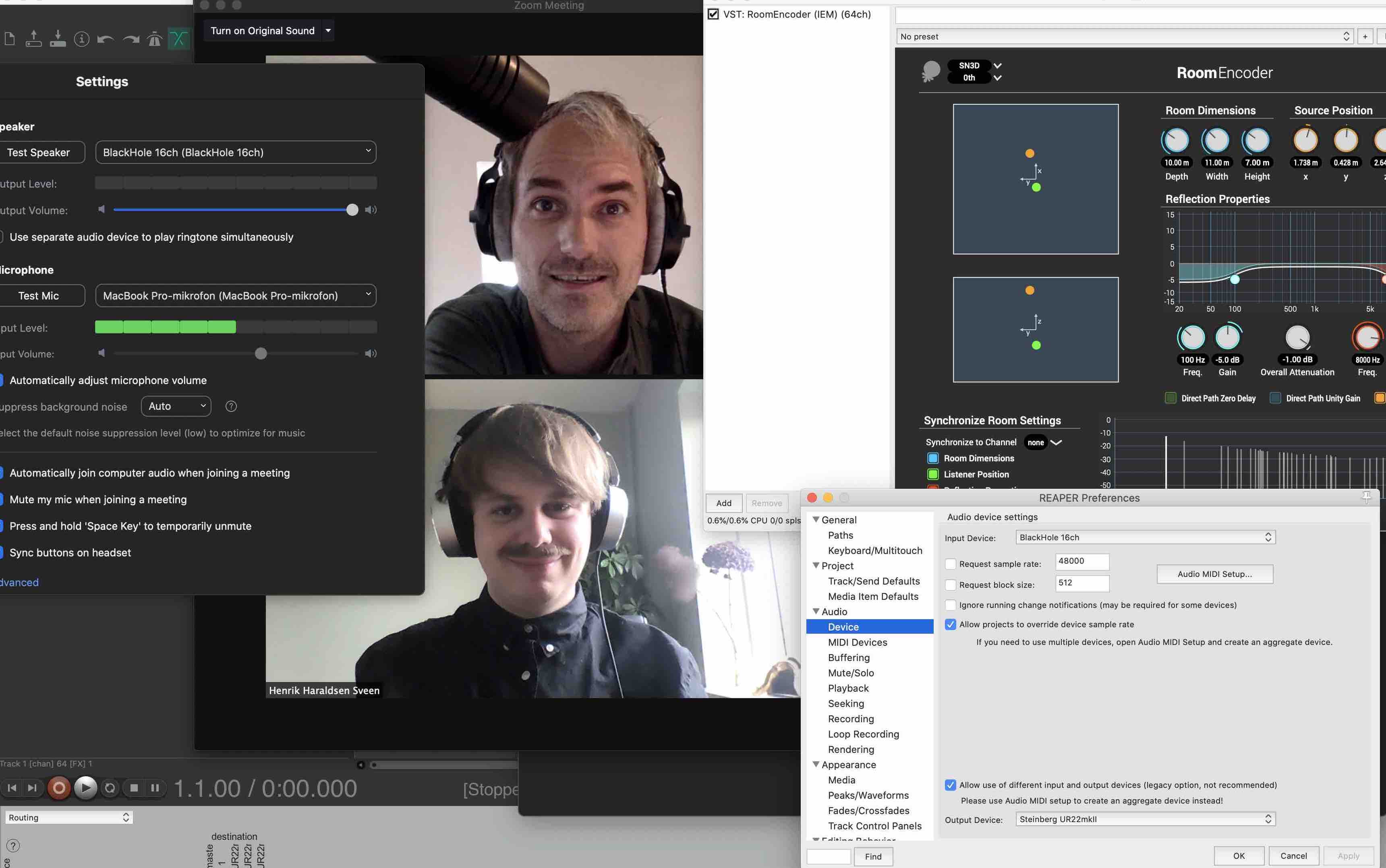

Spatial Audio with Max/MSP and SonoBus

This post's video tutorial introduces readers to the many ways SonoBus can be used as a VST in Max/MSP.

-

SonoBus setup - Standalone App and Reaper Plugin

Check out my tutorial on how to use SonoBus for your networked performance.

-

Integrating JackTrip and Sonobus in a DAW

How you can integrate both JackTrip and Sonobus into your DAW

-

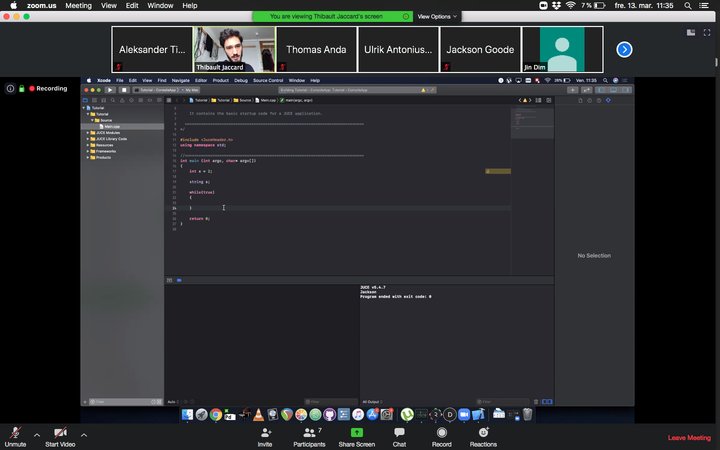

Pair Programming Over Network

Pair programming from different locations: is it possible?

-

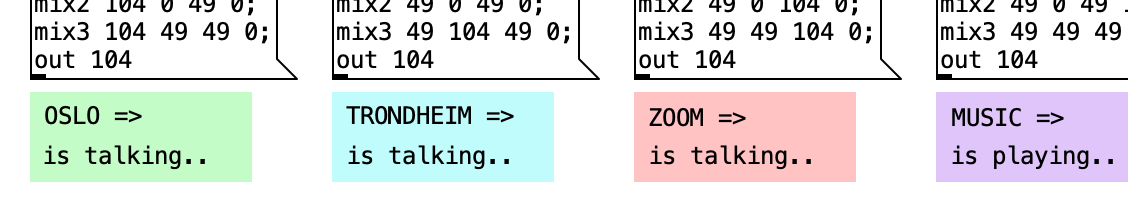

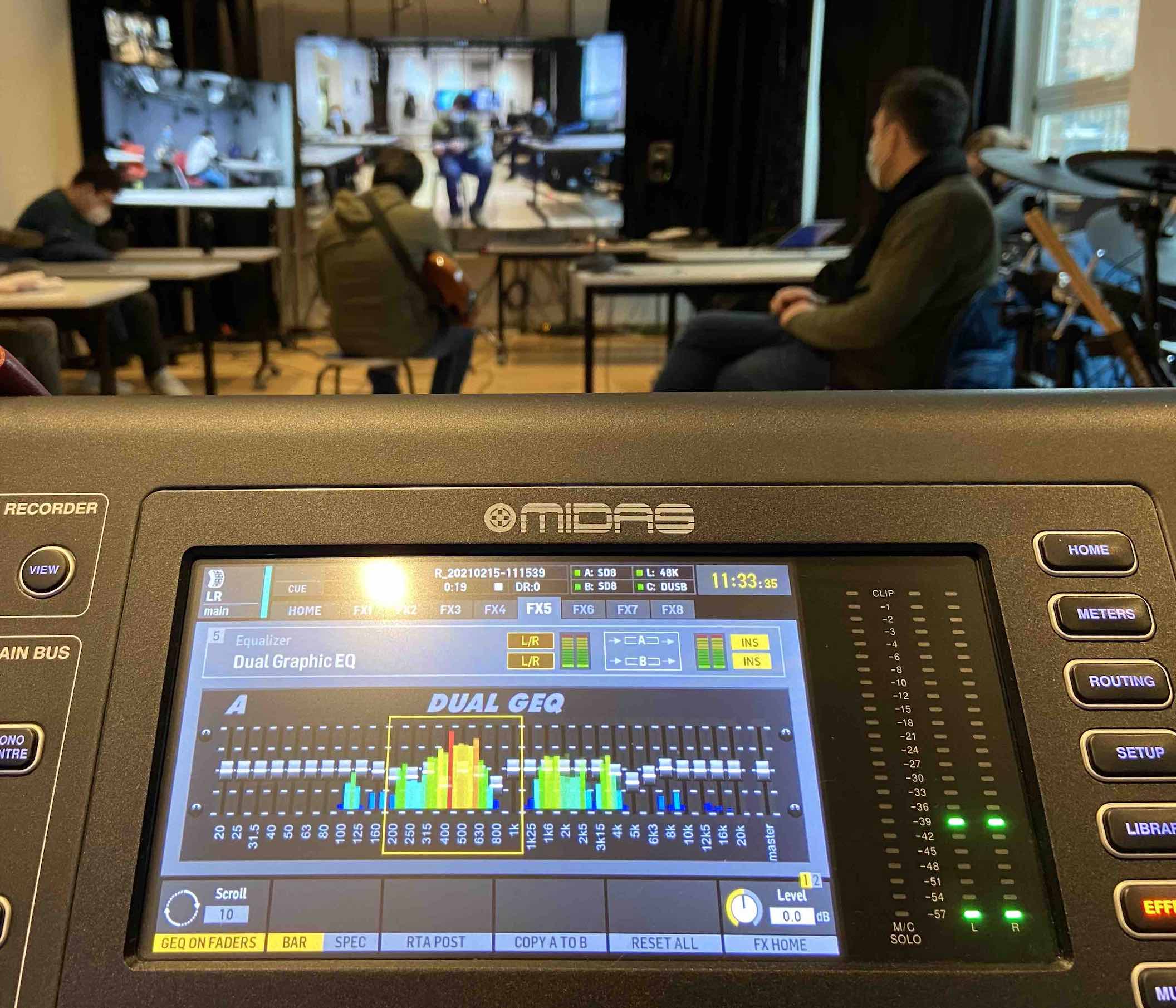

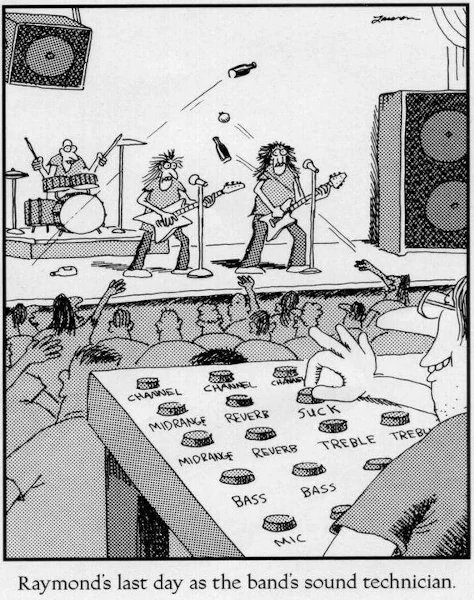

Audio Engineering for NMPs: Part 2

A deeper dive into mixer work for NMPs

-

MIDI music generation, the hassle of representing MIDI

A brief guide to the troubles with MIDI representation for generative AI

-

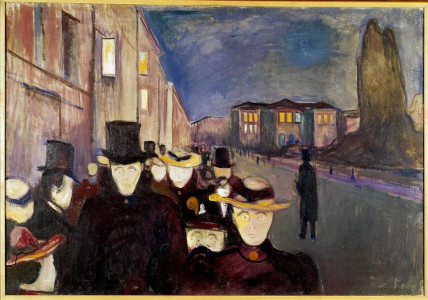

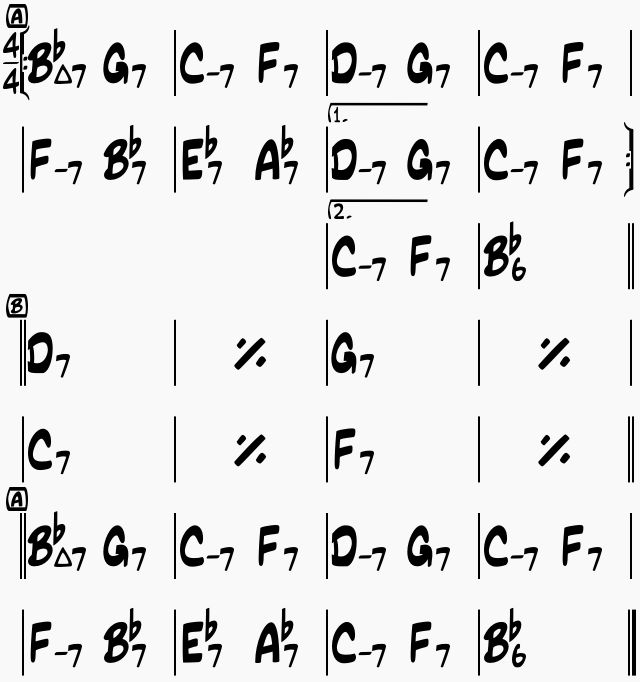

Playing Jazz Over Network

A live performance by Edvard Munch High School students with collaborative networked music technology.

-

Jazz Over the Network at 184,000km/h

We worked with local high school students to put together a jazz concert over the LOLA network. Here's a retrospective from Team RITMO.

-

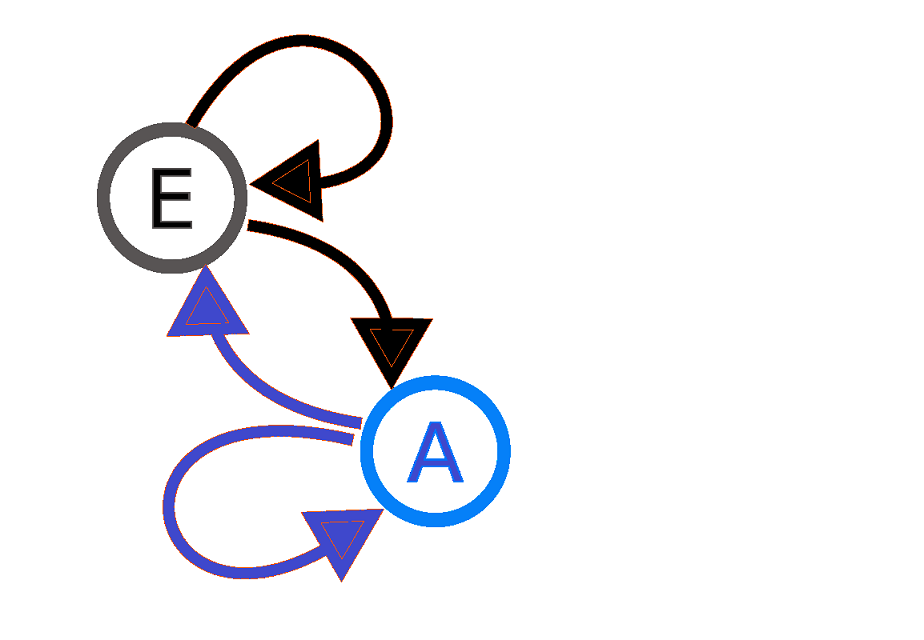

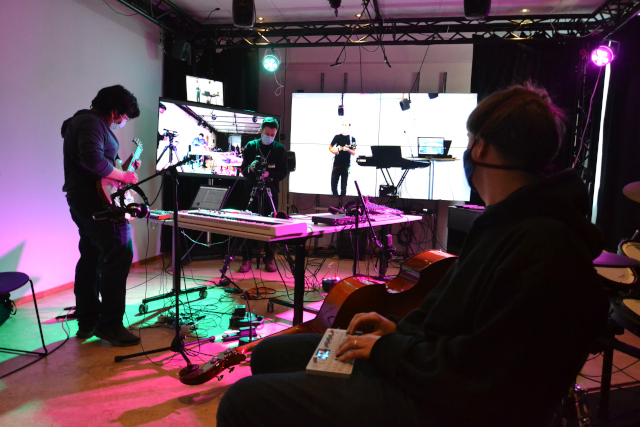

Testing Two Approaches to Performing with Latency

We tested two approaches to dealing with latency in network music. Read all about it!

-

Designing DFA and LAA Network Music Performances

Music Performances in High Latency

-

Markov Chain Core in PD

-

Make The Stocks Sound

-

MCT Blog Sonified!

Making sound out of this blog.

-

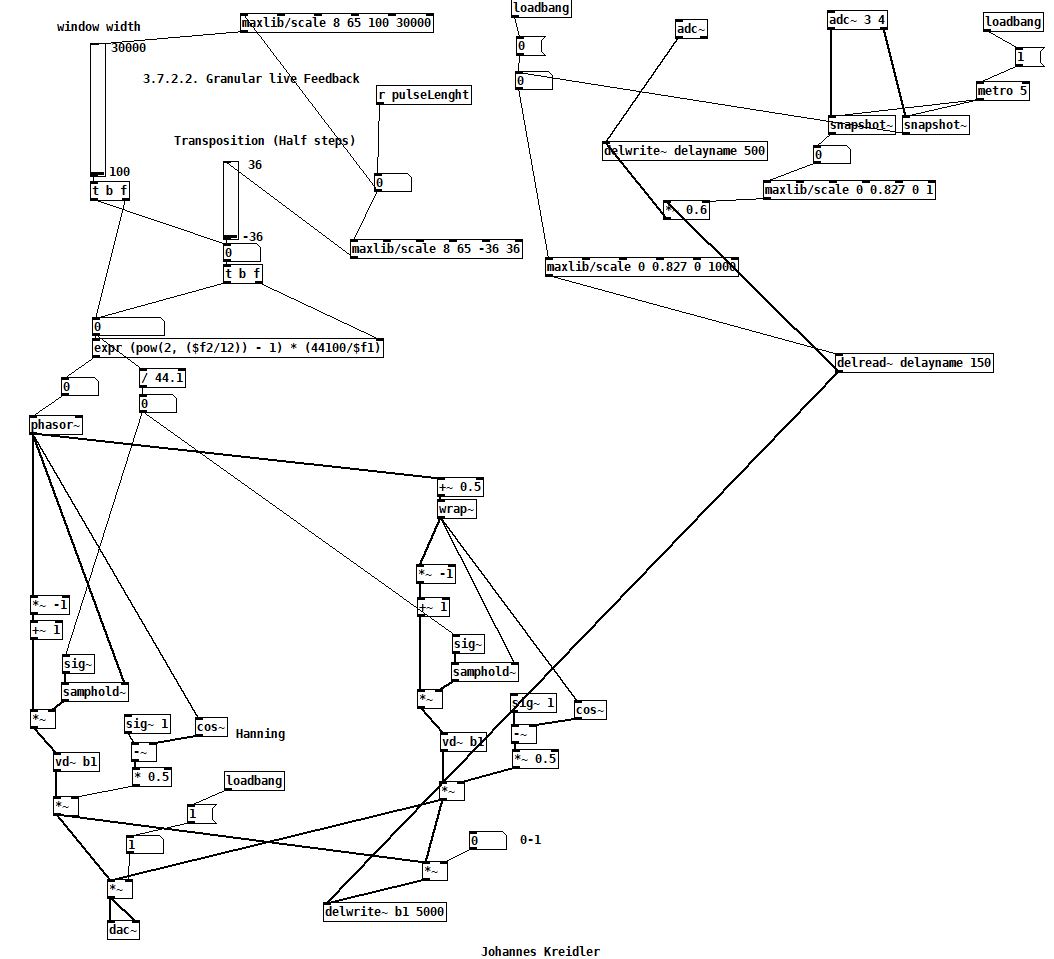

Sonifying the Northern Lights: Two Approaches in Python & Pure Data

Making 'music' from the Aurora Borealis.

-

Towards a Claptrap-Speaking Kastle Maus

Once upon a time, there was a maus living in a kastle...

-

Expressive Voice: an IMS for singing

Take a peek at my IMS and the reasoning behind its design.

-

Interactive Music Systems, Communication and Emotion

On IMSs and how they can express, and even induce, emotions.

-

The sound of rain

How we sonified the rainfall data.

-

Pringles, I love You (not)

No, that is not true. I do not like Pringles. But I like the tube they come in! That’s why I invited a friend over to eat the chips, so I could use the tube for my 4054 Interactive Music Systems project.

-

Three Takeaways as a musicologist

Recounting the experience of making an instrument from scratch for the first time.

-

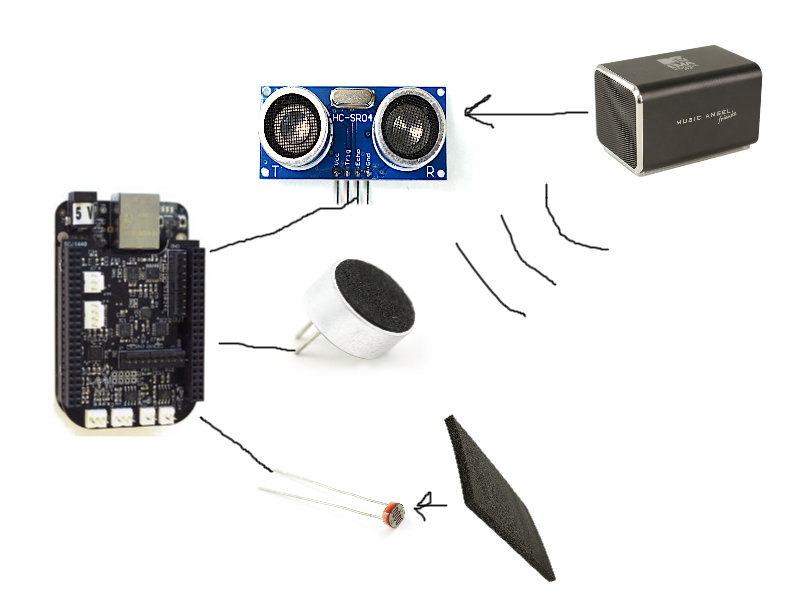

SR-01

The climate-aware synthesizer. Built from few components, it reacts to changes in the light and temperature around it, so it sounds different today than it would in a changed climate.

-

How to make your screen time a natural experience

Learn how playing with mud could equate to playing with a computer.

-

The Feedback Mop Cello: Making Music with Feedback - Part 2

Using a mop to play the feedback

-

Cellular Automata - Implementation in Pure Data

Check out the concept of Cellular Automata and my implementation in Pure Data.

-

Concussion Percussion: A Discussion

Whether it’s riding a bike or building a handpan-esque interactive music system, always remember to wear a helmet

-

Shimmerion - A String Synthesizer played with Light

Use your phone's flashlight to make music!

-

Clean code

Any fool can write code that a computer can understand. Good programmers write code that humans can understand.

-

A Christmas tale of cookie boxes and soldering

How I got the cookie box drum I never knew I wanted for Christmas

-

Creating Complex Filters in Pure Data with Biquad~

One approach to building/rebuilding complex filters in Pure Data, with a little help from Python.

-

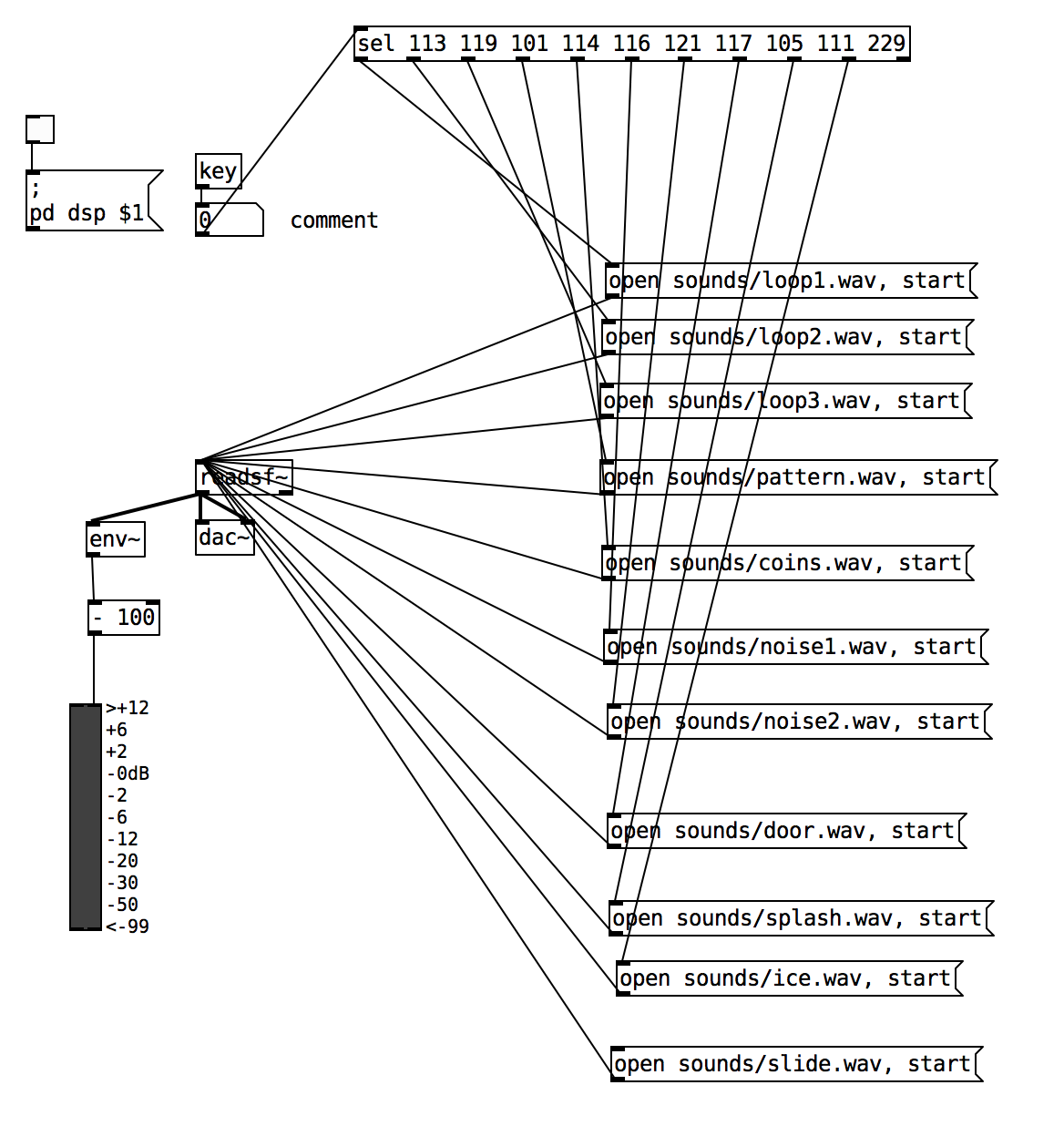

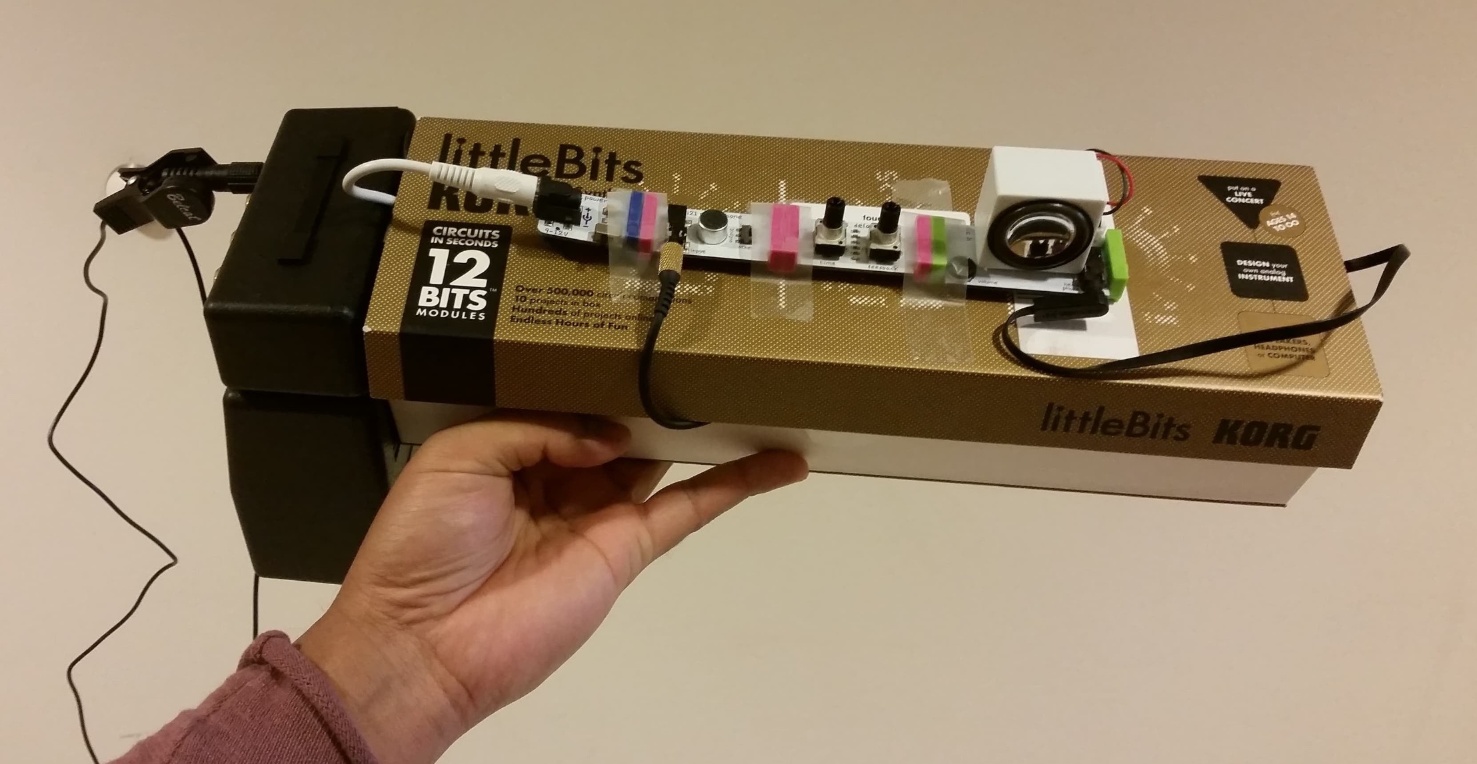

Out-Of-The-Box Sound Sources for your IMS

Exploring alternatives for generating sounds with your interactive music system.

-

The Feedbackquencer: Making Music with Feedback - Part 1

Using feedback in a sequencer

-

Wii controller as the Gestural Controller

Read this post to learn about a use for the Wiimote other than playing games on your Wii console.

-

Music By Laser: The Laser Harp

If you want to know how to play music with lasers, and maybe learn something about the laser harp, then you should give this a read.

-

JackTrip Vs Sonobus - Review and Comparison

Low-latency online music performance platforms

-

The MCT Audio Vocabulary

Read this post if you need explanations of jargon, mainly related to the Portal and the NMP kits.

-

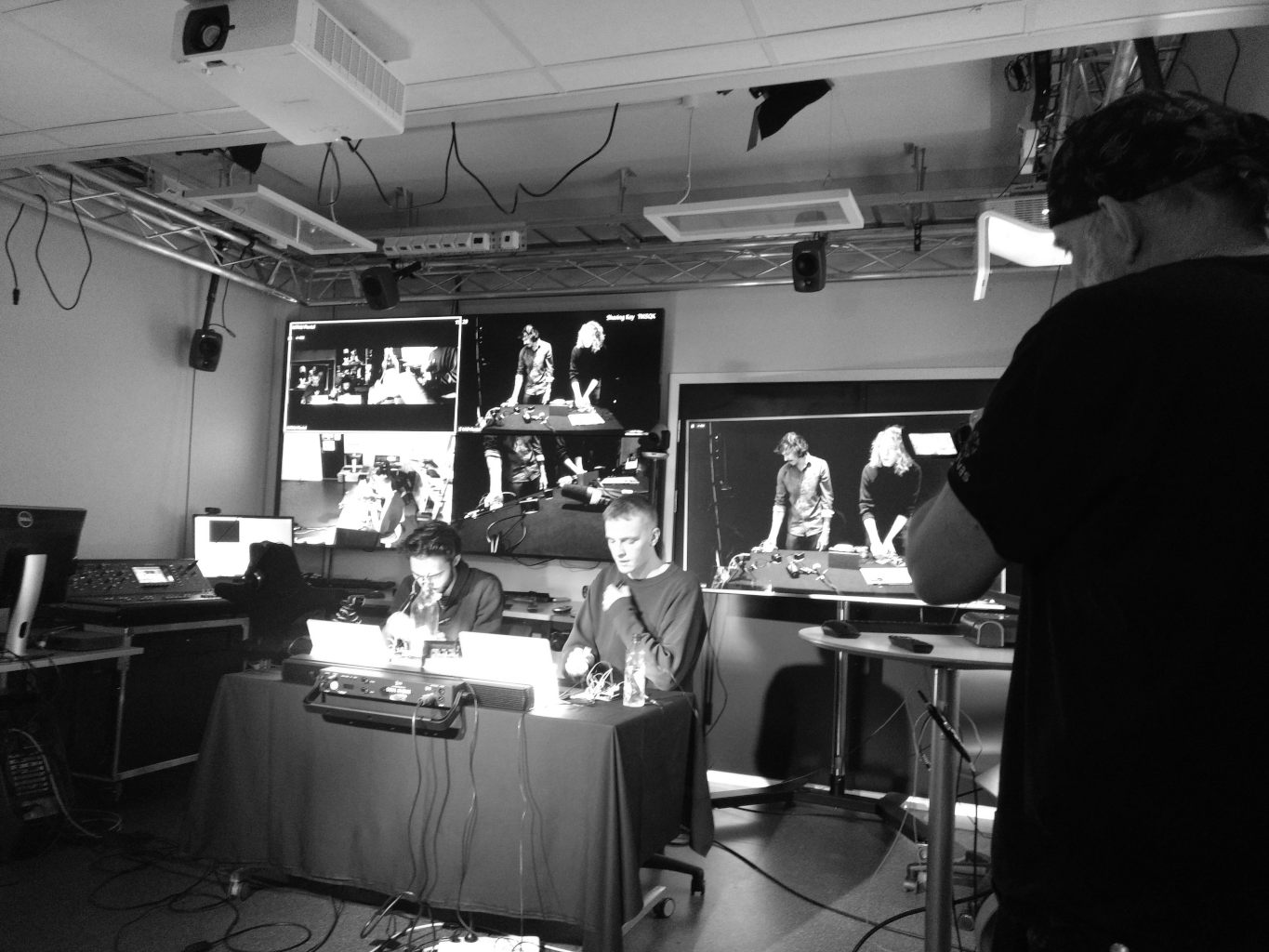

Making A Telematic Concert Happen - A Quick Technical Look

Background of A Telematic Experience

-

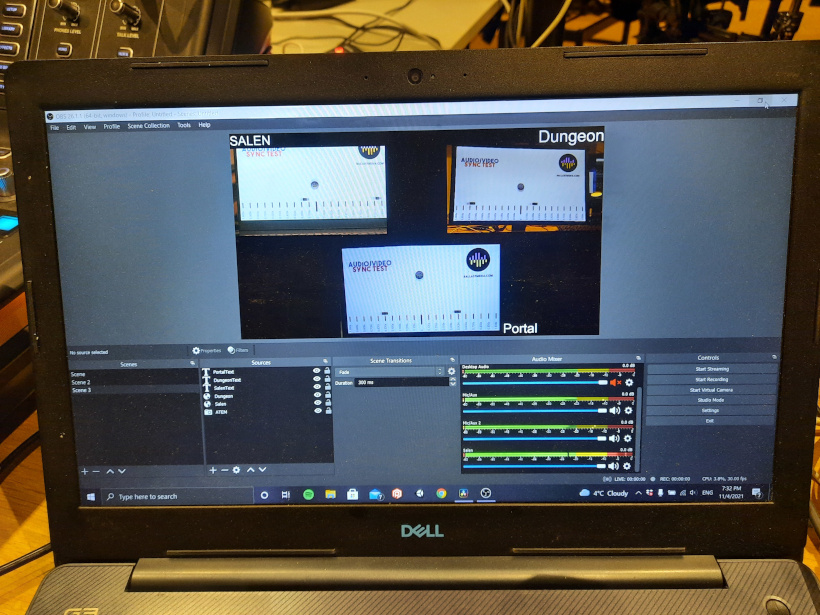

Live Streaming A Telematic Concert

Whys, How-tos and Life-Saving Tips on Telematic Concert Streaming.

-

Yggdrasil: An Environmentalist Interactive Music Installation

Plant trees and nurture your forest to generate sound!

-

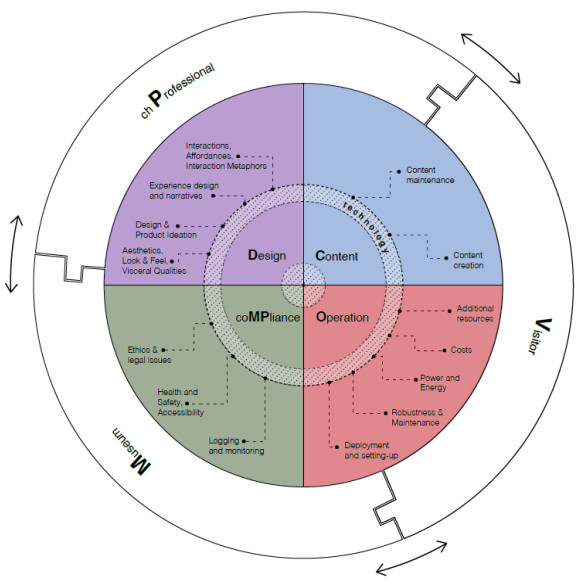

Why Your Exhibit Tech Failed (and how to fix it)

Why are visitors not using your installation? You might just disagree with yourself, the visitors, and your department or client about their needs and wants. The reason for this disagreement is always the same.

-

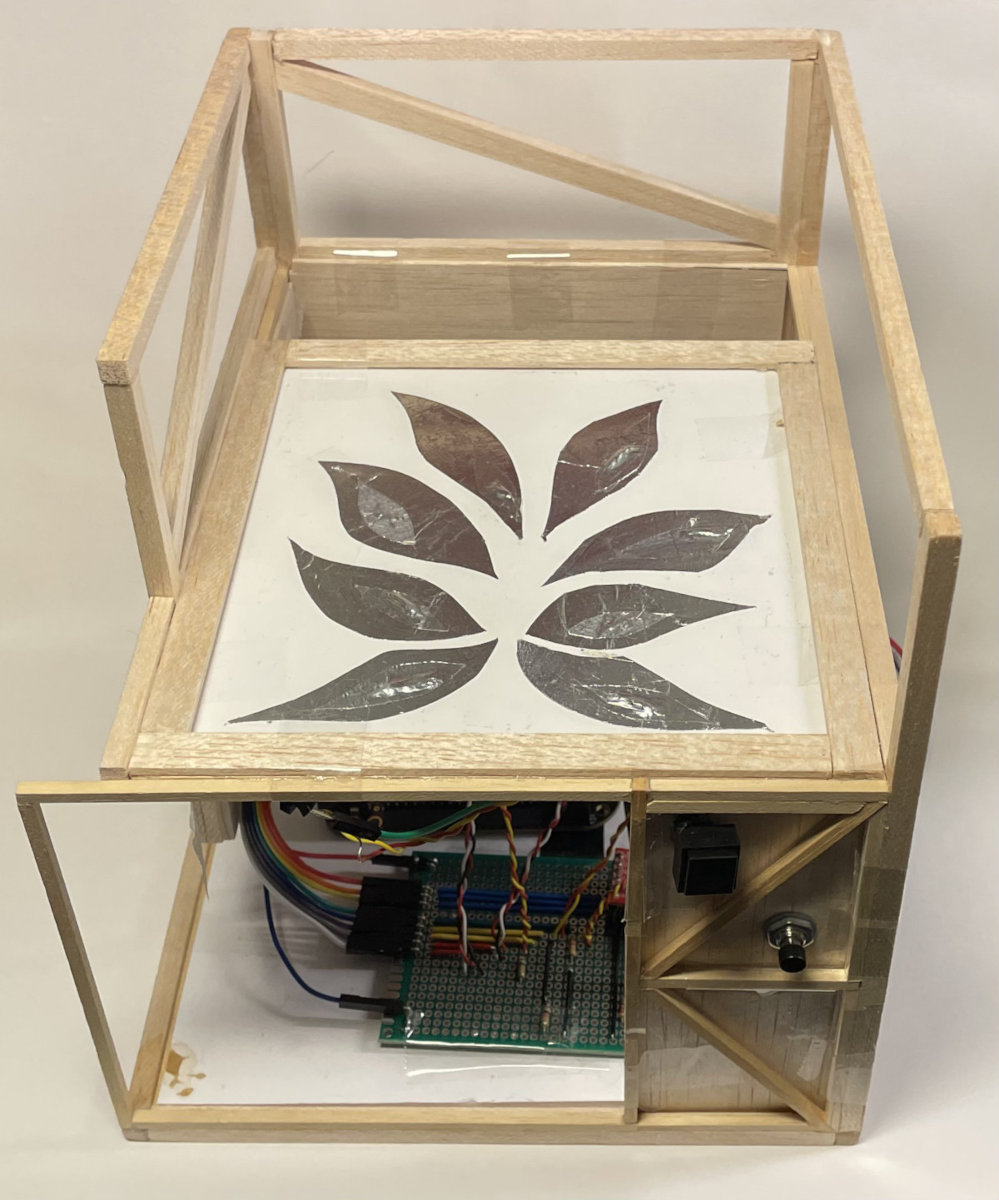

New Interface for Sound Evolution (NISE)

Robiohead is an attempt, in a box, to explore how an Interactive Music System (IMS) can offer ways of exploring sound spaces by evolving sound-producing genes in a tactile manner.

-

In search of sounds

Are you looking for sounds to inspire your next project? At synth.is you can discover new sounds by evolving their genes, either interactively or automatically.

-

An instrument, composition and performance. Meet the Transformer #1

A review of Natasha Barrett's Transformer #1

-

BlackHole, Open Source macOS Virtual Audio Device Solution for Telematic Performance

A macOS guide to BlackHole

-

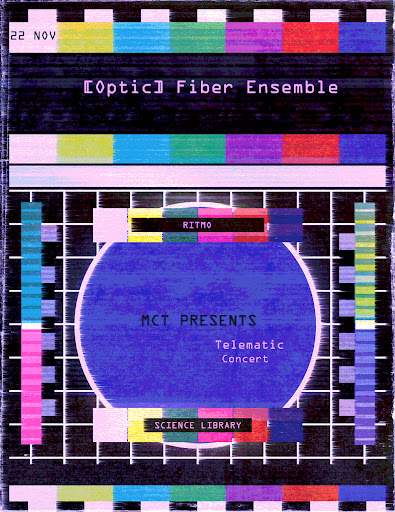

The Optic-Fibre Ensemble

Folk inspired soundscapes + No input mixing=True

-

Bringing the (Optic) Fibre Ensemble to Life - Behind the Scenes of a Telematic Music Performance

What does it take to put on a telematic music performance? Cable spaghetti of course!

-

Telematic Concert between Salen and Portal - Performers’ Reflections

Reflections on the semester's first telematic concert

-

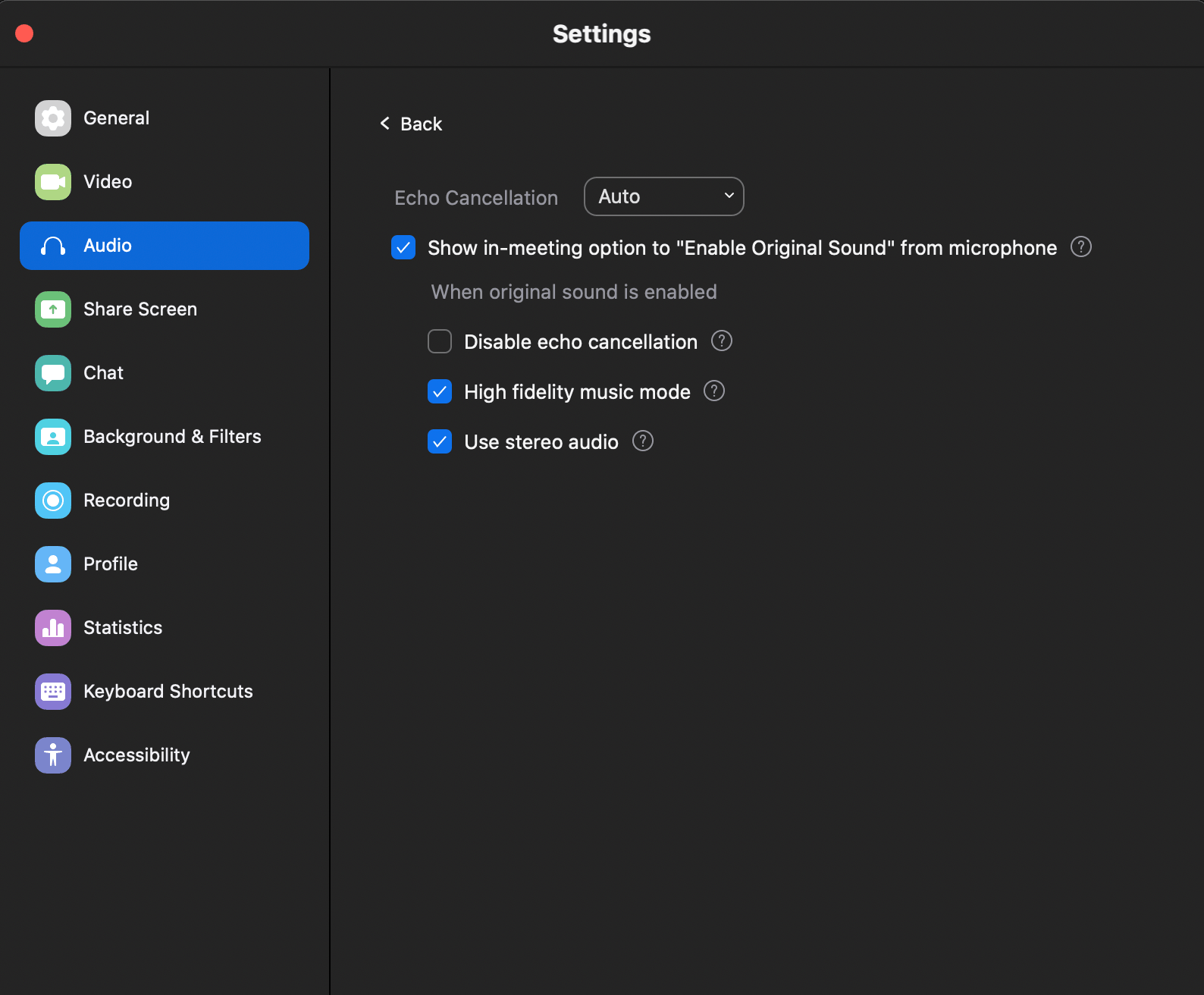

Music Mode for Online Meetings

It is possible to use popular meeting products for music!

-

Performing & Recording with JackTrip

Performing and recording network music at home? Thanks to JackTrip, now you can do it too.

-

Zeusaphone - The singing Tesla coil

Have you ever seen choreographed lightning?

-

Audio Engineering for Network Music Performances

How much more difficult could it POSSIBLY be?

-

Rehearsing Music Over the Network

My experiences with rehearsing music telematically and some tips.

-

Dråpen, the world's largest interactive music system?

This may be the largest interactive music system you have heard about

-

A Contact Microphone And A Dream: To Loop

Join a humble contact microphone on its quest for freedom!

-

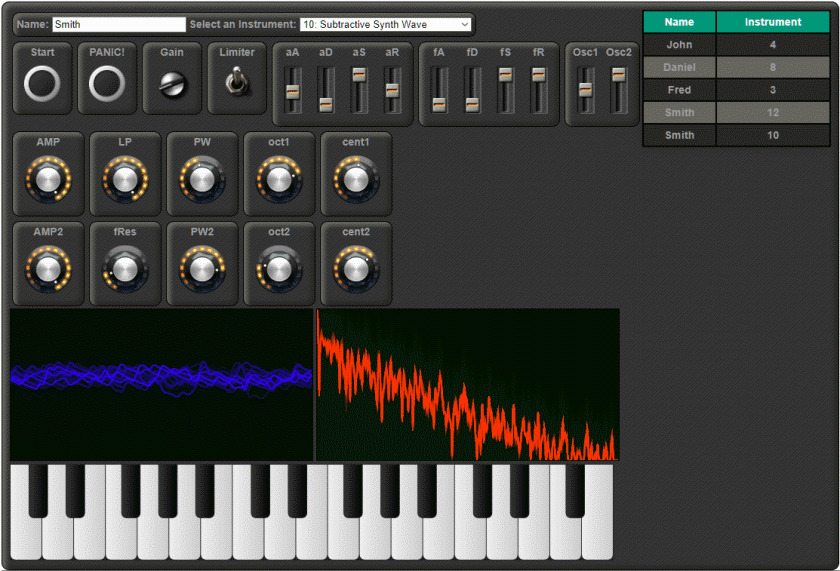

FAM Synthesizer

A simple digital synthesizer with the potential of sounding big, complex and kind of analog. Screenless menudiving included.

-

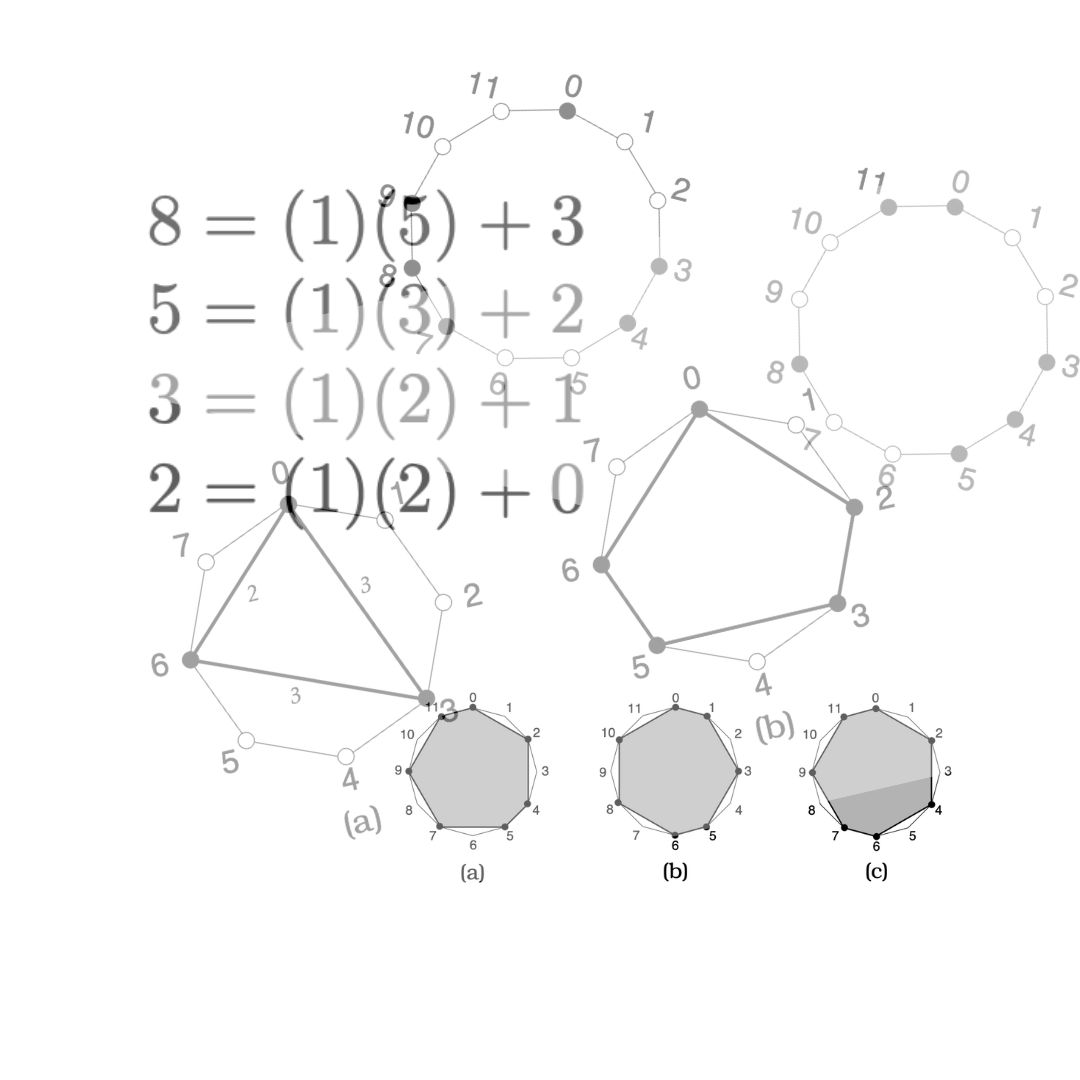

Euclidean Rhythms in Pure Data

What are Euclidean Rhythms and how can you program them?

-

The impact and importance of network-based musical collaboration (in the post-covid world)

The Covid-19 pandemic offered a unique opportunity (and necessity) to focus on the creative usage (and further development) of the technological tools used for network-based musical collaboration.

-

How to do No-Input Mixing in Pure Data

No-Input Mixing in Pure Data

-

Norway's First 5G Networked Music Performance

We played Norway's first 5G networked music performance in collaboration with Telenor Research.

-

One Last Hoorah: A Telematic Concert in the Science Library

In which we go over the most ambitious telematic concert of our MCT careers.

-

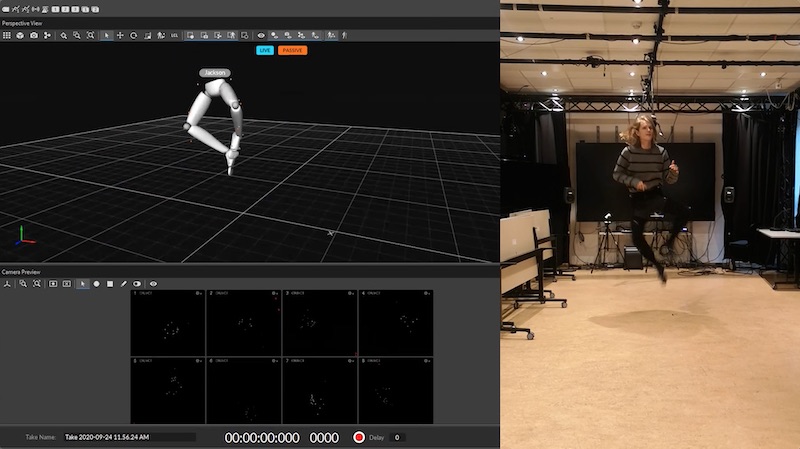

Spring Telematic Concert 2022: Portal Perspectives

Guitar and MoCap in the Portal + behind the scenes documentary!

-

Reverb Classification of wet audio signals

Differentiating reverberation times of wet audio signals using machine learning.

-

Piano Accompaniment Generation Using Deep Neural Networks

How I made use of Fourier Transforms in deep learning to generate expressive MIDI piano accompaniments.

-

Recognizing and Predicting Individual Drumming Groove Styles Using Artificial Neural Networks

Can we teach an algorithm to groove exactly like a specific drummer?

-

Developing Techniques for Air Drumming Using Video Capture and Accelerometers

Creating MIDI scores using only data from air drumming

-

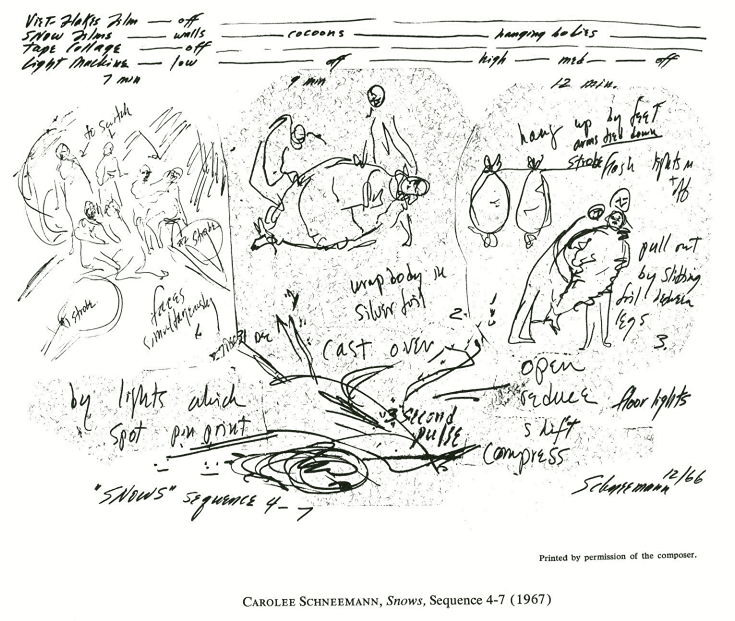

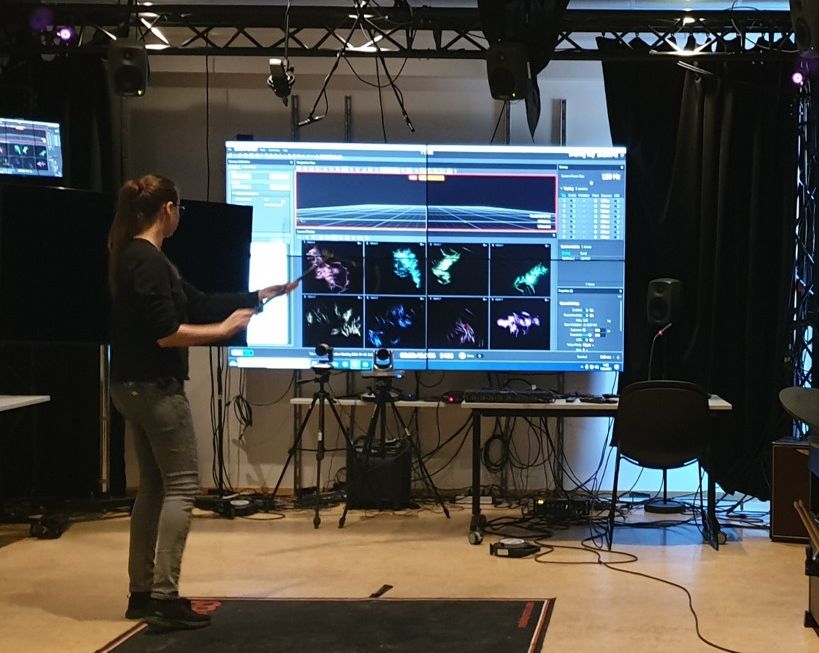

Reconfigurations: Reconfiguring the Captured Body in Dance

Building cooperative visual, kinaesthetic, and sonic bodies

-

Estimating the repertoire size in birds

Estimating the repertoire size in birds using unsupervised clustering techniques

-

Myo My – That keyboard sure tastes good with some ZOIA on top

Extending the keyboard through gestures and modular synthesis.

-

Emulating analog guitar pedals with Recurrent Neural Networks

Using LSTM recurrent neural networks to model two analog guitar pedals.

-

Playing music standing vs. seated: what's the difference?

A study of saxophonists in seated vs. standing positions

-

What is a gesture?

There are many takes on gesticulation and its meanings; however, I wanted to take the time to delimit what a gesture is, its possible categories, and gesturing patterns.

-

Generating Samples Through Dancing

Using a VAE to build a generative sampler instrument

-

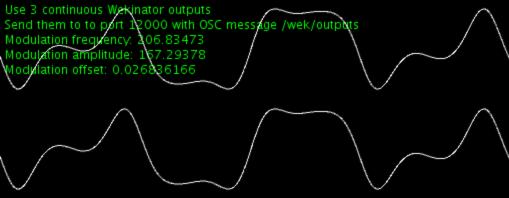

Setting up a controlled environment

Taking advantage of light-weight control messages to do Networked Music Performances

-

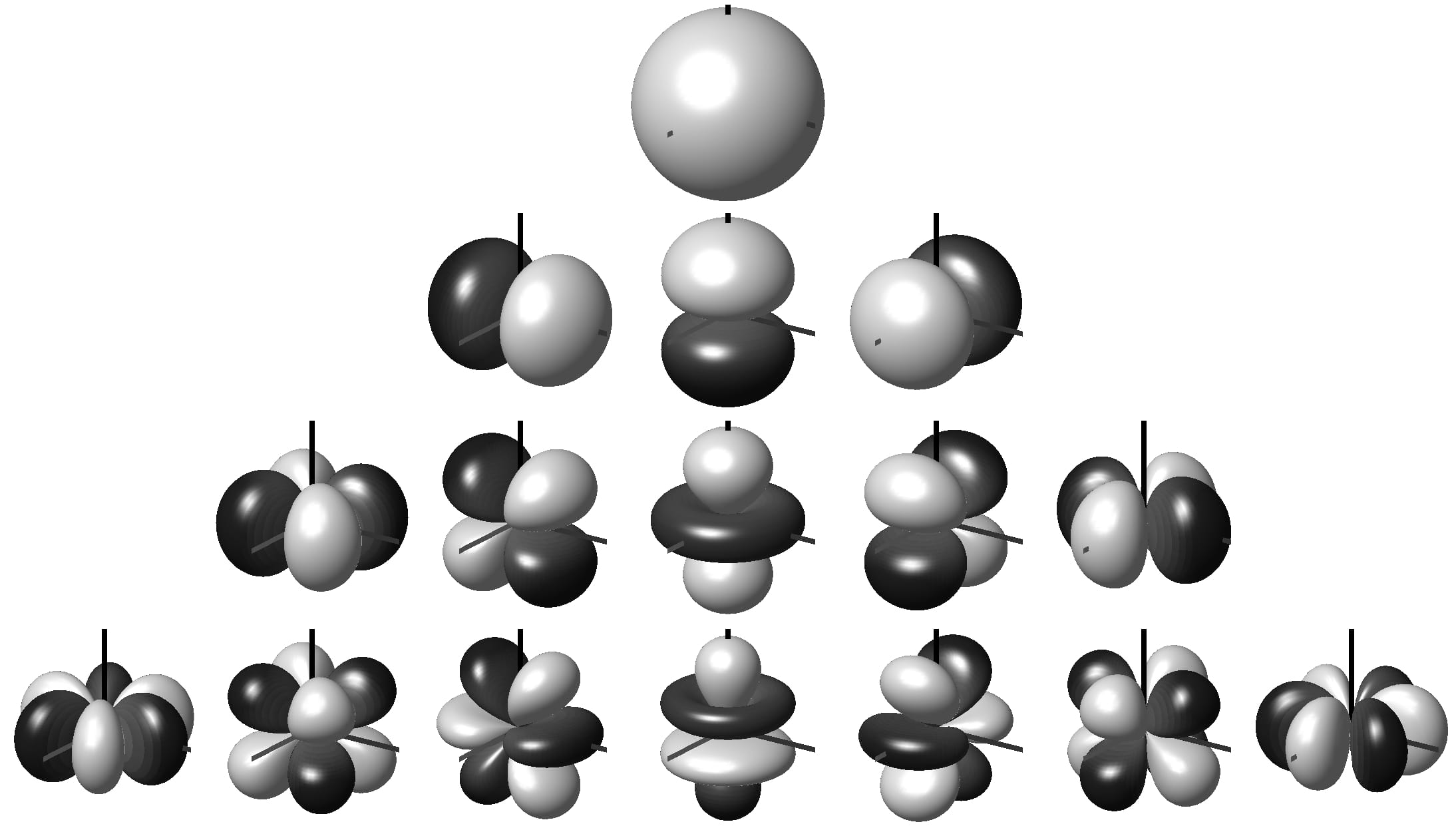

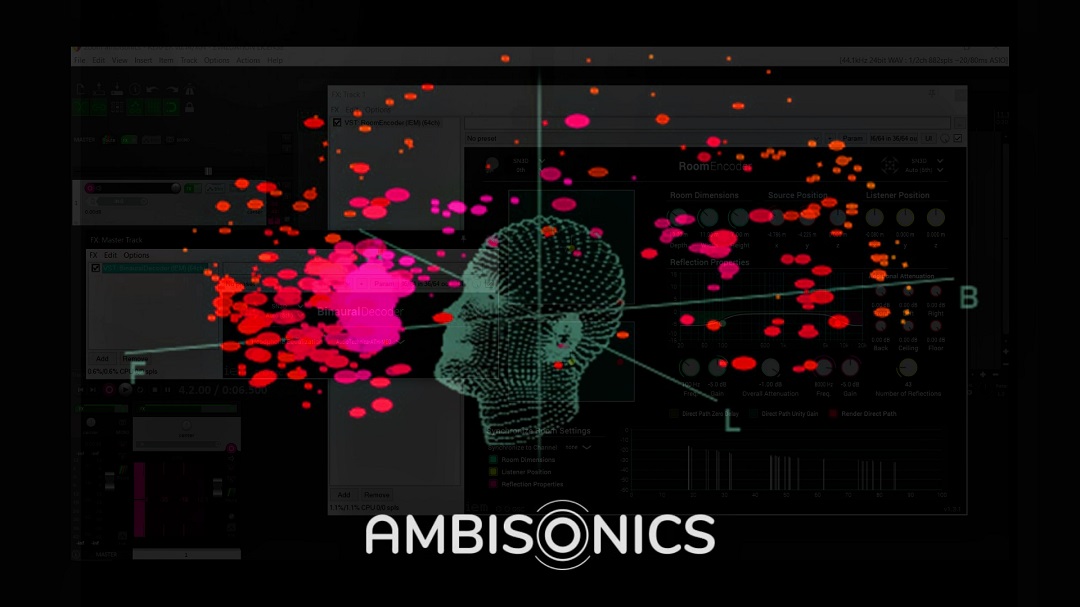

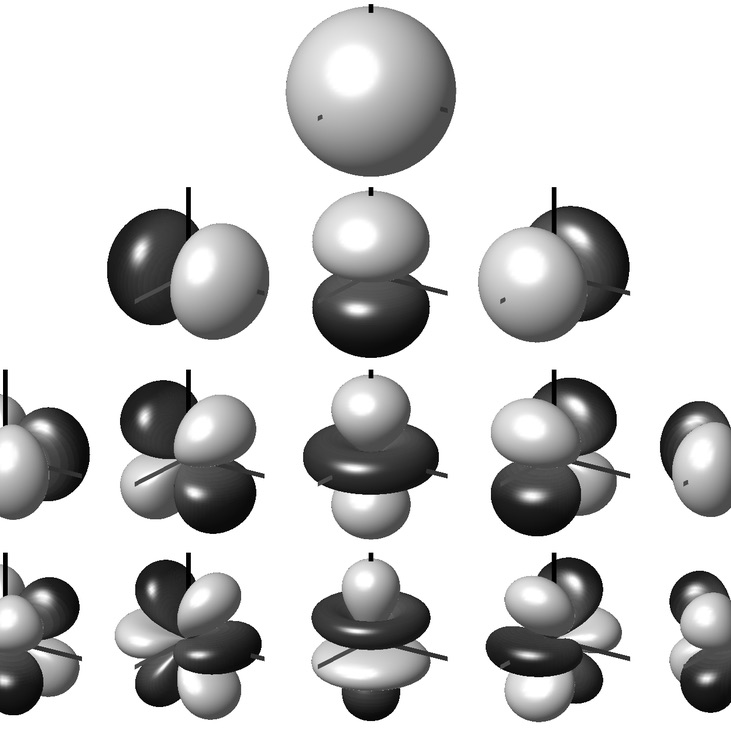

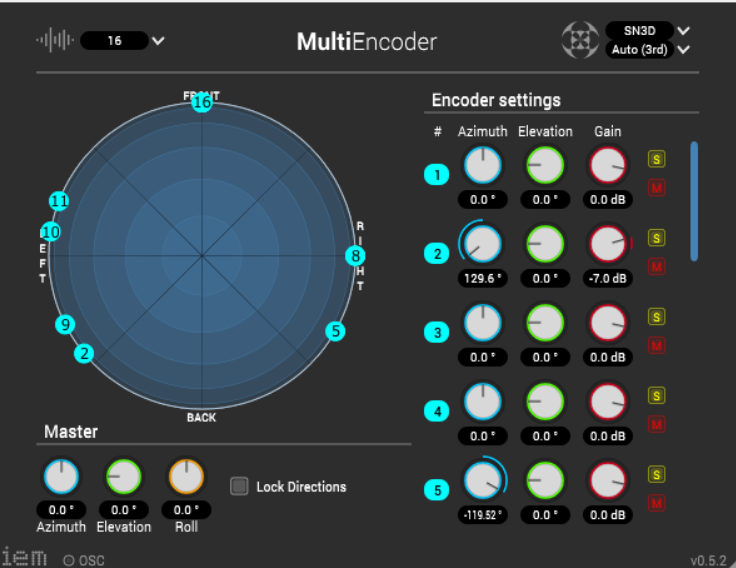

Ambisonics: Under the Hood

What happens when we encode/decode Ambisonics

-

How to Set Up Hybrid Learning Environments

Learn how to set up a hybrid learning environment, ranging from simple to complex, serving as a starting point to help your classroom catch up to the digital age.

-

Managing Network Performance

Managing Network Performance using Python

-

5G Networked Music Performances - Will It Work?

In collaboration with Telenor Research, we explored the prospects of doing networked music performances over 5G. Here are the preliminary results.

-

Generating Music Based on Webcam Input

Do you miss the PS2 EyeToy? Then you have to check this out!

-

Using Live OSC Data From Smartphones To Make Music With Sonic Pi

Easy as pi!

-

Mastering Latency

Testing two techniques to work with latency when playing music telematically

-

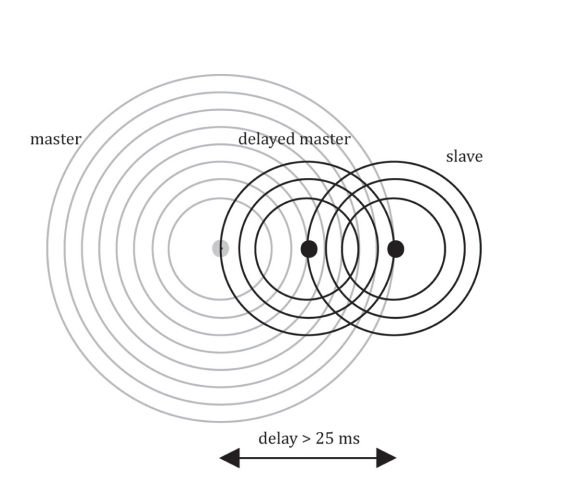

Latency: To Accept or to Delay Feedback?

We tested two of the solutions—the Delayed Feedback Approach (DFA) and the Latency Accepting Approach (LAA)—so you don’t have to!

-

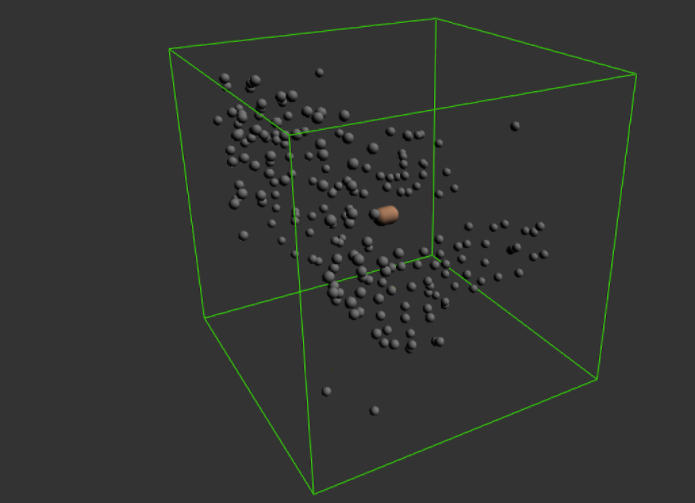

'Chasing Stars': An interactive spatial audio application

Let's explore an interactive 3D-audio application set in an ethereal soundscape.

-

The Granjular Christmas Concert (Portal View)

A report on our telematic performance in the Portal.

-

The Telematic Experience: Space in Sound in Space

A report on our telematic experience in Salen

-

Setting Levels in Virtual Communication

Inspired by how virtual communication has been adopted and integrated in daily life around the globe, this post looks at how simple control messages might help prevent acoustic feedback.

-

Chorale Rearranger: Chopping Up Bach

Can we use MIDI data to rearrange a recording of a Bach chorale?

-

Mixing a multitrack project in Python

Attempting to mix a multitrack song with homemade FX in our very own mini PythonDAW

-

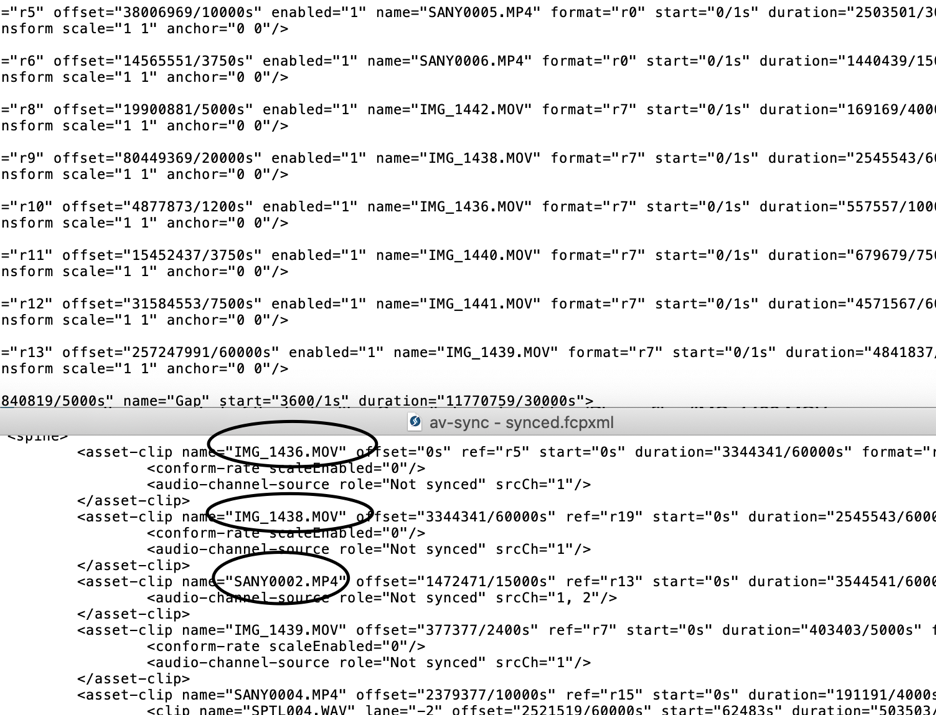

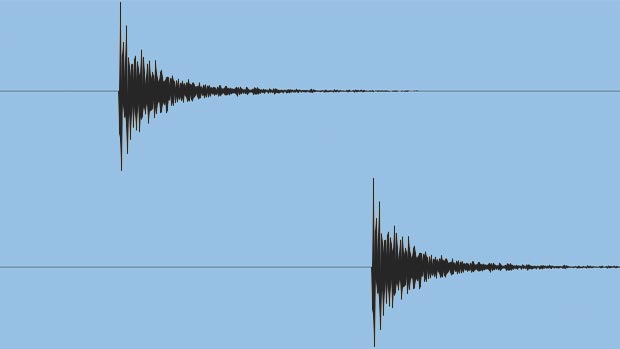

Audio-video sync

Because sound travels much more slowly through air than light does, our brains are used to seeing before hearing.

-

Video latency: definition, key concepts, and examples

This blog post accompanies the video lecture on the same topic. It includes a definition of video latency and other related key concepts, as well as concrete examples from the MCT Portals.

-

Latency as an opportunity to embrace

How can we turn unavoidable latency into an integral part of telematic performance?

-

Audio Latency in the Telematic Setting

Latency and its fundamentals in the telematic performance context.

-

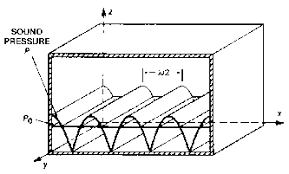

Room acoustics: what are room modes and how do they influence the physical space?

This blog post explains what room modes are, how they affect the physical space and what can be done about it. It was made together with a video lecture.

-

Audio-Video Synchronization for Streaming

This approach considers a streaming solution from multiple sources and different locations (Salen, Video Room, Portal)

-

Touchpoint that can potentially improve the audio latency in a communication system

Quick tips for future MCT-ers or external partners who will be using the MCT Portal for the first time: this article gives you practical information to start with improving audio latency.

-

A short post about feedback

Feeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeedback

-

Telematic Conducting: Modelling real-world orchestral tendencies via video latency

A conductor in one portal. An orchestra in another. What could go wrong?

-

EarSketch (Or How to Get More Python in Your Life)

A review of the asynchronous music production software 'EarSketch'

-

SkyTracks: What’s the Use?

A review and critique of the online DAW SkyTracks for asynchronous collaboration

-

The Triadmin

An instrument without any tangible interface.

-

The algorithmic note stack juggler

Interactive composition with the Algorithmic Note Stack Juggler.

-

Satellite Sessions - Connecting DAWs

A review of Satellite Sessions, a plugin that connects digital audio workstations and creators.

-

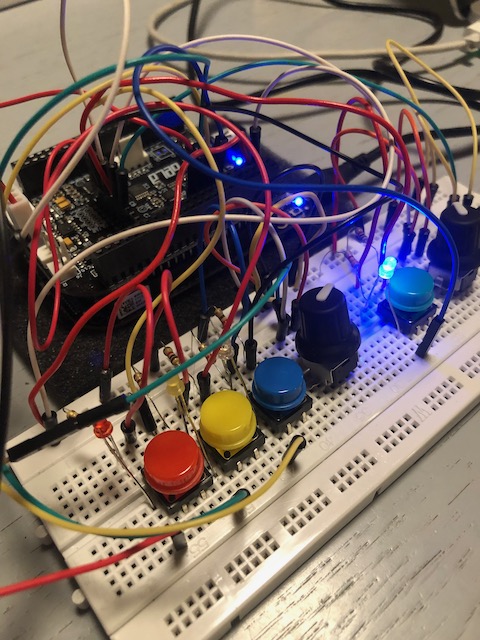

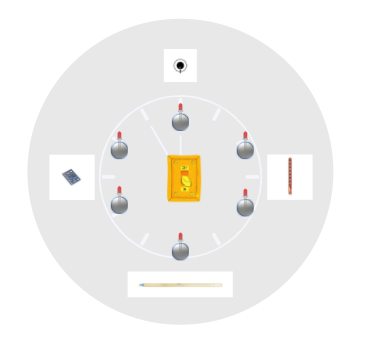

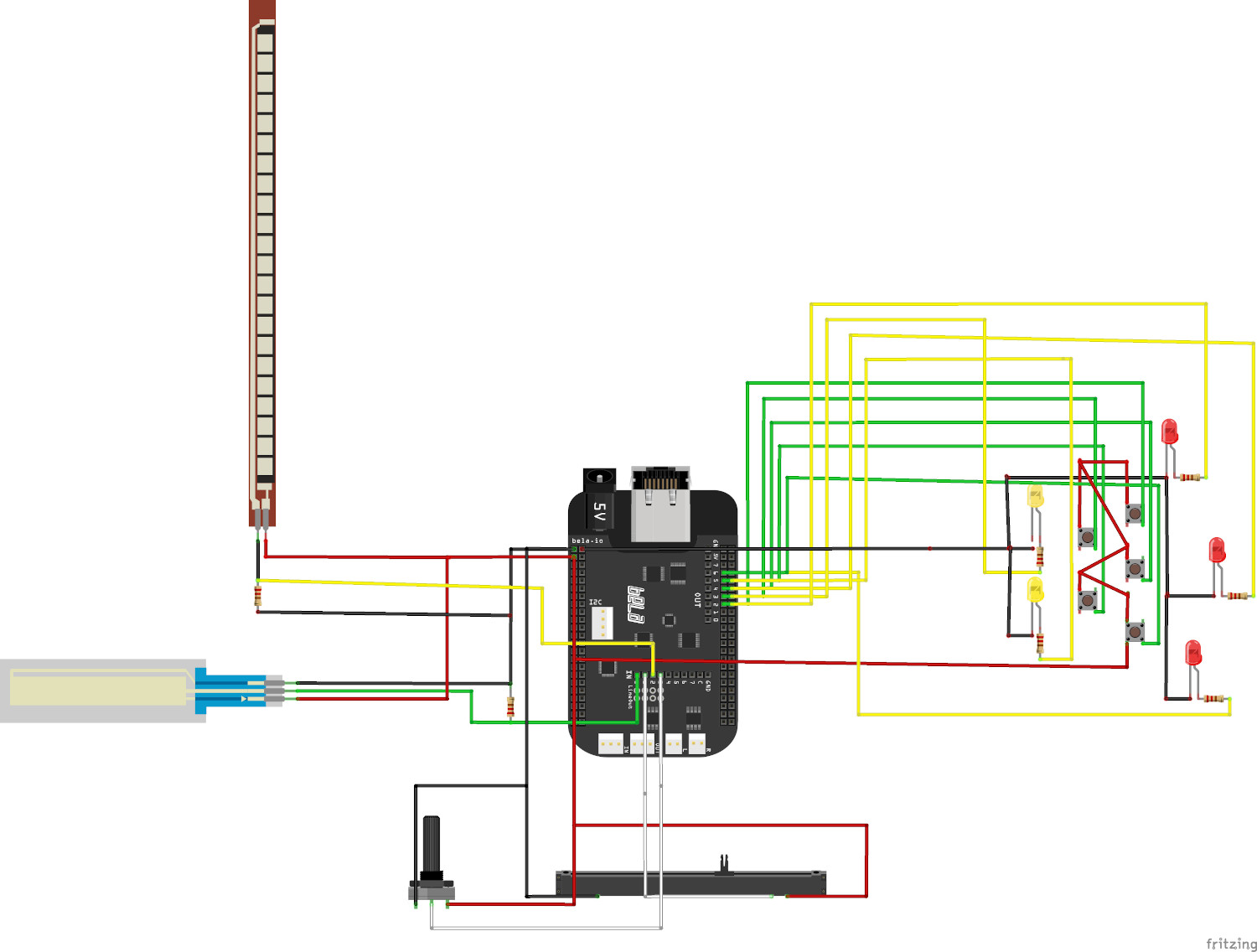

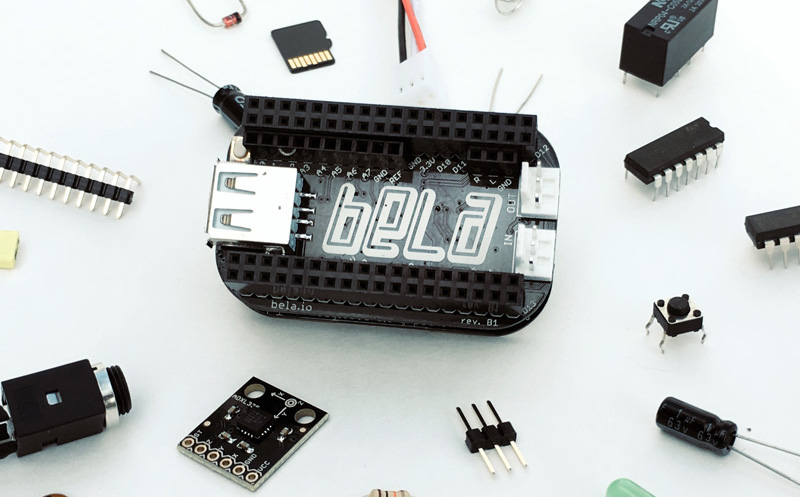

Sequencephere-Linesequencer

Exploration and design of a drum sequencer and synth using Bela as an interactive music system with Csound

-

D'n'B

Exploration and design of the 'Drum and Bass' interactive music system with Csound

-

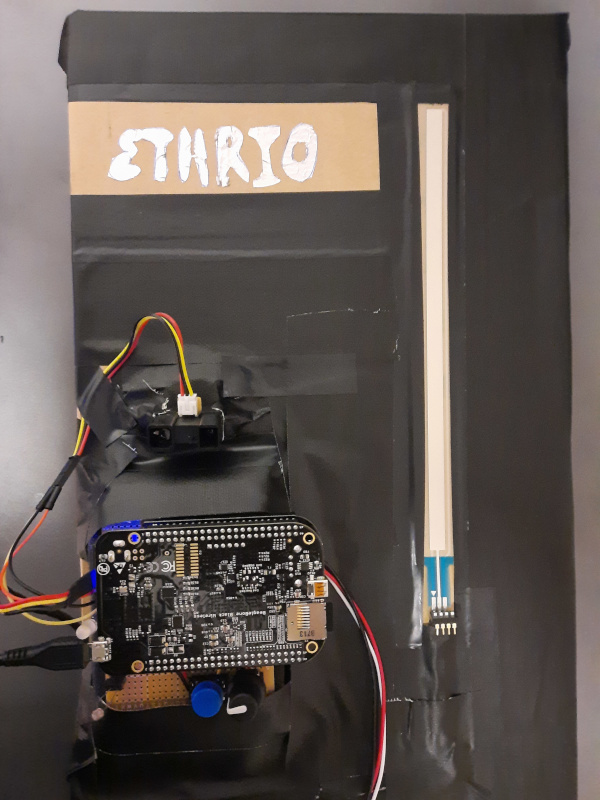

Ethrio

Ethereal sounds from the three dimensions of music: Melody, Harmony, and Rhythm.

-

Soundation Review: What to Expect

A concise review of the collaborative online DAW: Soundation.

-

Audio Blending in Python

Blending audio tracks based on transients in Python.

-

A Brief History of Improvisation Through Network Systems

A glimpse into the evolution of online improvisation and shared sonic environments.

-

Embodiment and Awareness in Telematic Music and Virtual Reality

A discussion of what embodiment and awareness mean, and how they are used in telematic music and VR.

-

Telematic Reality Check: An Evaluation of Design Principles for Telematic Music Applications in VR Environments

7 steps for better virtual reality music applications!

-

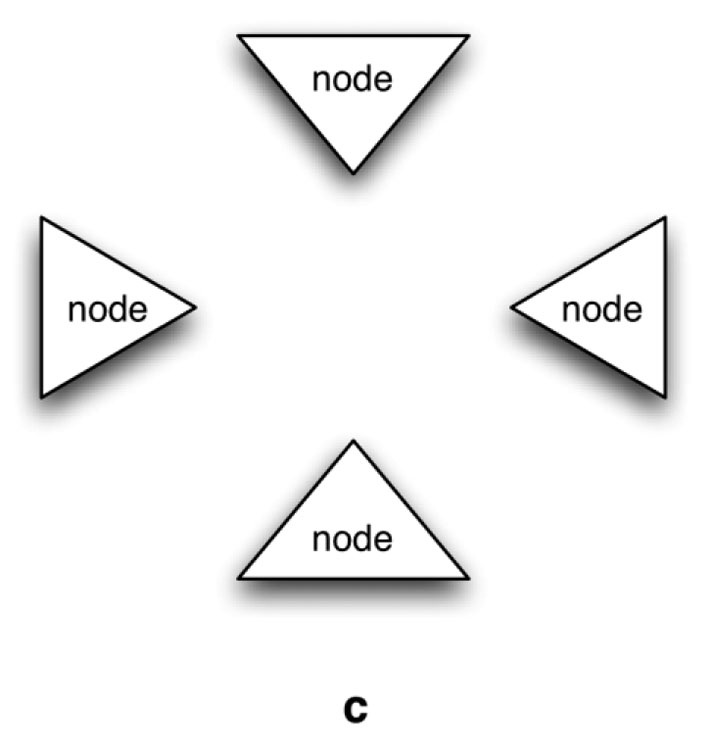

Applying Actor-Network Methodology to Telematic Network Topologies

An inquiry into the application of an actor-network theory methodology to Gil Weinberg's telematic musical network topologies.

-

Telematic performance and communication: tools to fight loneliness towards a future of connection.

With telematic interaction on the rise during a global pandemic, we should explore telematic performances to help prevent loneliness and feelings of isolation through art.

-

Approaches Toward Algorithmic Interdependence in Musical Performance

Is it possible to program interdependent algorithms to perform with each other?

-

Fight latency with latency

Alternative design approaches for telematic music systems.

-

Can machine learning classify audio effects, from a dry to a wet sound?

Distortion or No Distortion - Machine learning magic

-

Ensemble algorithms and music classification

Playing around with some supervised machine learning - genre classification is hard!

-

Internet time delay: a new musical language for a new time basis

Logistical considerations for large-scale telematic performances involving geographically displaced contributors remain strongly present. If networked performers are still subject to the vagaries of speed and bandwidth across multiple networks, and if latency remains a significant issue for audio-visual streaming of live Network Music Performance (NMP), one can instead reflect on finding a new musical language for a new time basis.

-

Classifying Classical Piano Music Based On Composer’s Native Language Using Machine Learning

How does the language we speak help computers to classify classical music?

-

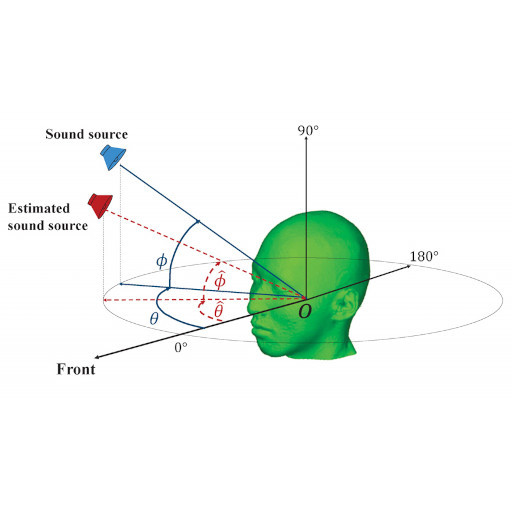

Estimation of Direction of Arrival (DOA) for First Order Ambisonic (FOA) Audio Files

Where is that sound coming from? Let's explore how a machine could answer this question.

-

Ensemble algorithms and music classification

'Why is shoegaze so hard to classify?' and other pertinent questions for the technologically inclined indie-kid.

-

Concert preparations: exploring the Portal

What we learned about the Portal and telematic performances in general while preparing our musical pieces for the end of semester concert. Details about our instrumentation and effects.

-

Preparing the NTNU Portal for the Spring 2021 Concert

How the NTNU Portal was set up for the spring concert.

-

End of semester reflections for the Portal experience

The first physical portal experience during the pandemic

-

The collision of Jazz and Classical

The rehearsal experience for our telematic performance.

-

The MCT Portal through the eyes of a noob

The experiences I had in the MCT Portal during the spring 2021 semester and my evolution from a complete beginner to an almost-average user of a technical and awesome room like the MCT Portal.

-

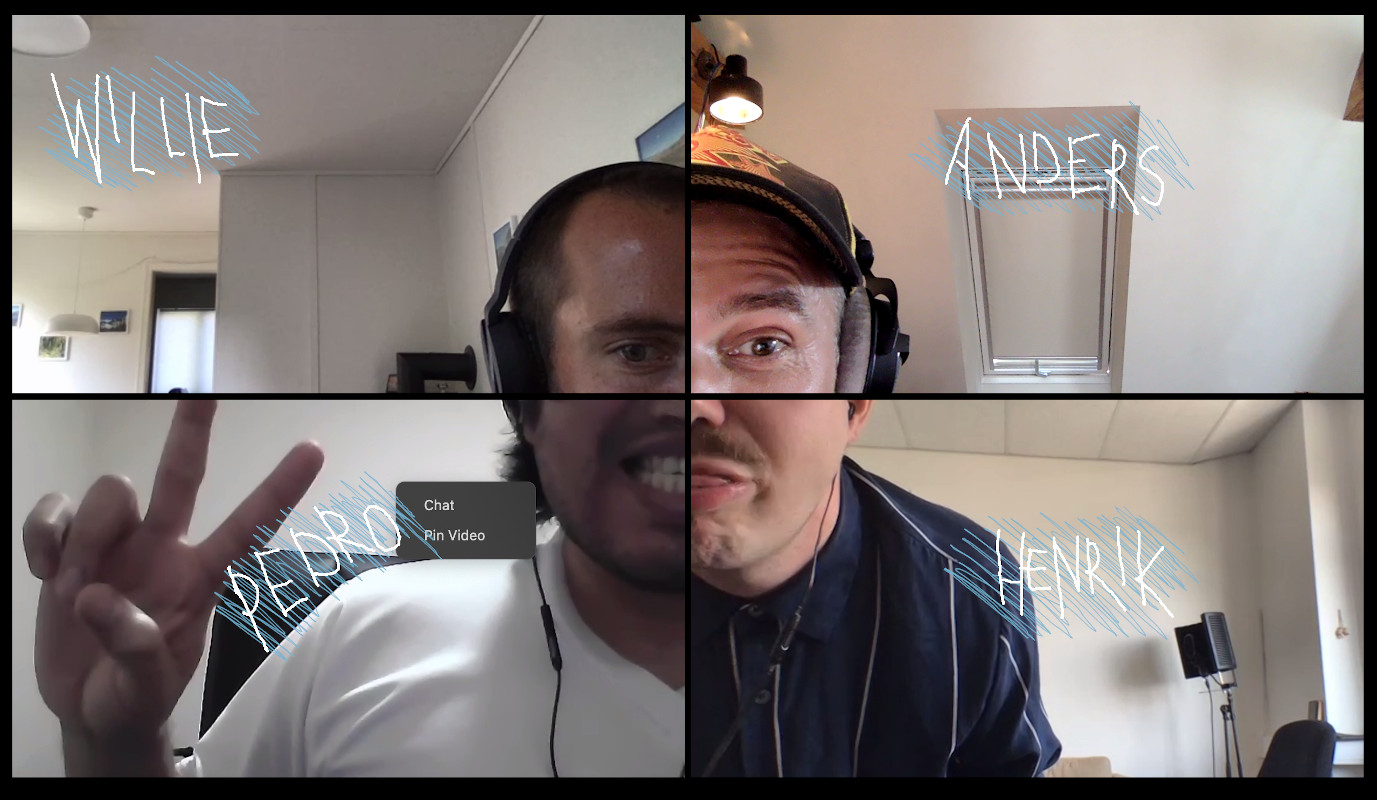

End of Semester Concert - Spring 2021: Audio Setup

As part of the audio team for the end-of-semester telematic concert, Pedro and Anders spent several hours in the portal, exploring different ways to organize audio routing. They also found time to experiment with effect loops. Check out the nice musical collaboration between two different musical cultures.

-

Cross the Streams

When performing in two locations we need to cross the streams

-

Dispatch from the Portal: Dueling EQs

How do I sound? Good? What does good mean? How do I sound? Sigh...

-

Spring Concert 2021: Team B's Reflections

We'll do it live. Team B gets its groove Bach.

-

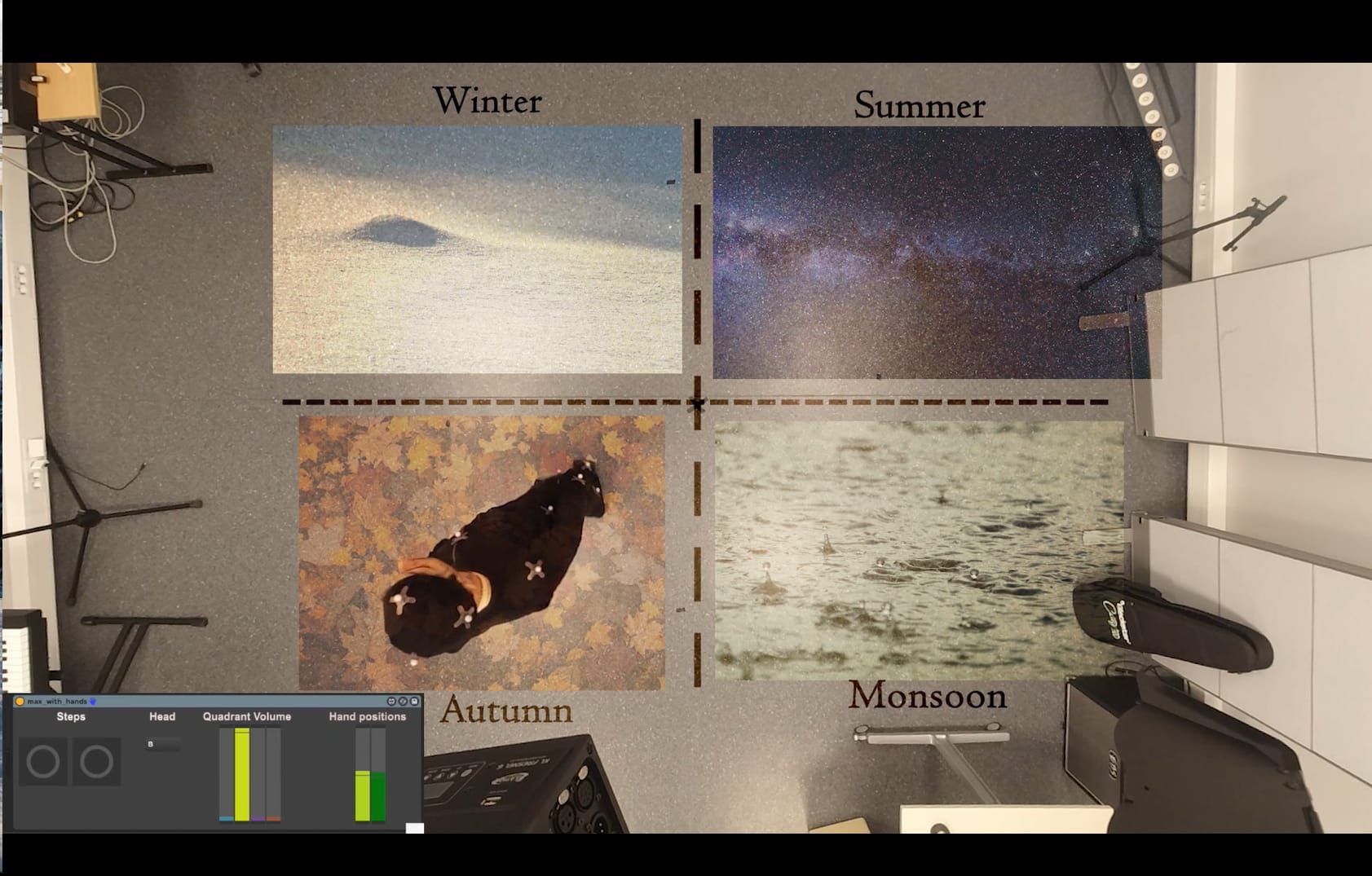

Walking in Seasons

Sonification of motion

-

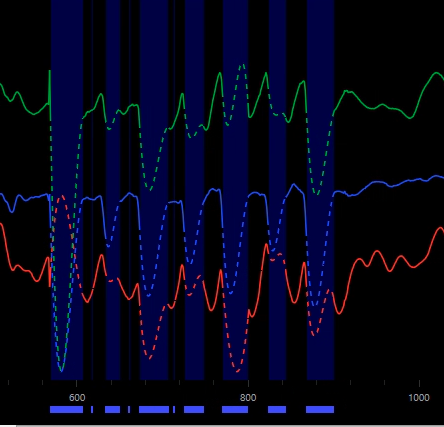

Exploring the influence of expressive body movement on audio parameters of piano performances

How does expressive body movement influence music?

-

Motion (and emotion) in recording

The first time I went to a recording studio, in the early nineties, my eagerness for (music) world domination, as well as my fascination with the possibility of putting my beautiful playing on magnetic tape, totally overshadowed the fact that the result sounded crappy, at least for a while.

-

Shim-Sham Motion Capture

We've learned about motion capture in a research environment. But what about motion capture in the entertainment field? In this project I attempted to make an animation in Blender based on motion captured in the lab using the OptiTrack system. Besides this, I also analysed three takes of a Shim Sham dance. For more details and some sneak peeks, read this blog post.

-

Kodaly EarTrainer-App

An app for training your ears based on an old Hungarian methodology

-

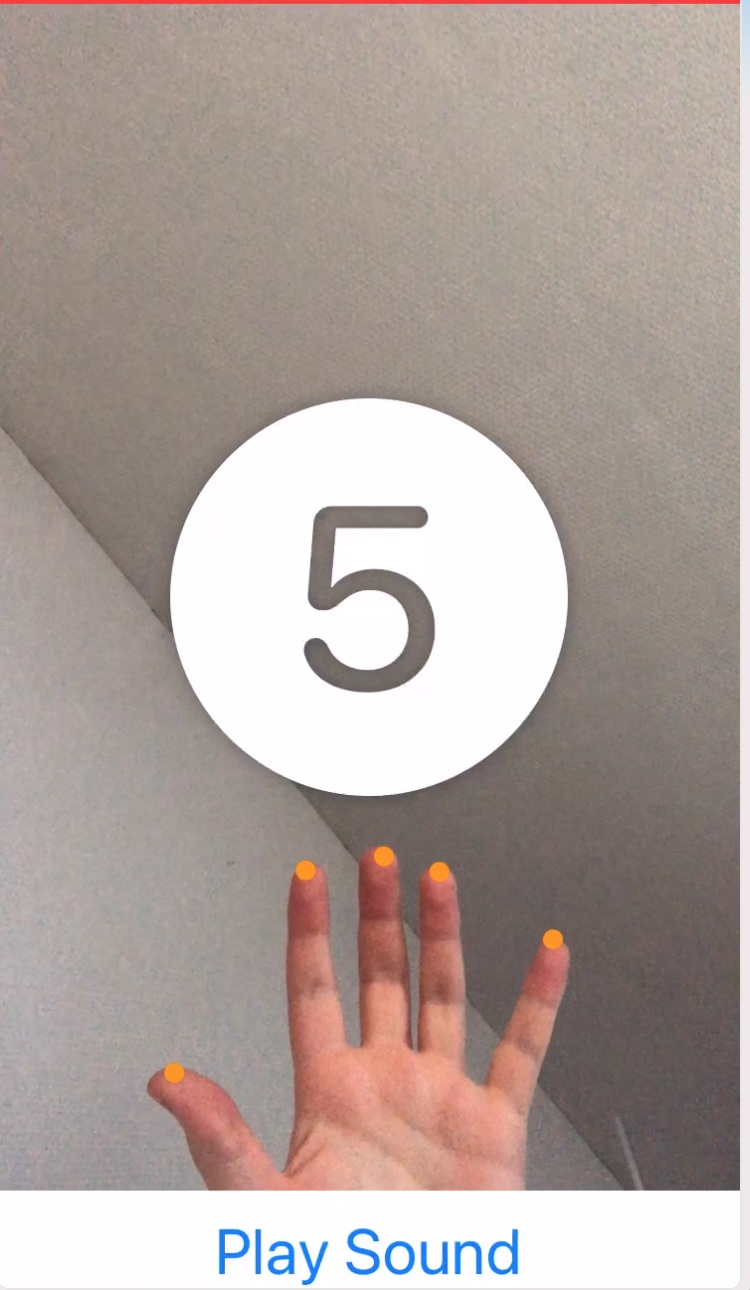

'Air' Instruments Based on Real-Time Motion Tracking

Let's make music with movements in the air.

-

An air guitar experiment with OpenCV

Get to know OpenCV by building an air guitar player. Shaggy perm not required.

-

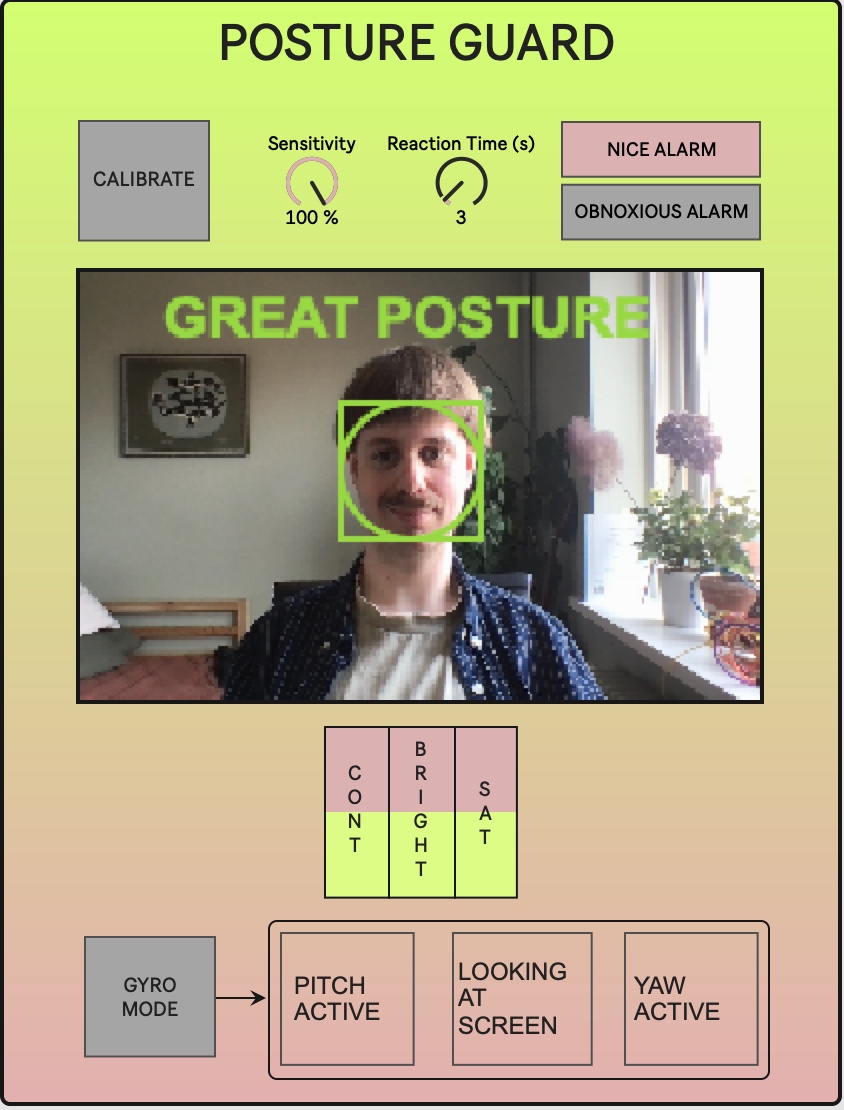

Posture Guard

Back pains, neck pains, shoulder pains: what do they all have in common? They are caused by bad posture while working on a laptop. So I made a program that makes the laptop help you maintain good posture while working.

-

Growing Monoliths Discovered On Mars

Mars is a hot topic these days, and weather seems to always be a hot topic too. So how about making a project with both? We ended up gamifying the weather on Mars by discovering the musical potential it may have.

-

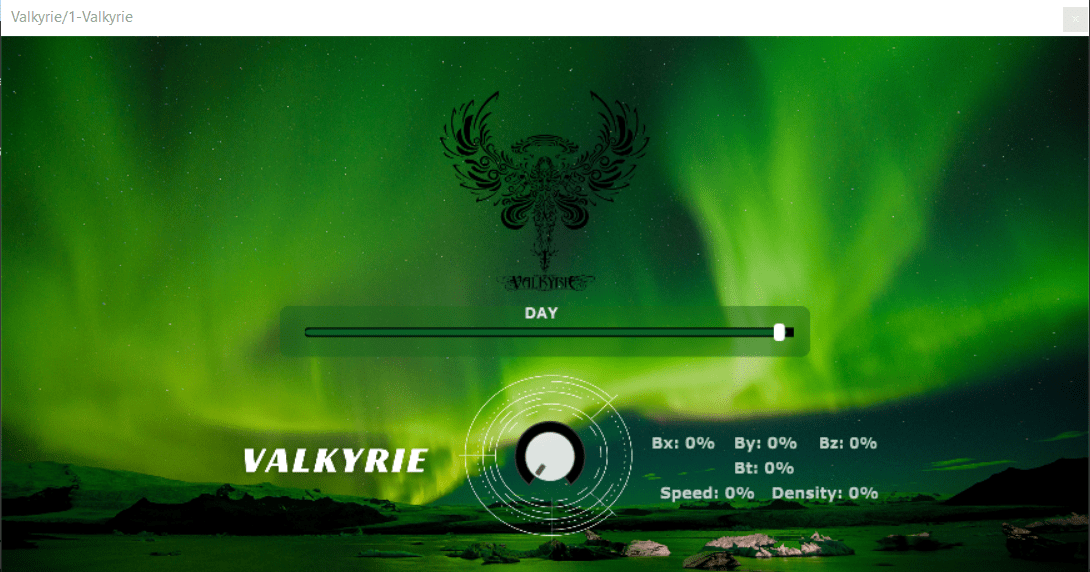

Valkyrie: Aurora Sonified

The sound of Aurora sonified in a synth

-

Pixel by pixel

The pixel sequencer is an interactive web app for sonification of images. Get online, upload your favorite picture, sit back and listen to it.

-

Tele-A-Jammin: the recipe

Our portal experience mapped onto a delicious jam recipe

-

First Weeks Of Portaling With Team B

Starting to figure out the Portal and what we did/found out in the first weeks using it. How to play and some feedback fixing.

-

Liebesgruß or we can put that 'Liebe' aside

It's simply a gruß from Team C with our first telematic setup.

-

Twinkle Twinkle (sad) Little Mars

Finally the B-boys found some hours one evening to spend in the portal, Willie up north, and Pedro, Henrik and Anders down south. This was the first day on their Mission to Mars. Enter our B-oyager, and join us.

-

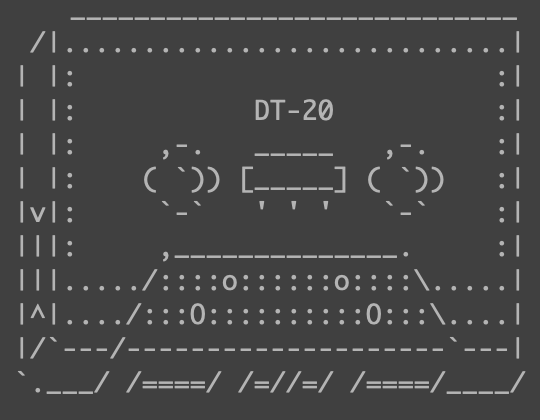

Tapeloop DT-20

For MCT4048 we wanted to make a looper with layers and FX. But what did we get? The DT-20, a 4-track-inspired digital tape looper with FX channels and a 'WASD' mixer. Enjoy! - Stephen, Pedro, Anders & Henrik

-

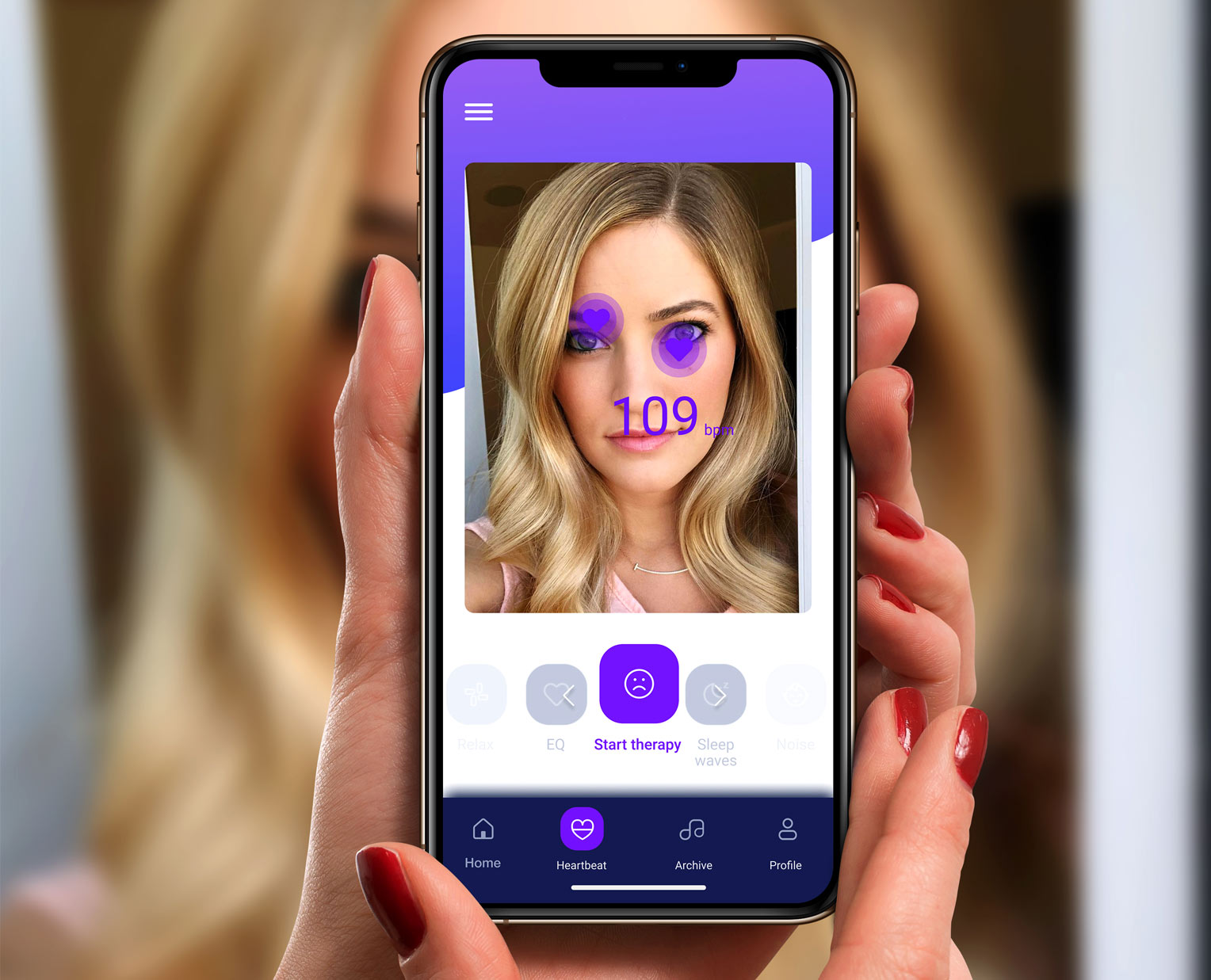

Get unstressed with Stress-less

Acoustically-triggered heart rate entrainment (AHRE)

-

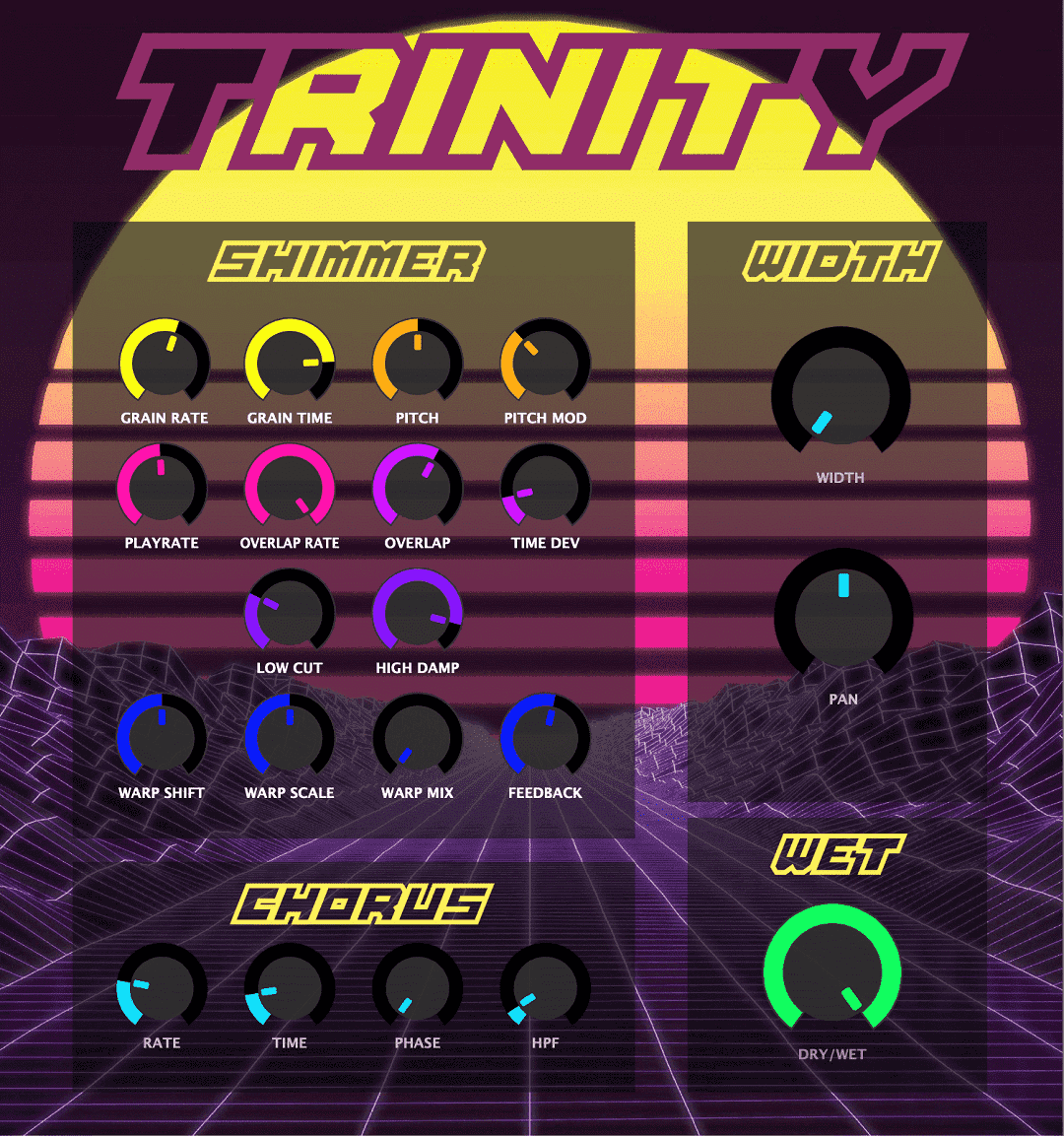

Trinity: Triple Threat

Trinity: What happens when you combine grain shimmer + chorus + stereo width? Click to find out.

-

Funky Balls

Want a more organic and dynamic way of mixing and applying effects? Experiment with funky balls!

-

Clubhouse

Just a new phone or could it actually contribute to our online lives? This is not part of any course or anything, but I wanted to put down my thoughts on this new app. After all, it's all about audio and streaming which is very MCT, so I hope it's OK.

-

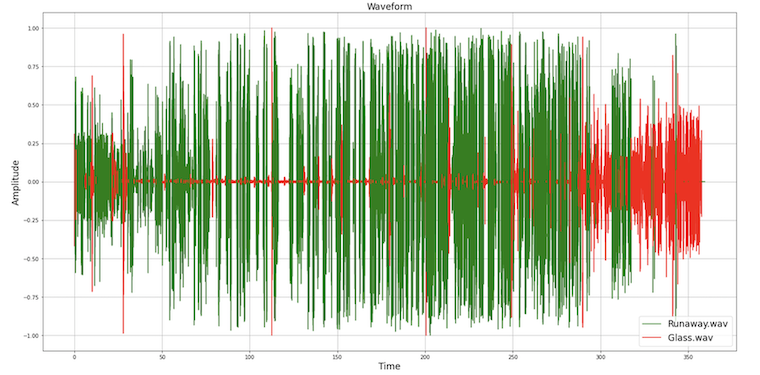

Strange Fragmented Music

A strange song made by sequencing fragments of Stranger Things and Astronomia

-

A new season of chopped music

The arbitrary mixing of two seasons (two songs representing seasons)

-

Chopping Chopin and Kapustin preludes

Chopping a prelude by Chopin and one by Kapustin and then merging the slices based on their loudness (RMS) and tonality (Spectral Flatness).

-

Chaotic Battle of Music Slices

Slicing up the music and reorganizing the slices with four kinds of librosa features.

-

Merry slicemas

Let it slice, let it slice, let it sequence.

-

Graphic score no. 7

So … it’s 00:01 in Oslo, December 1st, and the final SciComp assignment is here … And … it’s a tough one … 2345 words … AI must be involved here …

-

Chop it Up: Merging Rearranged Audio

Taking beautiful music and making it less so.

-

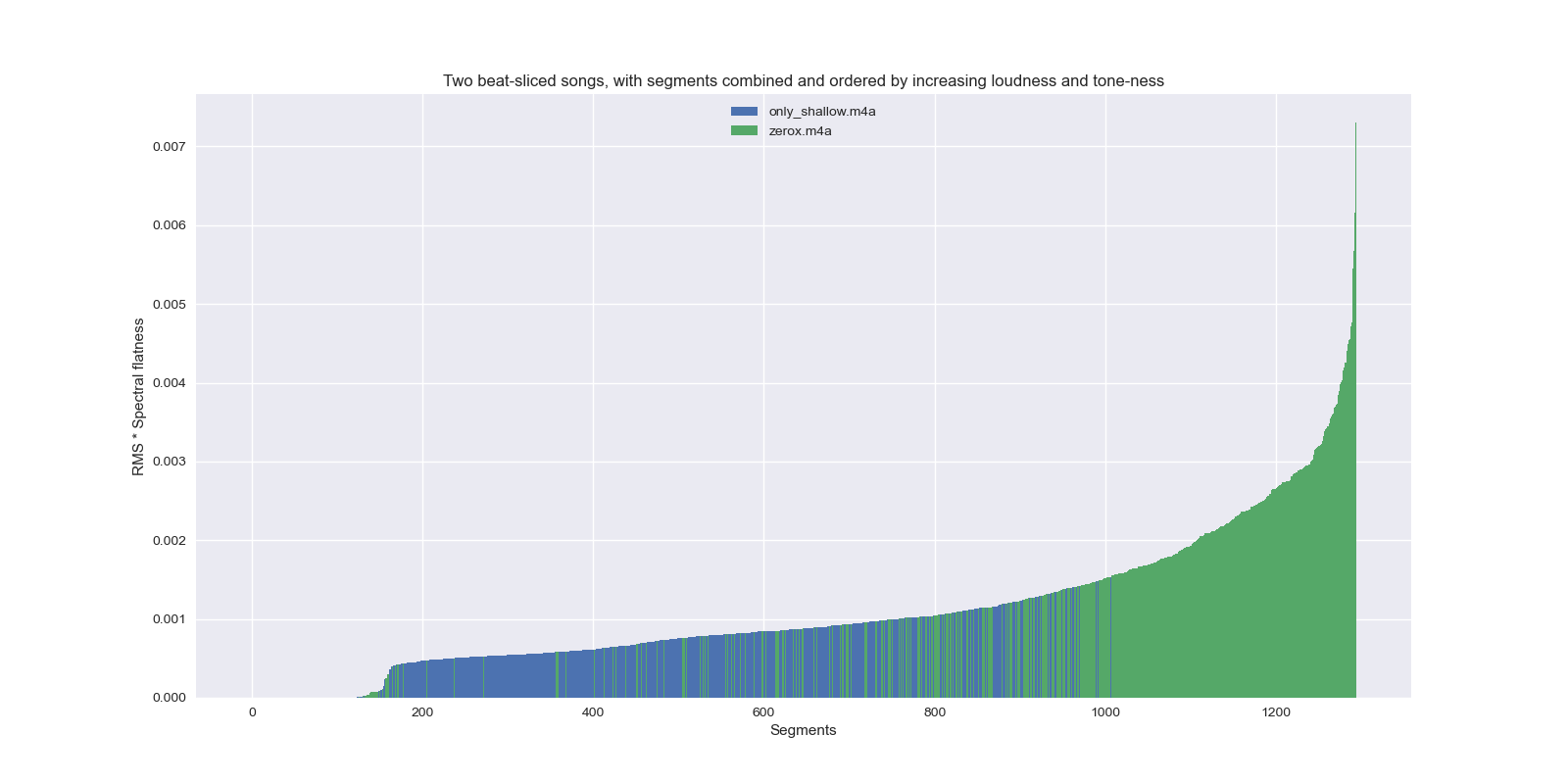

My Bloody Zerox

So the plan was to take two audio files, chop them up in some random way, mix up the pieces and stitch them back together in a totally different order. Doesn’t have to be musical, they said. Well, just to make sure, I decided to choose two pieces of music that weren’t particularly musical to begin with: Only Shallow by shoegaze band My Bloody Valentine, and Zerox by post-punk combo Adam and the Ants.

-

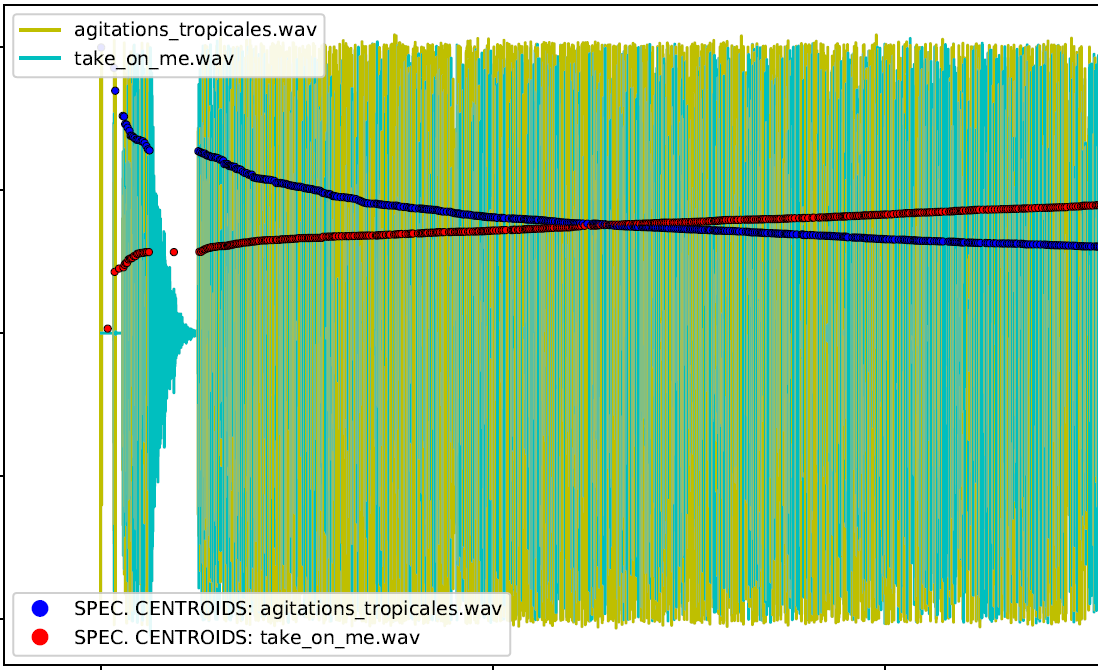

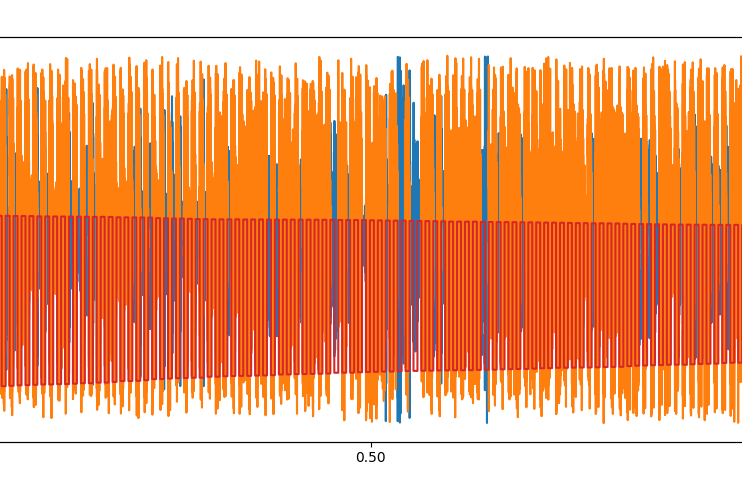

Segmentation and Sequencing from and to Multichannel Audio Files

The strategy explained here considers the slicing of stereo audio files and the production of a new stereo output file based on a multichannel solution. The spectral centroid is used to reorder and intercalate segments in an ascending or descending order according to some rules.

-

Dark Tetris

A Scientific Computing assignment by a non-programmer. Please don't expect anything special from the title Dark Tetris, even though I really wanted to create that...

-

Slicing and Dicing Audio Samples

Slicing up two songs and re-joining them with RMS and Spectral Centroid values.

-

How To Save The World In Three Chords

Don't run! We come in peace!

-

Music for dreams

We tried to make a spatial audio composition based around a dream

-

Peace in Chaos: A Spatial Audio Composition

Our spatial audio composition highlighted the ways audio can represent both peaceful and chaotic environments and transitions in between.

-

Zoom here & Zoom there: Ambisonics

A more natural way of communicating online, wherein it feels like all the members are in the same room talking.

-

Ambisonic as a ‘mental’ management tool in Zoom?

Immersive audio as a tool to keep our state of mind peaceful.

-

Zoom + Ambisonics

Talking together in an online room is an interesting topic, as the mono summed communication in regular Zoom can be tiring when meeting for several hours a day. Could ambisonics in digital communication be the solution we're all waiting for?

-

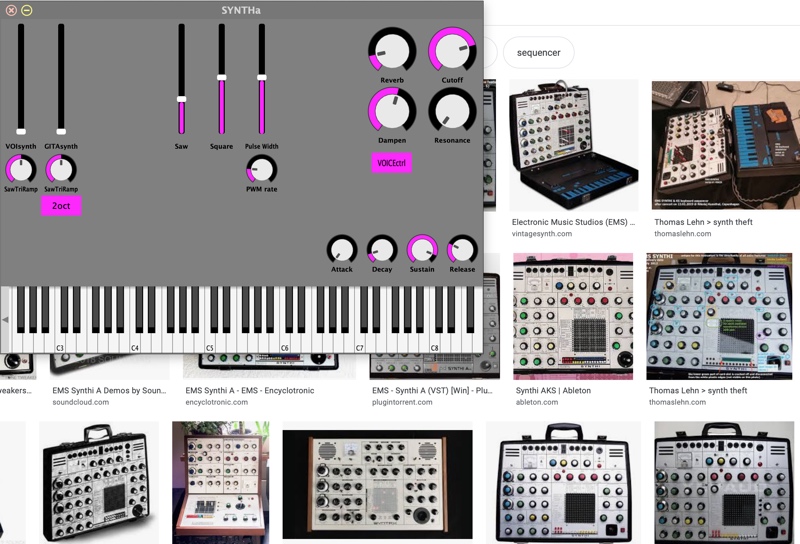

SYNTHa

While this is not a clone of the classic EMS Synthi A, it might have a trick or two up its sliders: a softsynth with hard features.

-

Everything's Out of Tune (And Nothing Is)

Who says that Csound and early music can't mix? Building a VSTi for swappable historical temperaments.

-

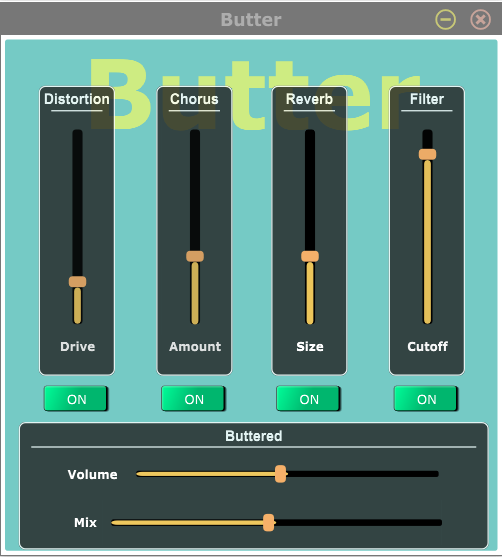

Butter: a multi-effect plugin

An easy-to-use and fun plugin to make your sound smooth as butter.

-

A fake Steinway made by Csound

How to use Csound to create a digital Steinway?

-

Spaty Synthy

Attempt at modelling a Delay Repeating Spatial Synthesizer using Csound

-

Phade - a phaser delay FX

A swirly, shimmery shoegazey multi-fx.

-

Beethoven under Moonlight

Creating a Csound project that uses frequency modulation synthesis (a carrier and a modulating oscillator) to play the first few bars of Beethoven's Moonlight Sonata from a score.

-

Ondes Martenot's brother - Orange Cabbage

The evolution of Ondes Martenot.

-

'El Camino de los Lamentos': A performance using custom Csound VST Plugins

This performance uses two VST plugins built in Cabbage with Csound: a synthesizer based on elemental waveforms, frequency modulation and stereo delay, and an audio effect for pitch scaling and reverb.

-

Kovid Keyboard

A web-based Csound synth that lets you play together online!

-

Shaaape

Distorting signals with ghost signals.

-

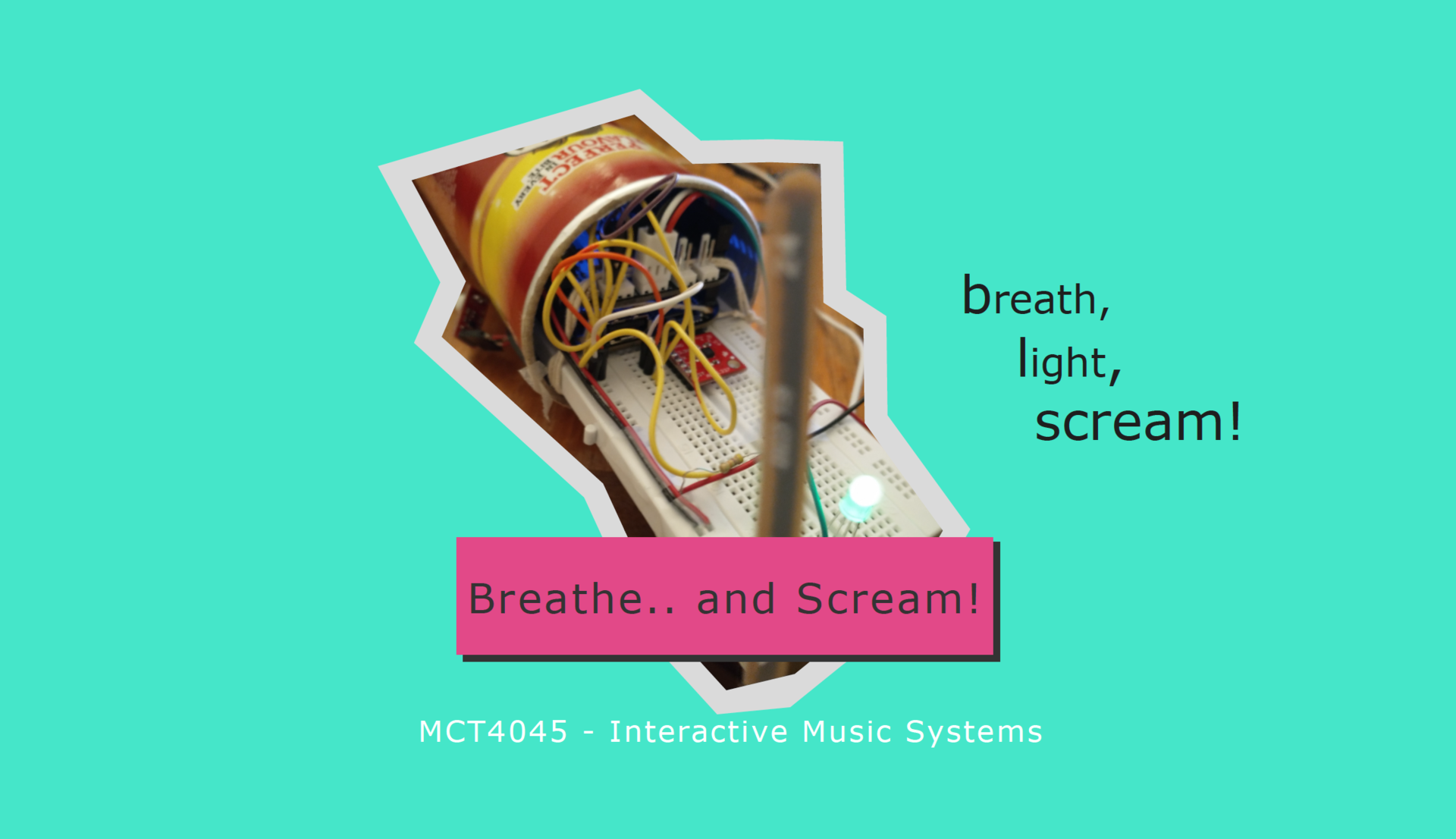

Breathe the light, scream arpeggios!

Multisensorial music interface aiming to provide a synesthetic experience. Touch, light, breathe, scream - make sound!

-

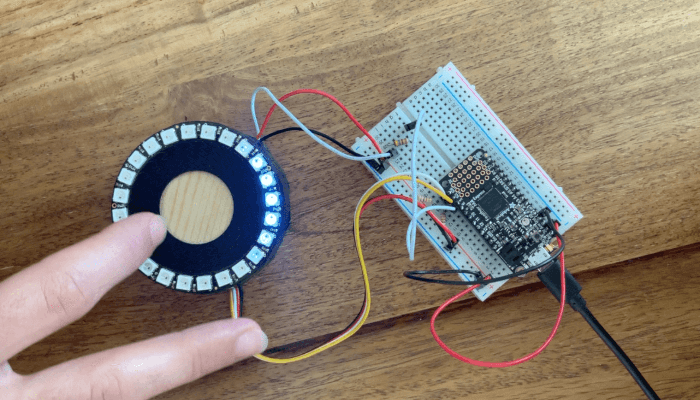

The Ring Synth

Exploring speed as a sound-shaping parameter

-

HyperGuitar

An exploration of limitations and how to create meaningful action-sound couplings.

-

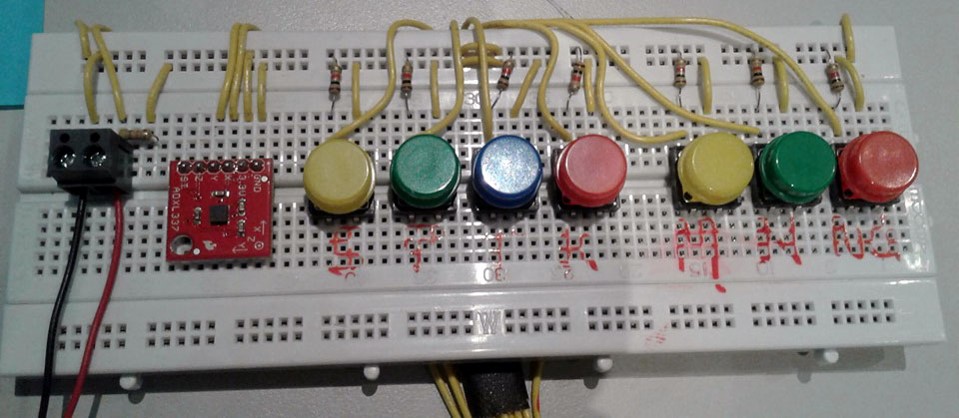

The singing shelf bracket

Pure Data (PD) has a lot of possibilities, but when I got the opportunity to bring all those digital features into the real world, with real wires, real buttons and real sensors, I must admit I got a little over-excited!

-

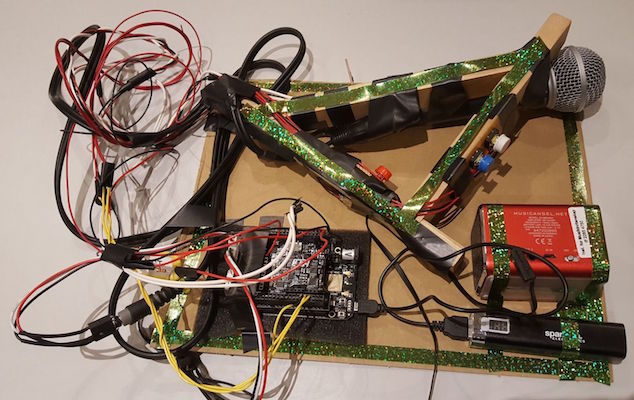

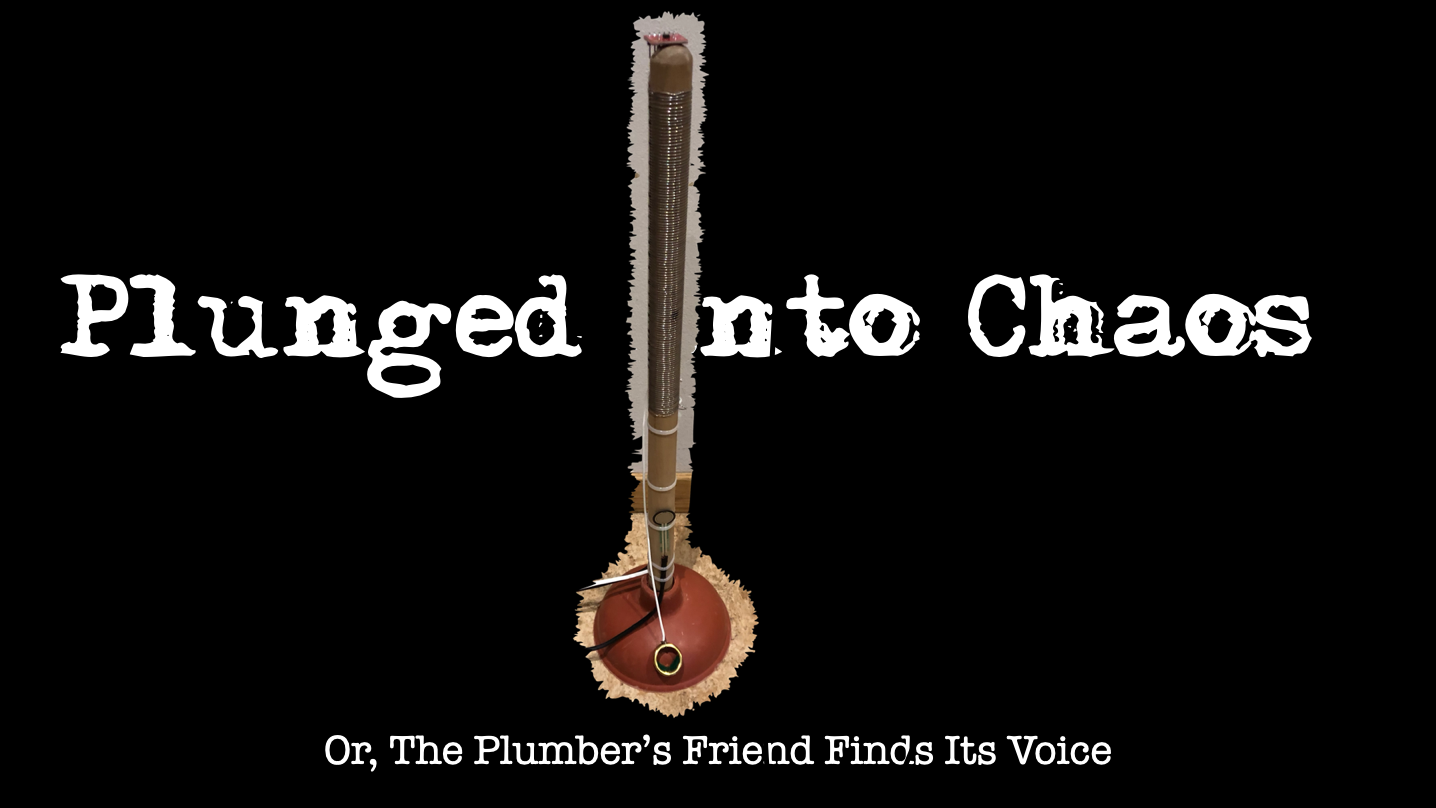

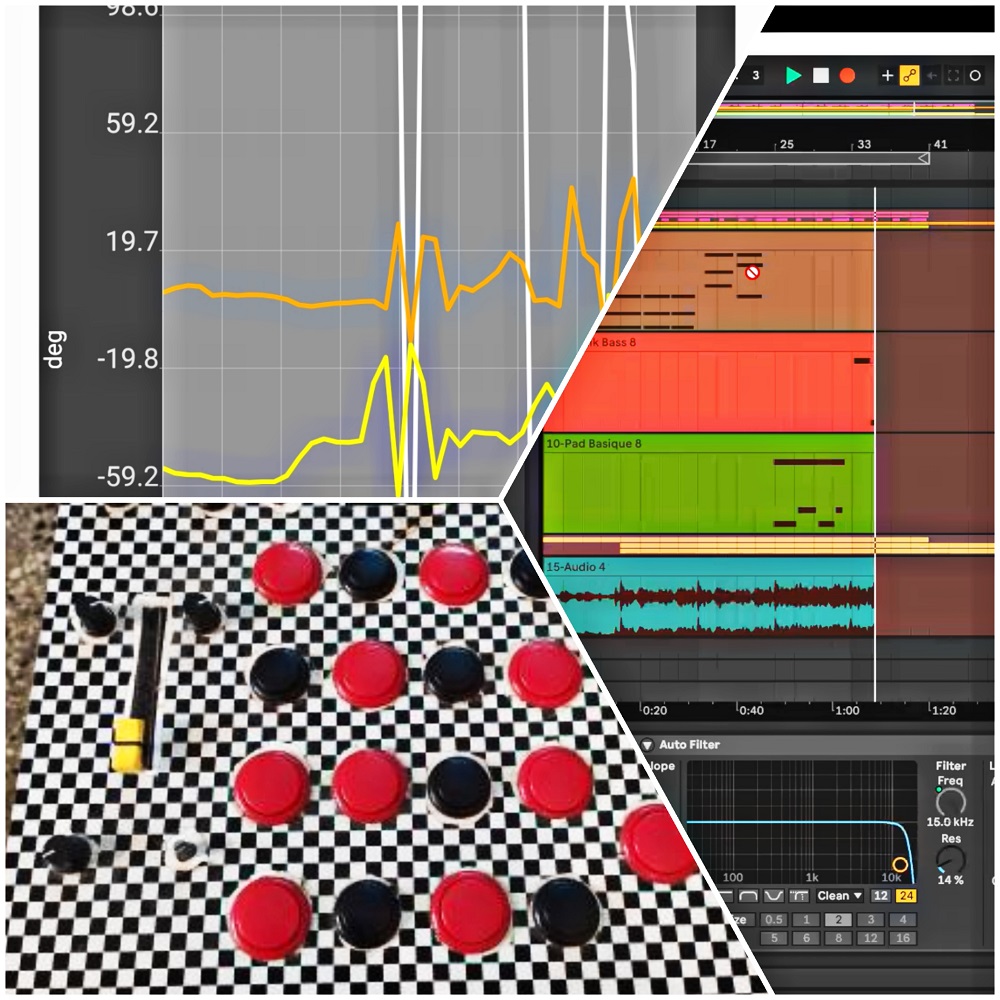

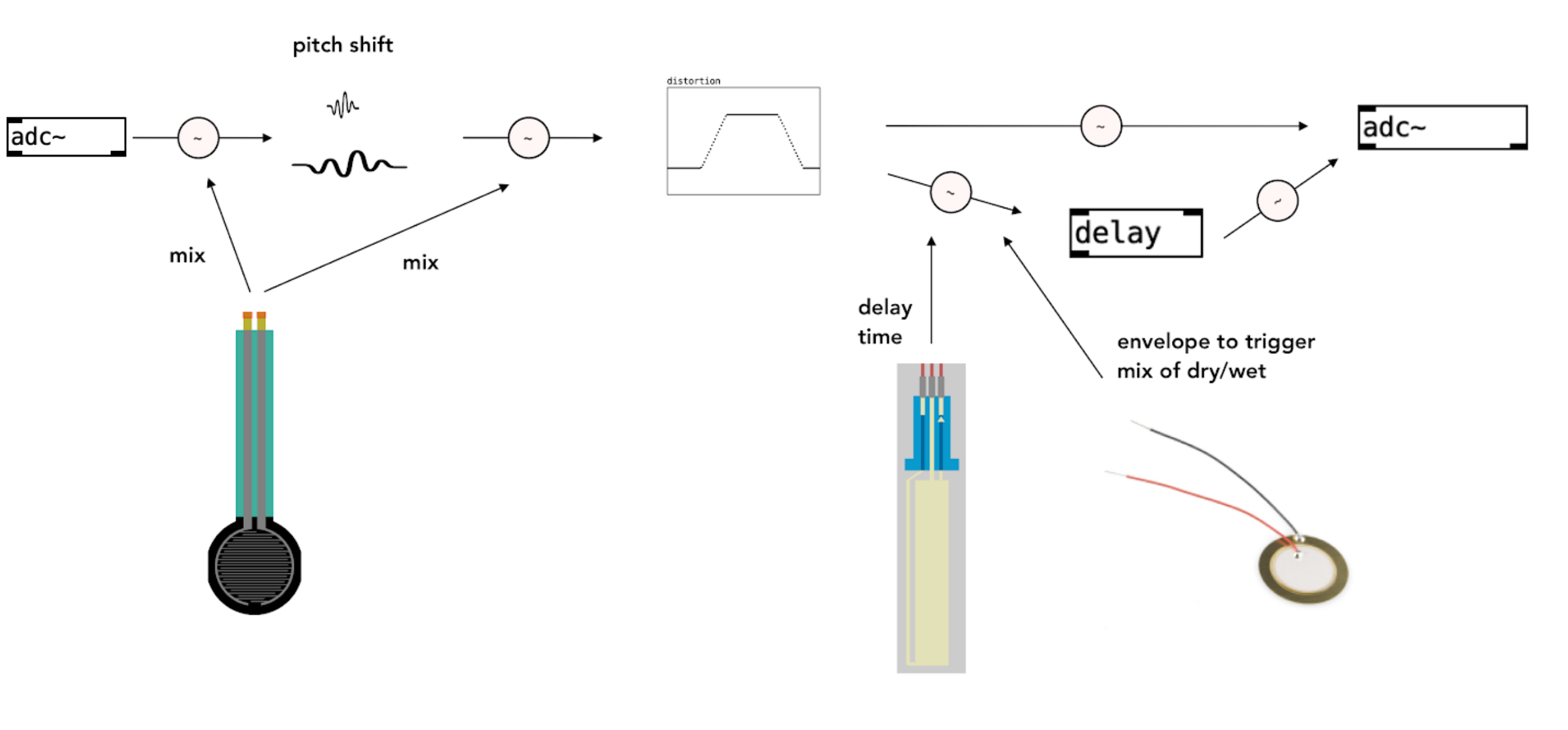

Plunged Into Chaos

Wherein the lowly Oompa-Doompa assumes its ultimate form.

-

MIDI and Effects: the Musicpathy

The magic of controlling instruments from 1000km apart

-

Voice augmentation with sensors

Trying to achieve a choir-like effect by augmenting microphone input with sensory features

-

SamTar

An interactive music system exploring sample-based music and improvisation through an augmented electric guitar

-

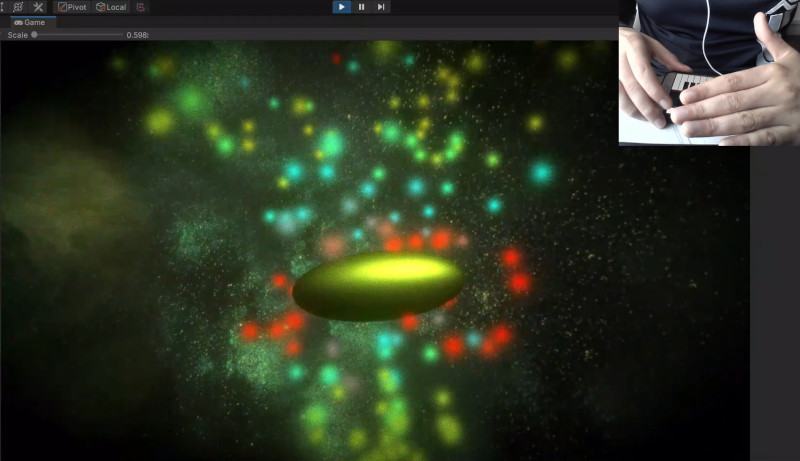

The Psychedelic Journey of an Unexpected Spaceship

An electronic music performance in an audio-visual digital environment. Let's go through the galaxy in a crazy spaceship and have an experience full of color and funny turbulence.

-

A Live Mixer made from mobile devices

What does it look like to control audio effects in real time using gestures?

-

cOSmoChaos

Cosmos and chaos are opposites (known/unknown, inhabited/uninhabited), and throughout history man has sought to create cosmos out of chaos. But what does this have to do with GyrOSC controlling my hardware … well, everything.

-

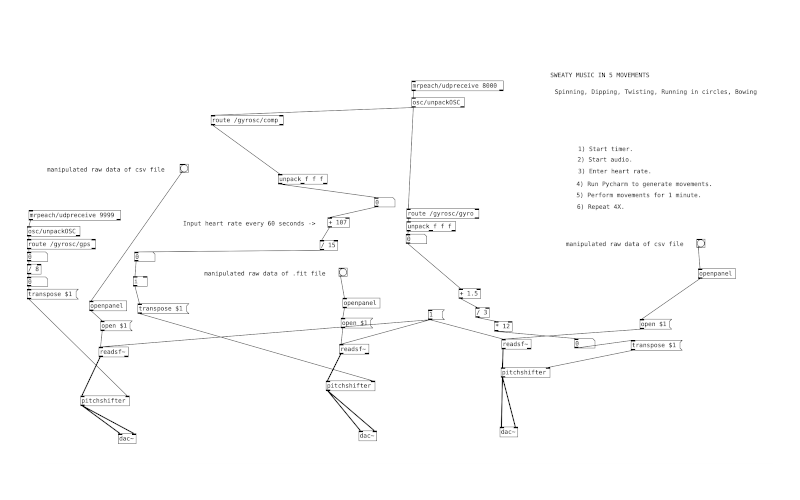

Chance Operations, Rudimentary Pure Data (PD), and a Bunch of Spinning in Circles

Sometimes you want to compose and get your workout. Experience a chance composition that may leave the performer sweating.

-

Improvised electronica with TouchOSC

In this project, I wanted to explore the options available when performing electronic music live with no pre-recorded / pre-sequenced material.

-

Real-time audio processing with oscHook and Reaper

Fun and not too complicated interactive audio processing: using oscHook to transmit sensor data from an Android phone to OSC Router and then on to Reaper to control the values of certain effect parameters.

-

The Dolphin Drum

My granular synthesis percussive instrument from the Interactive Music Systems course.

-

Musings with Bela

A tale of accelerometers, knobs, an EEG and the attempt to tame sound with my mind. Follow along!

-

The voice of a loved one

Can AI really know what our facial expressions mean?

-

Team C's reflections on Scientific Computing

Despite our diverse professional backgrounds, three of us are pretty much beginner Pythonistas. All of our code seems pretty readable. Joni’s code is very clean, possibly because she’s a designer, and well commented. Wenbo favoured a single cell for his code. After reviewing everyone’s submissions, we agreed that our team activity has to increase in the future. Meister Stephen will have to be more patient with us!

-

Reflections on Scientific Computing (The B Team Bares All)

Team B reflects on a week of coding ups and downs.

-

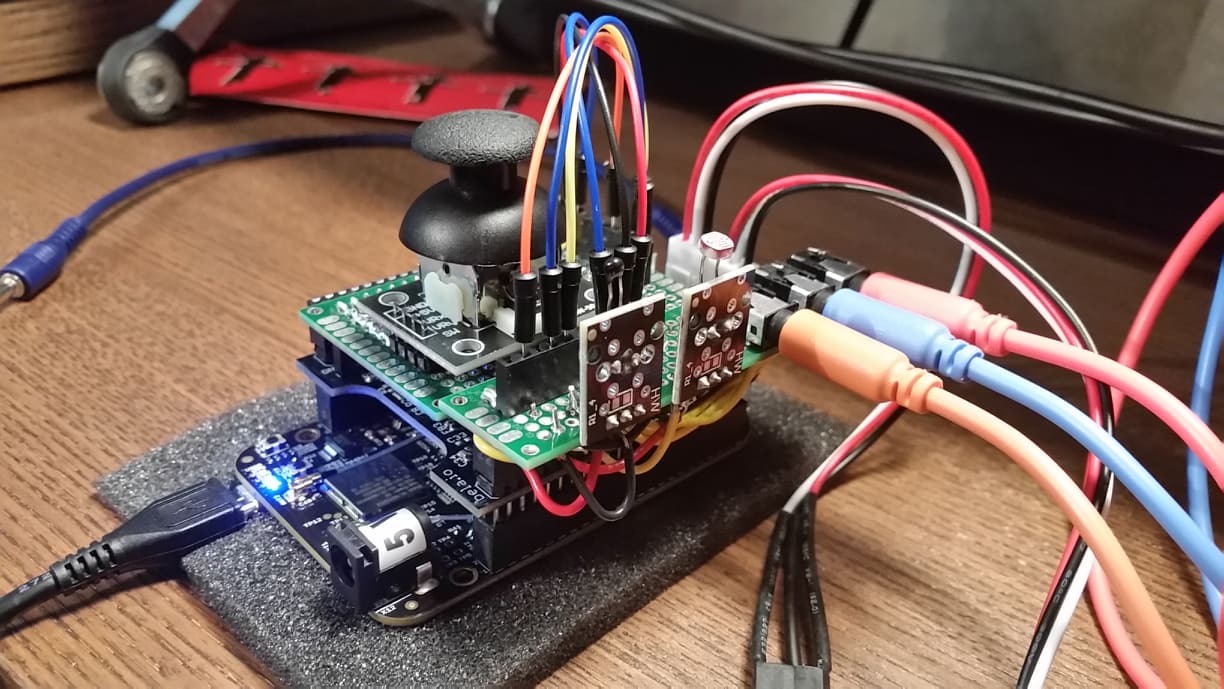

Making virtual guitar playing feel more natural

Can using sensors, buttons and joysticks to play a virtual guitar resemble the experience of playing a real guitar and result in a more natural performance than using a keyboard for input?

-

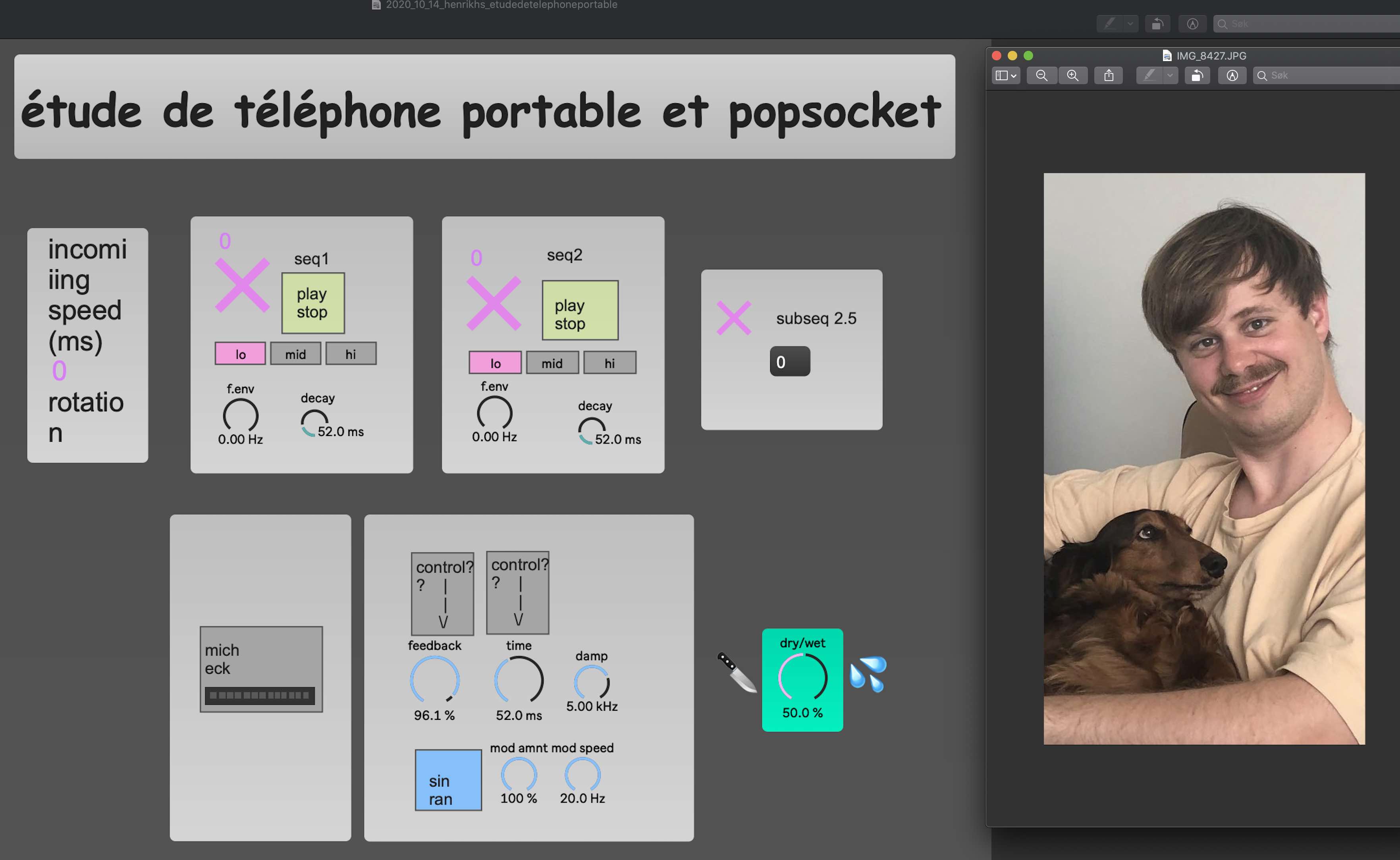

Étude de téléphone portable et popsocket

Click to see a cute dog making strange music. Unbelievable. I think that sums it up.

-

Scientific Computing Midterm

Team A's reflection on the midterm Scientific Computing assignment. In short, the task was to create a general Python program that reads audio files from a specified folder based on a CSV file, and outputs another CSV file with added values for each audio file: the average root mean square (RMS), zero crossing rate and spectral centroid. The program also displays several scatterplots and saves them to files.

-

Musicking with JackTrip, JamKazam, and SoundJack - Presentations

The class of 2021 presented on JackTrip, SoundJack and JamKazam and their networked musicking potential. Presentations are included in this blog post as pdfs.

-

The Joys of Jitsi

Jitsi is a venerable open source chat and video-conferencing app that's been around since 2003. It's multi-platform and runs pretty much everywhere. We were given the task of testing the desktop apps on macOS and Windows, and the mobile apps on Android and iOS.

-

Zoom - High Fidelity and Stereo

For the members of Team B, Zoom meetings are an everyday occurrence. Like most people during the covid era, we spend much of our professional time communicating from the comfort of our living rooms. These days, using Zoom feels akin to wearing clothes; we’re almost always doing it (sometimes even at the same time).

-

Pedál to the Metal

Another week, another audio-streaming platform to test. Here are Team A's impressions of Pedál. According to its website, Pedál is a cross-platform service to stream audio, speak, share your screen and record high-quality audio together, and the simplest way to make music together online.

-

A Brief Workshop on Motion Tracking

Since our spring semester of motion tracking was a purely digital experience, a few of us got together to quickly test out the OptiTrack system in the Portal.

-

ML something creative

Intuition tells me that a larger network should be better. More is more, as Yngwie says, but that is definitely not the case.

-

Multilayer Perceptron Classification

Multi-layer Perceptron classification. Big words for a big task. During this two-week course in machine learning all my brain cells were allocated in solving this task. Initially I wanted something simple to do for my project since wrapping my head around ML was a daunting enough task. I soon realized there really is no such thing as simple in machine learning.

-

Scale over Chord using ANN

Trying to learn the scale-over-chord choices of great jazz improvisers

-

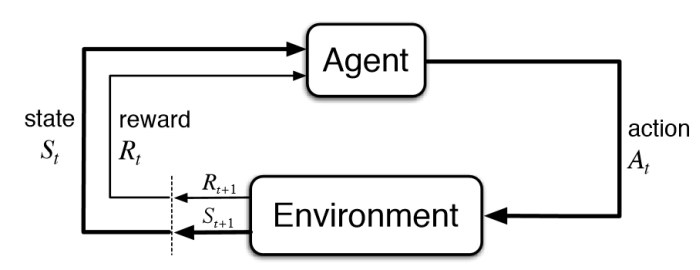

Learning to sequence drums

Can reinforcement learning be a useful tool to teach a neural network to sequence drums?

-

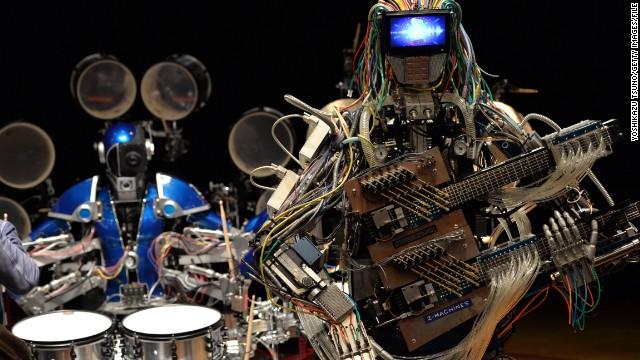

Beneficial Unintelligence

In the future, when the robots take over the world, we all will be listening to 24/7 live streamed death metal until infinity

-

Postcard from The Valley of Despair

Well, that was fun.

-

Support Vector Machine Attempt

Not fun

-

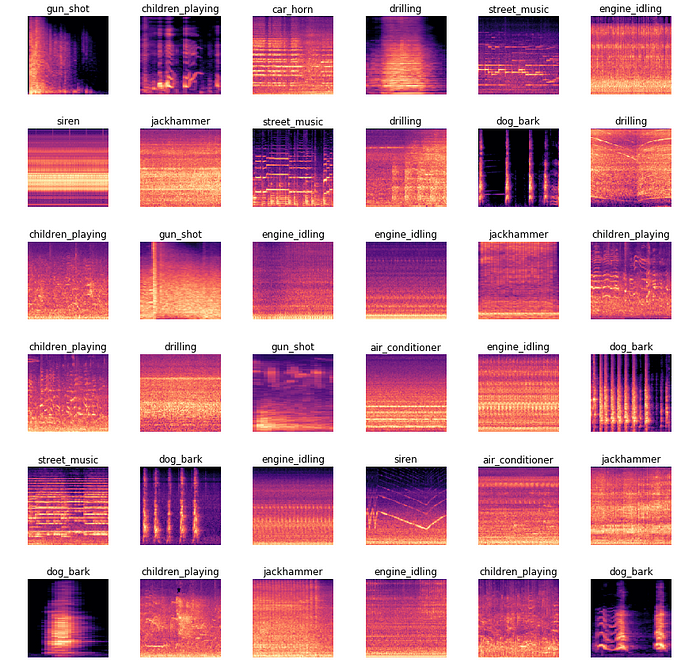

Classifying Urban Sounds in a Multi-label Database

How well does a convolutional neural network perform at detecting multiple classes within a single sample? This experiment explores augmenting the UrbanSound8K database to test a well-performing CNN architecture in a multi-label, multi-class scenario.

-

![[ Music Mood Classifrustration ] [ Music Mood Classifrustration ]](/assets/image/2020_09_20_rayaml_ML-feelings.jpg)

[ Music Mood Classifrustration ]

This is an attempt to create a Music Mood Classifier with feature extractions from Librosa.

-

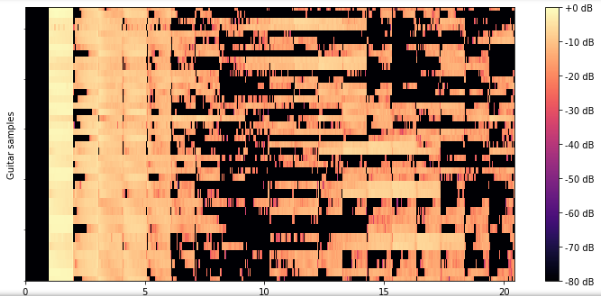

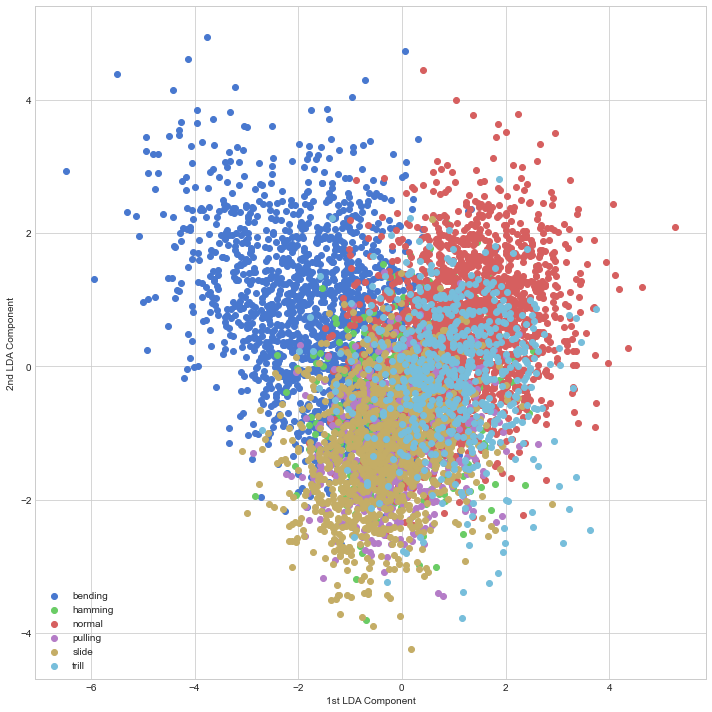

Classification of guitar playing techniques

An attempt at making a model which can classify 6 different playing techniques on the guitar

-

Exploring Music Preference Recognition Using Spotify's Web API

A proposed ML model that predicts the degree to which I will enjoy specific Spotify tracks based on previous preference ratings.

-

Jamulus test session.

Can a physical metronome keep us in time? Experiences from the Jamulus test session in the Physical-Virtual Communication and Music course.

-

Jamulus: Can y-- hear -e now?

During the second session of the Physical-Virtual Communication and Music course from 2020, we had our first experience with telematic music performance. It was not the greatest jam we ever had, but we learned from it.

-

Jamulus, or jamu-less?

Playing music together is not only about hearing, but also about seeing. Today the MCT students of 2020 experienced this in a “low latency jam session” in Jamulus.

-

Audio and Networking in the Portal - Presentations

The class of 2021 recently presented broadly on networking and audio within the context of the Portal. Presentations are included in this blog post as pdfs.

-

a t-SNE adventure

A system for interactive exploration of sound clusters with phone sensors

-

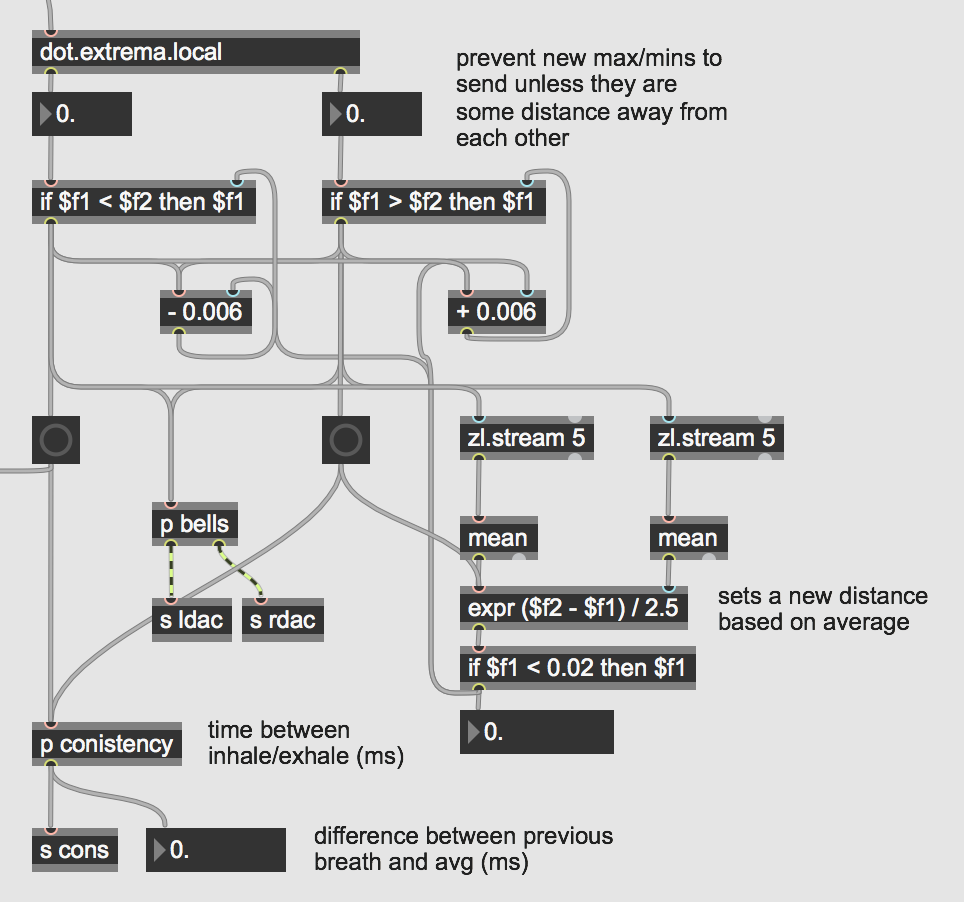

Breathing through Max

For the COVID-19 version of motion capture, I developed a system that tracks your breathing rate and sonifies it through Max. It emphasizes the tenets of biofeedback and aims to serve as a responsive system for stress relief.

-

I scream, you scream, we all scream for livestream

Some cameras won't allow you to film for more than 30 minutes, don't use those.

-

Sound Painting

An application that tracks, visualizes and sonifies the motion of colors.

-

NINJAM, TPF and Audiomovers

During these last few weeks of “quarantine” during the COVID-19 outbreak, we have tested several TCP/UDP audio transmission software packages from home to check their latency and user-friendliness. Our group, consisting of Simon, Iggy and Jarle, was tasked with looking into NINJAM and TPF.

-

On communication in distributed environments

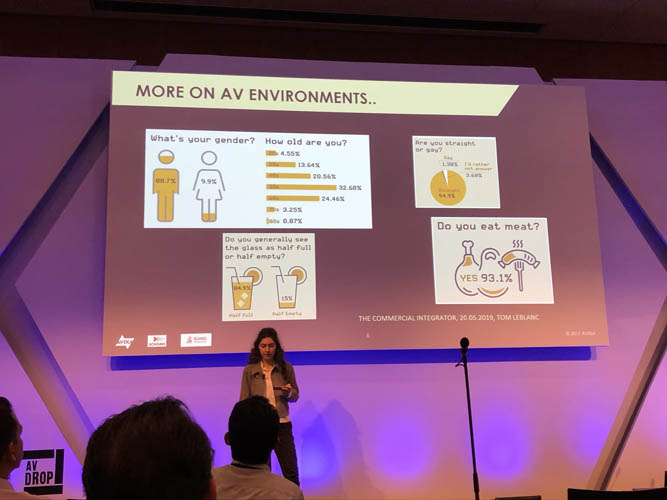

Photo by Dr. Andrea Glang-Tossing. At ISE Europe, a trade fair in Amsterdam (11–14 February 2020), I presented the MCT course together with SALTO at the AVIXA Higher Education Conference. Researchers and students were invited to highlight emerging innovative methods that enhance learning and teaching experiences through AV technologies. ISE Europe is the world's biggest pro AV event for integrated systems, with 80,000 visitors and 1,400 vendors spread over dozens of halls. For the conference I was specifically asked to contrast the largely technical programme with social aspects from a student perspective. A retrospective from current conditions.

-

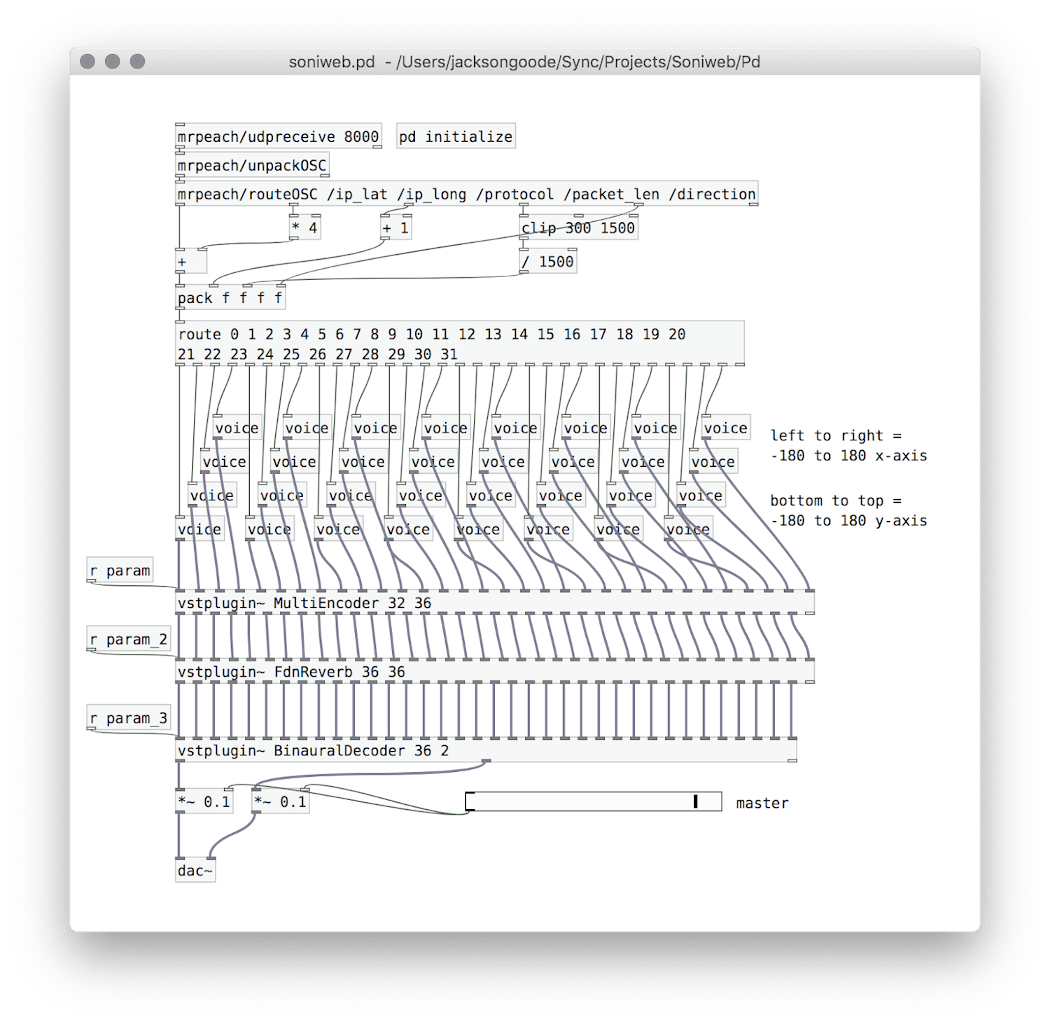

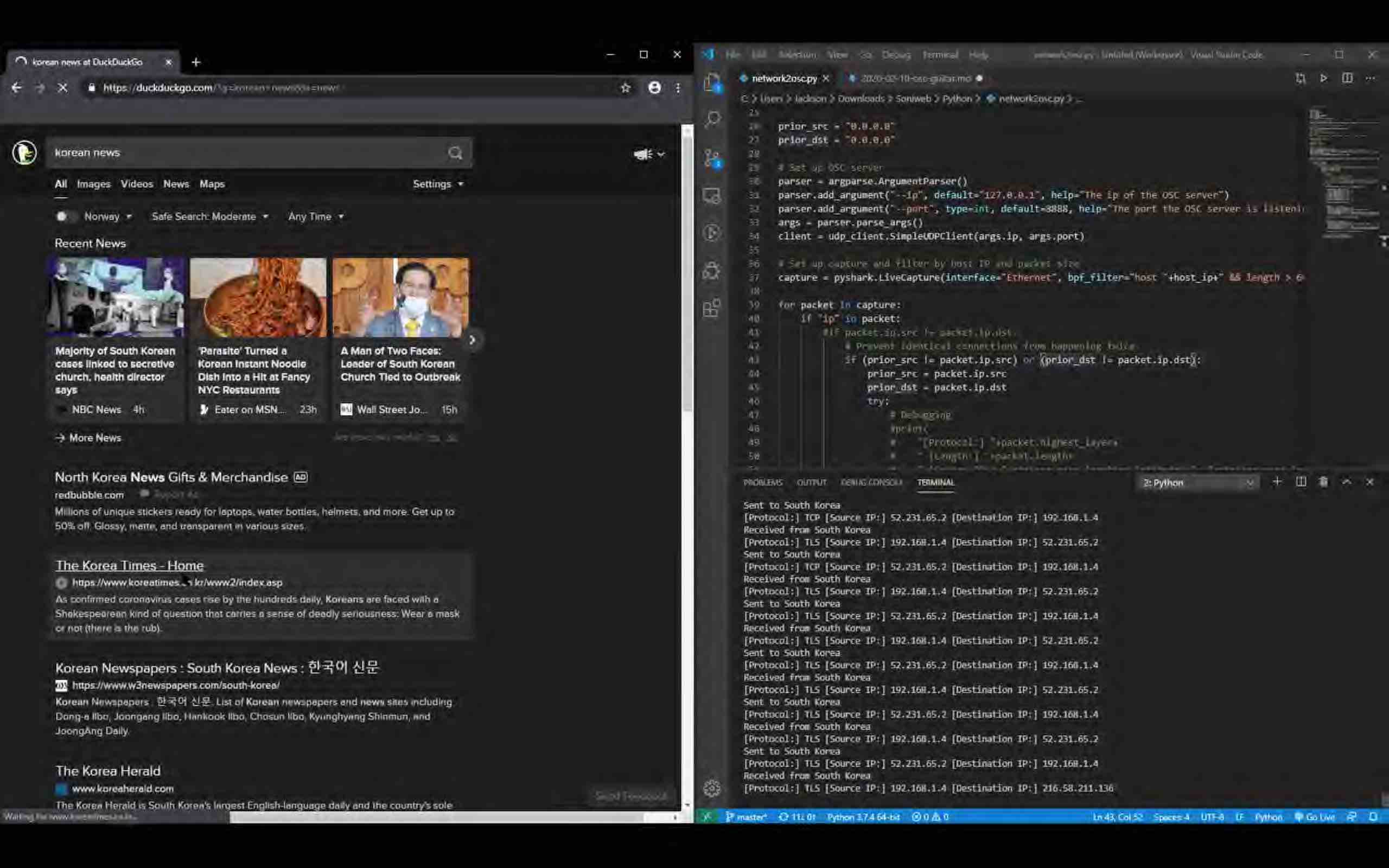

Soniweb: Epilogue

An update to the Soniweb project featuring dynamic sonification!

-

Zoom and other streaming services for jamming

One thing you can do is to enable the option of 'preserve original audio' in Zoom.

-

Exploring SoundJack

SoundJack is a p2p browser-based low-latency telematic communications system.

-

Smokey and the Bandwidth

Hijacking Old Tech for New Uses

-

Testing out Jacktrip

As we settle into the COVID tech cocoon of isolation, we test out a technology that might fulfill our dreams of real-time audio communication.

-

MCT vs. Corona

In light of the recent microbial world war, we have taken matters into our own hands by sharing audio programming expertise through small introductory courses on Zoom.

-

Soniweb: An Experiment in Web Traffic Sonification and Ambisonics

For the course MCT4046 Sonification and Sound Design, our group was tasked to create a system that collects and sonifies data in real time. For this project, we went even further(!) and spatialized the network data that passes through a computer.

-

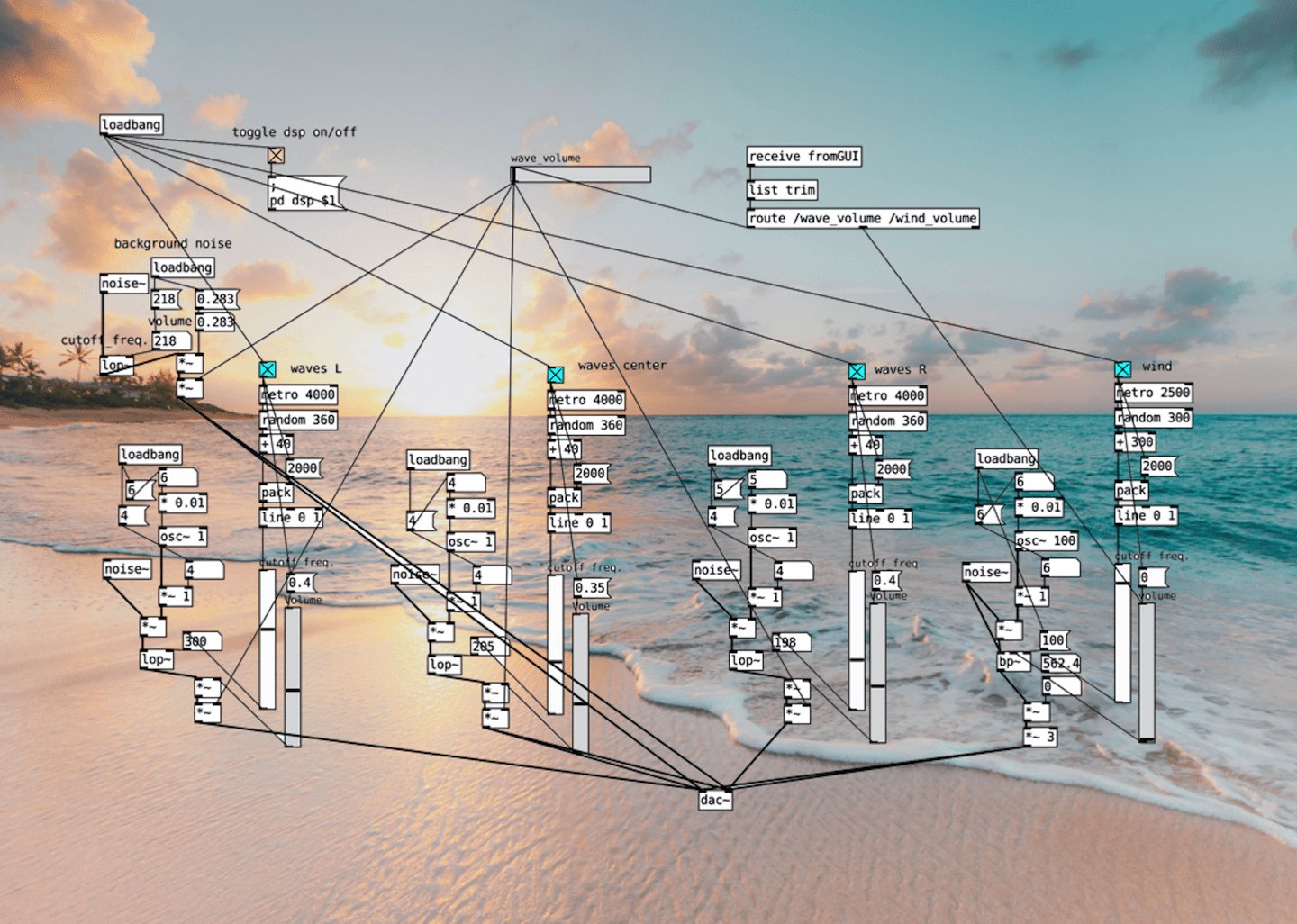

Weather Music

Experiencing weather is a multi-sensory and complex experience. The objective of our sonification project was to sonify the weather through the use of online video streams.

-

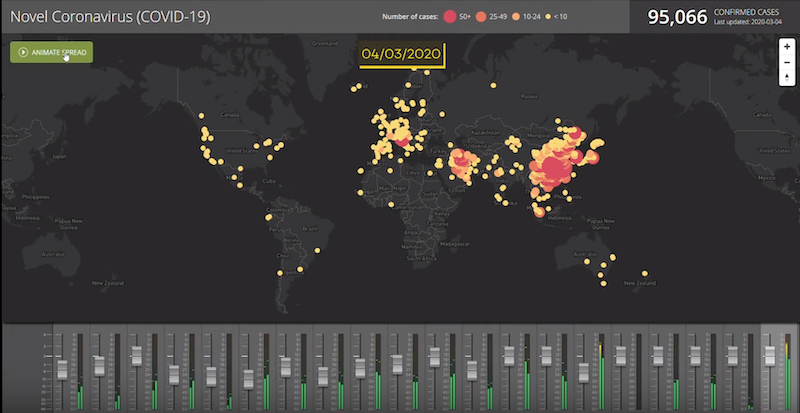

Sonifying The Coronavirus Pandemic

Finding a voice for difficult data

-

The Immersive Portal

The MCT portal is rigged for ambisonics to fit a virtual classroom in a classroom

-

![[ pd Loop Station ] [ pd Loop Station ]](/assets/image/2020_02_11_rayaml_blog-cover-small.jpg)

[ pd Loop Station ]

This is an attempt to create a Loop Station with features that I wish I had in such a pedal / software.

-

Making Noises With |noise~|

Wherein I attempt to either program paradise or just make bacon-frying noises, which could be the same thing, actually

-

Sonification of plants through Pure Data

I am not sure if I am going crazy or if I am actually interacting with plants, but hear me out.

-

Strumming through space and OSC

A gesture-driven guitar built in Pure Data and utilizing OSC

-

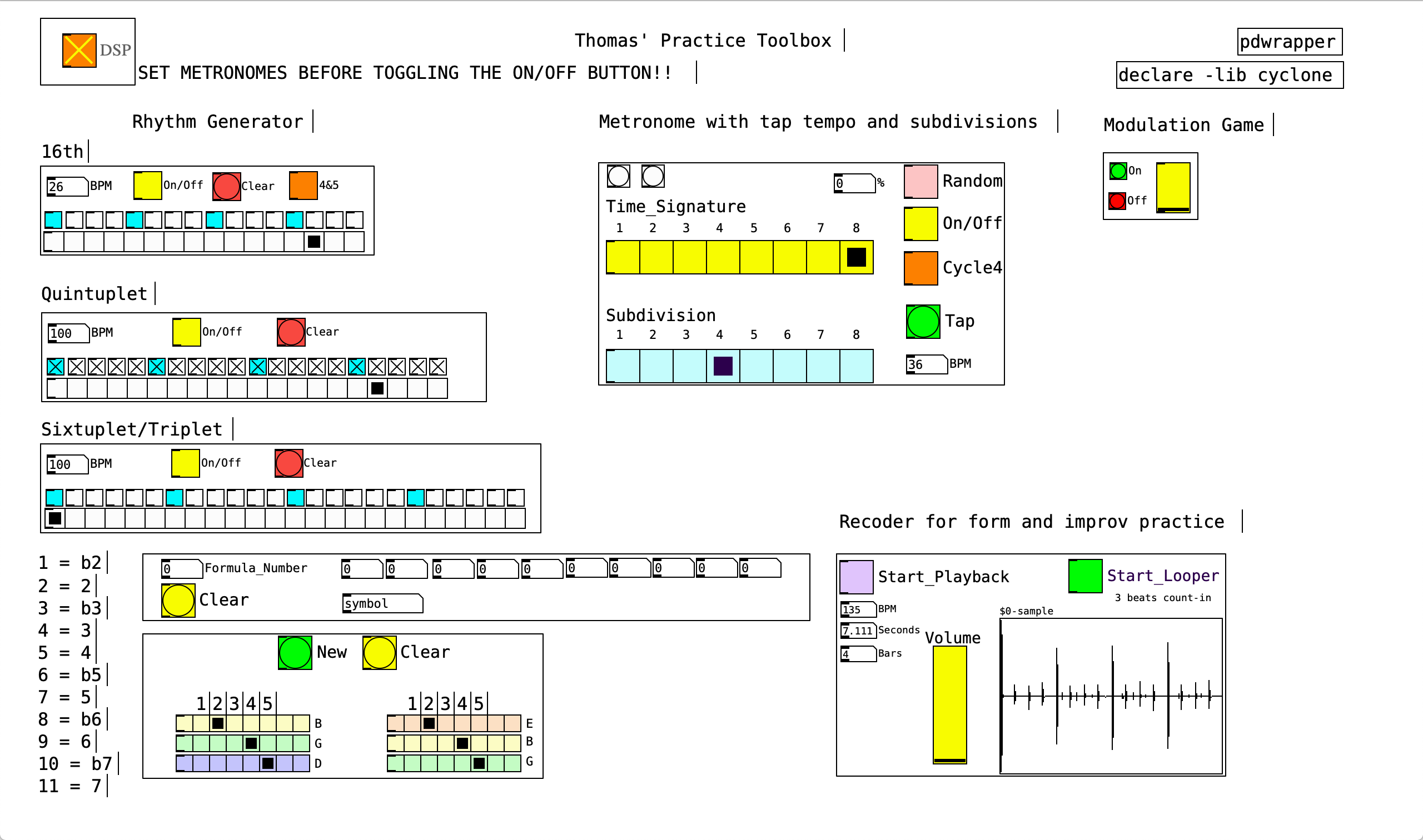

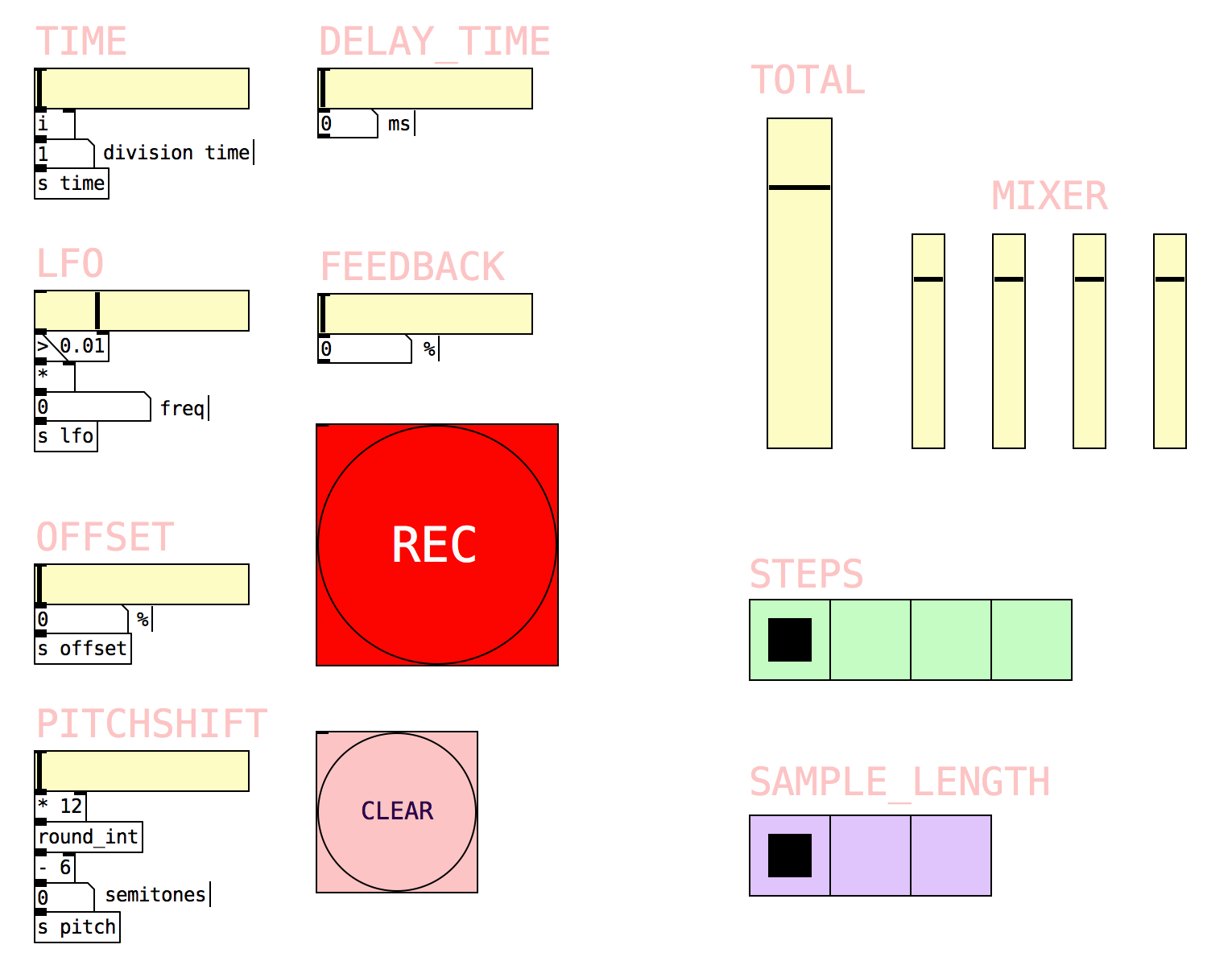

Practice-Toolbox-for-students-of-music

In our audio programming course we were tasked to make a PD-patch without any restrictions on what it should be. I wanted to make something useful I could incorporate in my daily practice routine, and also distribute to some of my guitar students.

-

Multi voice mobile sampler

A mobile tool to dabble with small audio recordings wherever you encounter them

-

The Delay Harmonizer

This chord generator uses a variable length delay fed by a microphone input as sound source.

-

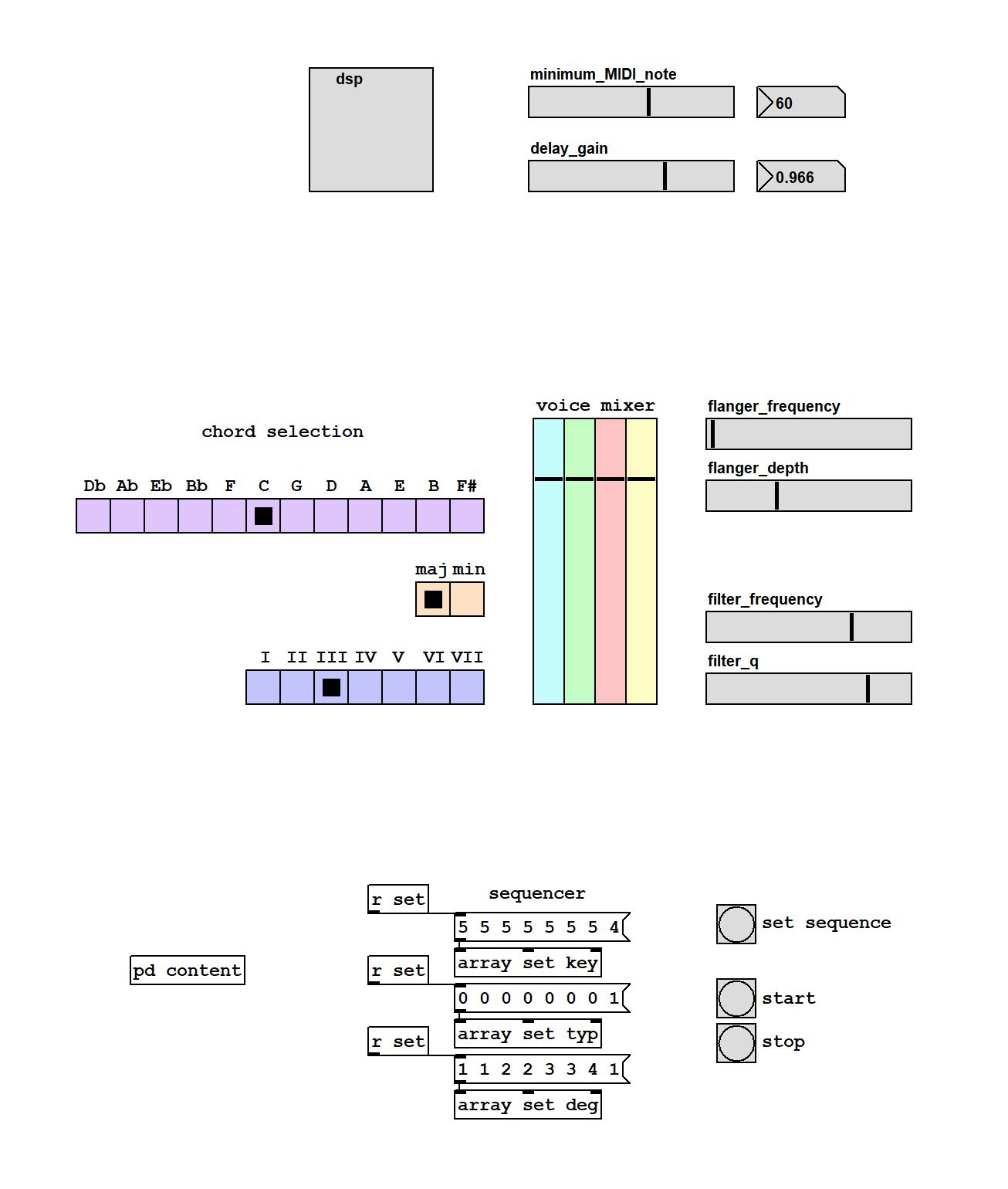

The MIDI Remixer

This sequencer-based polyphonic FM synthesizer invites its users to remix and play with some of Johann Sebastian Bach's most famous preludes and fugues.

-

Camera Optimization in the Portal

On the quest for optimizing the visual aspect of the Portal

-

Testing Latency in the Portal

We 'officially' test some of the latency in the Oslo and Trondheim Portals

-

Staggering towards the light

During a hackathon in our introductory course on physical computing, we developed a prototype of a DMI. In our earlier blog post on this project we explained how the system was built and gave a short summary of our performance. In this blog post, however, we look at the instrument from an HCI perspective, focusing on a summary of the problem space, the research question, the methodology used, and our main findings and contributions.

-

Prototyping musical instruments

Prototyping musical instruments in the name of recycling - exploring Orchestrash from an HCI point of view

-

Portal ideas

Instead of starting up the M32 every day, recalling the correct preset, adjusting the faders, turning on the screens, turning on the speakers, opening LOLA, connecting to the other side, pulling your hair out because nothing will work... Imagine just pressing a button and it all just works.

-

The B Team Wraps up the Portal

We made it out of the Portal, now what?

-

Reflections on the Christmas concert

Trondheim reflects on the Christmas concert 2019

-

The B Team Dives in Deep (Learning!)

Well, here we are, at the end of a semester where one of the most challenging courses remains. Only Rebecca Fiebrink can save us now.

-

Group C Learns to Think about how Machines Learn to Think

Wherein we describe the denouement of MCT4000, Module #9: Machine Learning.

-

Music and machine learning - Group A

Using machine learning for performance and for classifying sounds

-

3D Radio Theater - Lilleby

A 3D radio theater, produced by MCT students, Trondheim

-

Spatial Trip - a Spatial Audio Composition

In this project, we recorded and synthesized sounds with spatial aspects, meant to be heard over headphones or loudspeakers (the 8-channel setup in Oslo/Trondheim). The aim was to simulate both indoor and outdoor scenarios, real or fictional.

-

Orchestrash hackathon performance

The title of our project is "Orchestrash" inspired by the theme of the competition and our approach to solving it, by making individual instruments controlled by recycled materials and "recycling" sound by sampling

-

The B Team: Mini-Hackathon

For the MCT 4000 mini-hackathon in the physical computing module we tried to send sound at the speed of light.

-

Physical Computing: Heckathon: Group C

Taking our cue from the main theme of the Day 4 Hackathon of “Recycling”, Team C chose the 2017 U.S. withdrawal from the Paris Agreement on climate change mitigation as a central theme in our work.

-

Microphone Testing Results

We've spent a few days (in addition to the many miscellaneous hours during class) reconfiguring the Portal and testing out new hardware and how it might improve the quality of our sound.

-

Wizard_of_Vox

Wizard Of Vox is a gesture-based speech synthesis system that can be “made to speak”.

-

The Fønvind Device

For my interactive music systems project, I wanted to make use of the Bela's analog inputs and outputs to make a synthesizer capable of producing not only sound, but also analog control signals that can be used with an analog modular synthesizer. This post goes briefly through some of the features and the design of my system, and at the end there is a video demonstration of the system in use.

-

Instant Music, Subtlety later

When drafting ideas in unknown territory, one can become overwhelmed by the seemingly endless options for creating an IMS (interactive music system). Here is a real-time processing board for voice with gesture control.

-

The B Team: To Heck and Back

Today we began our experiments with some lofi hardware, simple contact mics, speakers, batteries, and some crocodile cables to connect it all. We left in pieces.

-

Physical Computing Day One: Victorian Synthesizer Madness! Group C Reports In From Heck

The first day of Physical Computing started and ended with a bit of confusion in the Portal, but that is par for the course. Once we set up the various cameras and microphones, and dealt with feedback, echo, etc, the fun began!

-

Physical computing Day 1 - Group B

First day of physical computing

-

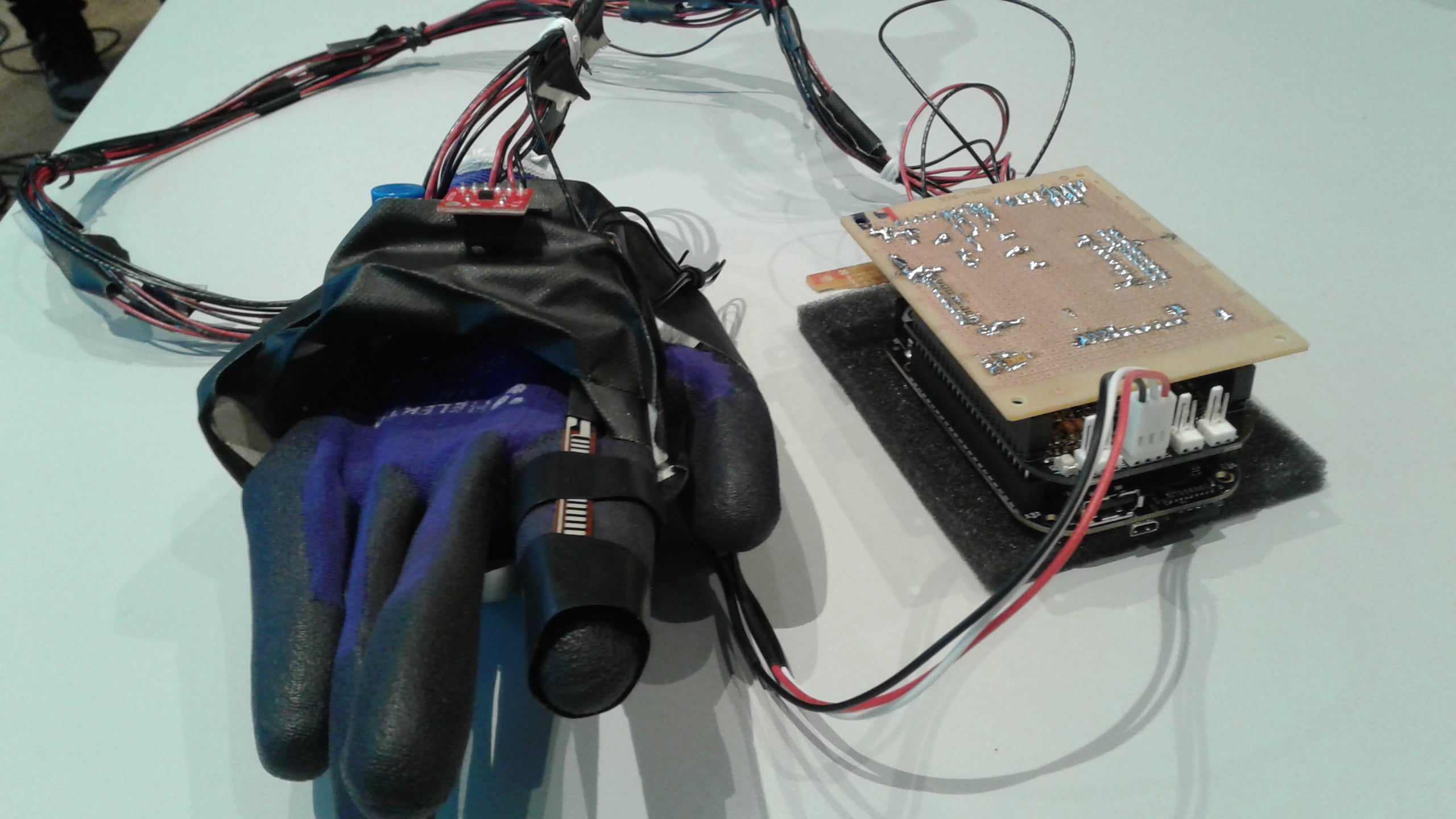

AudioBend

My project idea for Interactive Music Systems was to build a glove that can manipulate sound. It was inspired by the “mi.mu Gloves”. The paper on the “Data Glove” gave me ideas for the design of the glove, although it works quite differently from mine. The “Data Glove” uses multiple flex sensors on the fingers, force-sensitive sensors on the fingertips and an accelerometer for wrist control. In my glove I used a flex sensor on the index finger, a 3-axis accelerometer on my hand and an ultrasonic distance sensor on my palm. Attaching all of this to the glove was a bit tricky, but duct tape saved my life.

-

Alien_Hamster_Ball

The Alien Hamster ball - an instrument expressed through a 3D space

-

LoopsOnFoam

During a 2-week intensive workshop in the course Interactive Music Systems I worked on the development of an instrument prototype, which I named LoopsOnFoam.

-

Microture

Microture is an interactive music system based on manipulating the input sound (microphone sound) with small gestures.

-

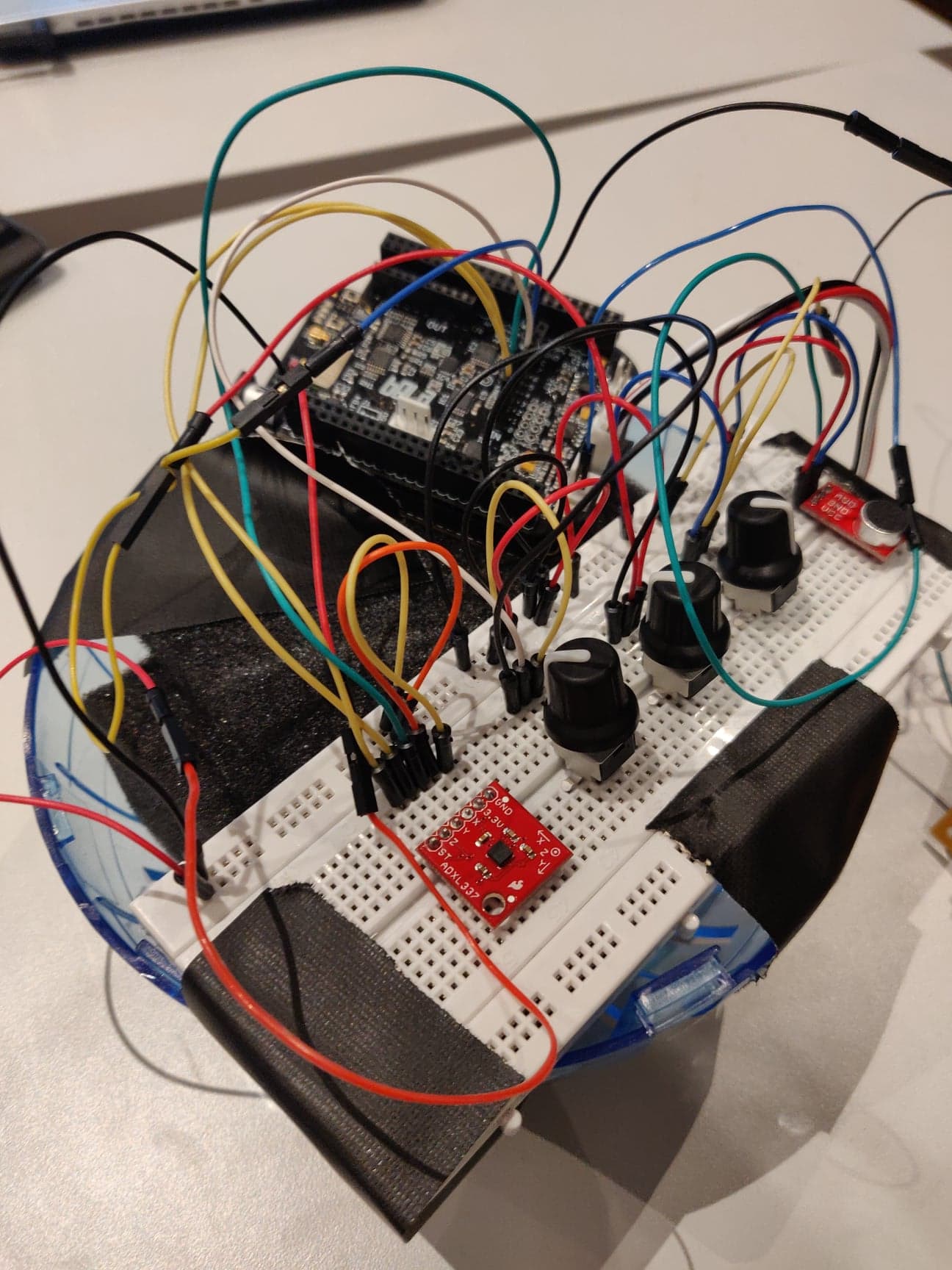

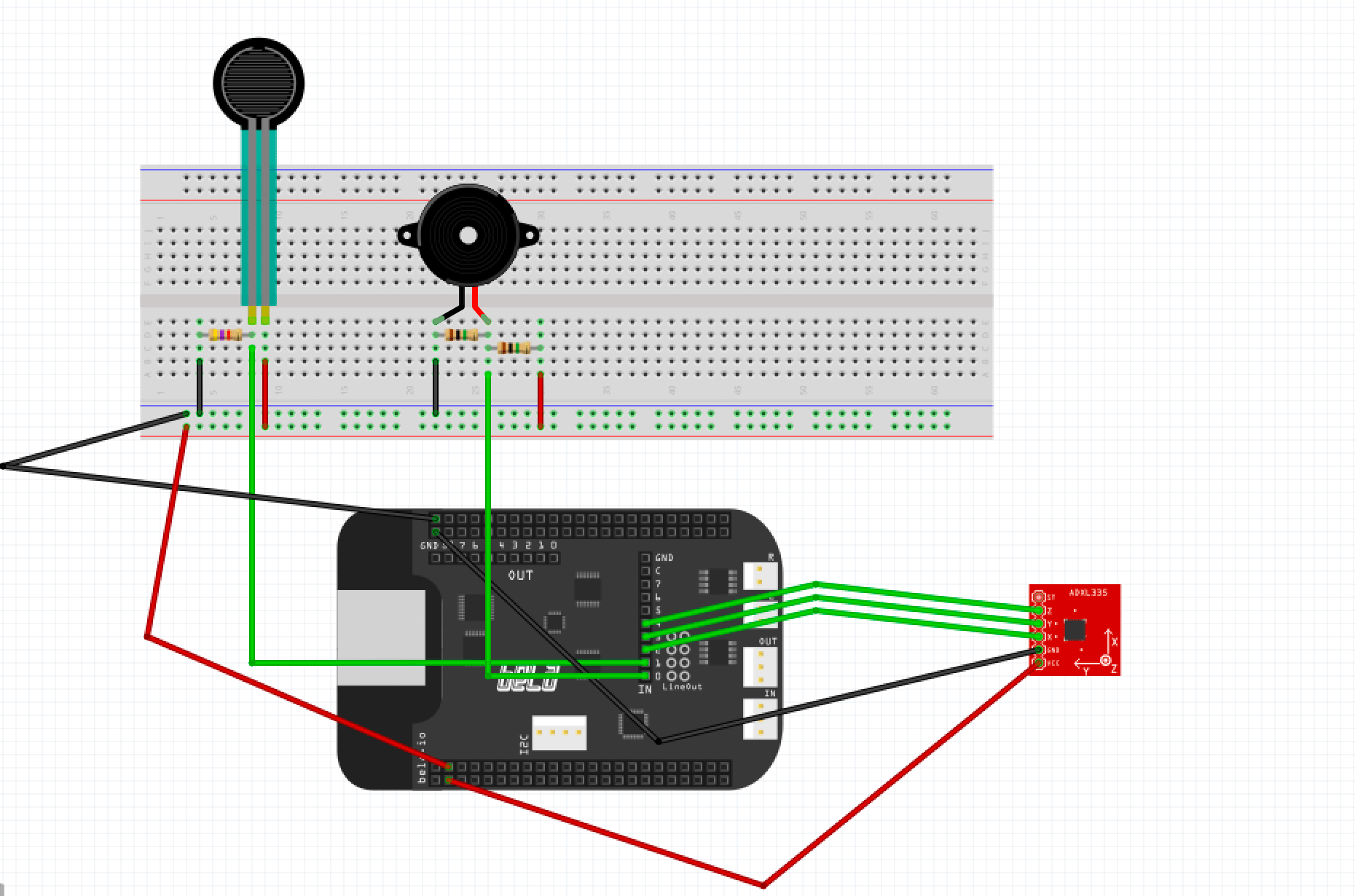

Body Drums - A wearable drumset

For the course MCT4045 - Interactive Music Systems, I built a wearable drumset. It consists of a piezo element placed on one hand, a force-sensing resistor (FSR) on the other and an accelerometer on the foot. Each sensor triggers one sound file: in my case a kick drum sound for the foot, a snare drum sound for the piezo element and a hi-hat sound for the FSR. When a sensor is triggered, the sound mapped to it is played. For example, if I stomp my foot, the kick drum sound is played.

-

The HySax - Augmented saxophone meant for musical performance

An augmented saxophone meant for musical performance, enabling a background layer and delay to be controlled via gestures.

-

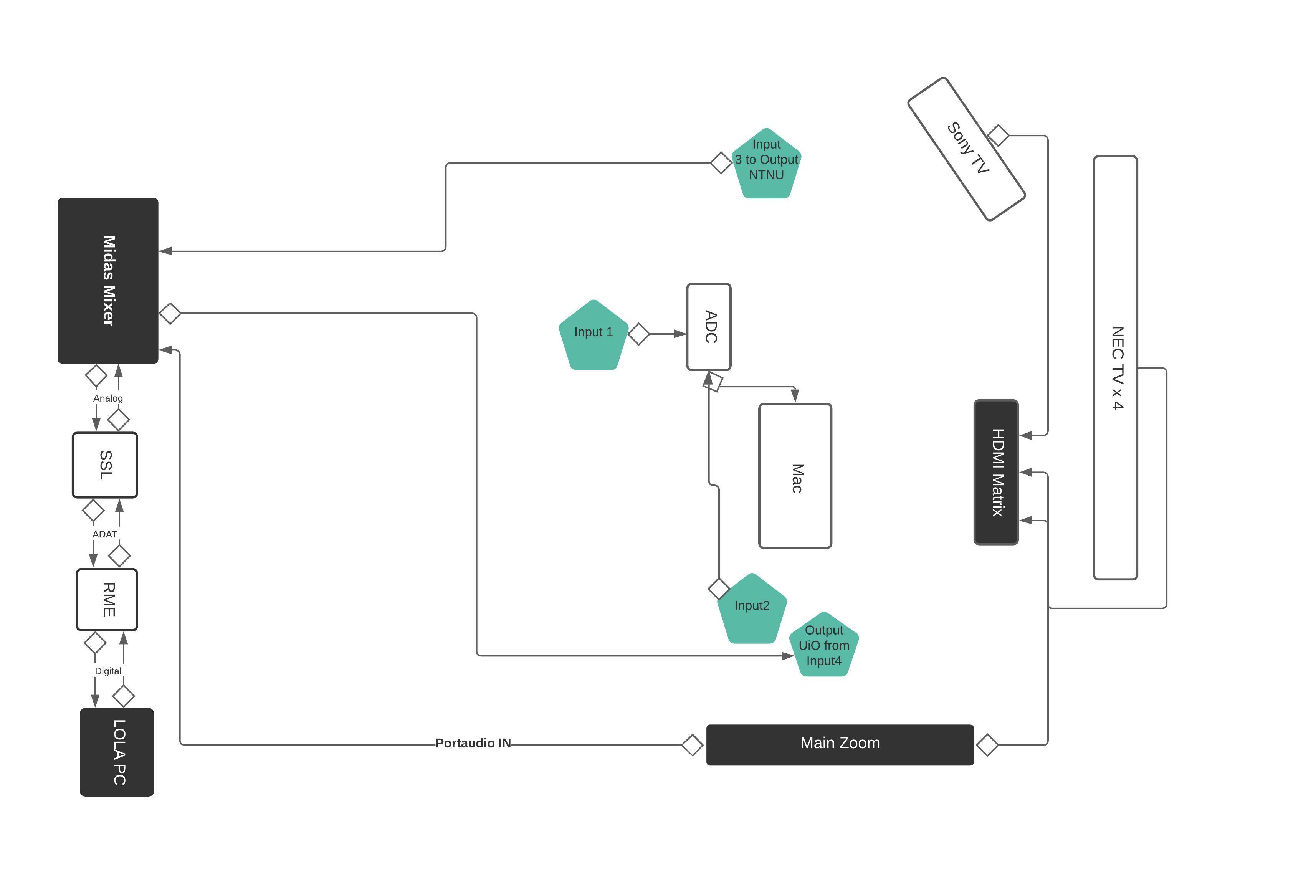

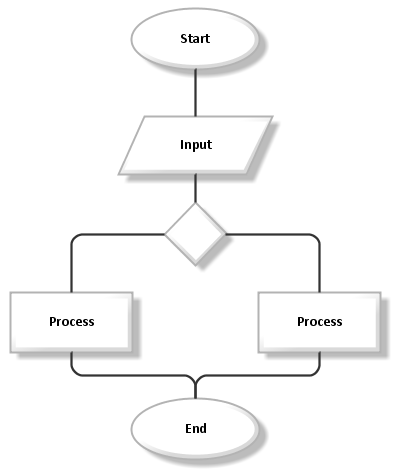

Portal Flowchart

The MCT portal has been subject to many configurations over the last couple of months. In this post, we explore how flowcharts may help us see a brighter tomorrow (we need as much light as we can get here).

-

Recovio: Entrepreneurship - Group C

Group C's project for the MCT 4015 Entrepreneurship course. Recovio is an audio digitization and storage company that serves companies at the scale and price point that best fit their needs.

-

Entrepreneurship for MCT - Group B

Summary of MCT4015 project from group B

-

The importance of sound quality

Reflections after several lectures with sub-optimal sound quality

-

UltraGrid

Exploring an alternative audio and video network transmission software in the MCT portal.

-

Machine Learning, it's all about the data

For my machine learning project, I wanted to see if I could teach my laptop to distinguish between different types of music using a large amount of data. Using metadata from a large dataset for music analysis, I tested different machine learning classifiers with supervised learning to distinguish between tracks labeled 'Rock' and 'Electronic'. The project was developed using Python and libraries for data analysis and machine learning.

-

Clustering high dimensional data

In my project for Music and Machine Learning, I used raw audio data to see how well the k-means clustering technique would work for structuring and classifying an unlabelled dataset of voice recordings.

-

MIDI drum beat generation

Most music production today depends strongly on technology, from the beginning of a song's creation to the final tweaks during mixing and mastering. Still, there are usually many human aspects involved, such as singing, playing instruments and using music-making software.

-

Could DSP it?

Is polarity the solution?

-

IR Reverberation Classifier using Machine Learning

Using Machine Learning to classify different reverb spaces (using impulse response files)

-

Multi-Layer Perceptron Classifier of Dry/Wet Saxophone Sound

The application I have decided to work on is of a machine learning model that can ultimately differentiate between a saxophone sound with effect (wet) and without effect (dry).

-

Triggering effects, based on playing style

Technology in collaboration with art can lead to creative solutions and achievements. Here we use machine learning to ease the player's work while playing an instrument.

-

Classification of string instruments

During a 2-week intensive workshop in the course Music and Machine Learning, I had to develop a machine learning system for the field of music technology.

-

An introduction to automix

At first glance, automix might look like your regular old expander or gate, but what makes automix special is that it does not only work on a channel to channel basis, but links all the channels in an automix group together and opens up the channel that has the strongest signal, while ducking the others.

-

Reflections on diversity

First-year students in Trondheim reflect on diversity in MCT

-

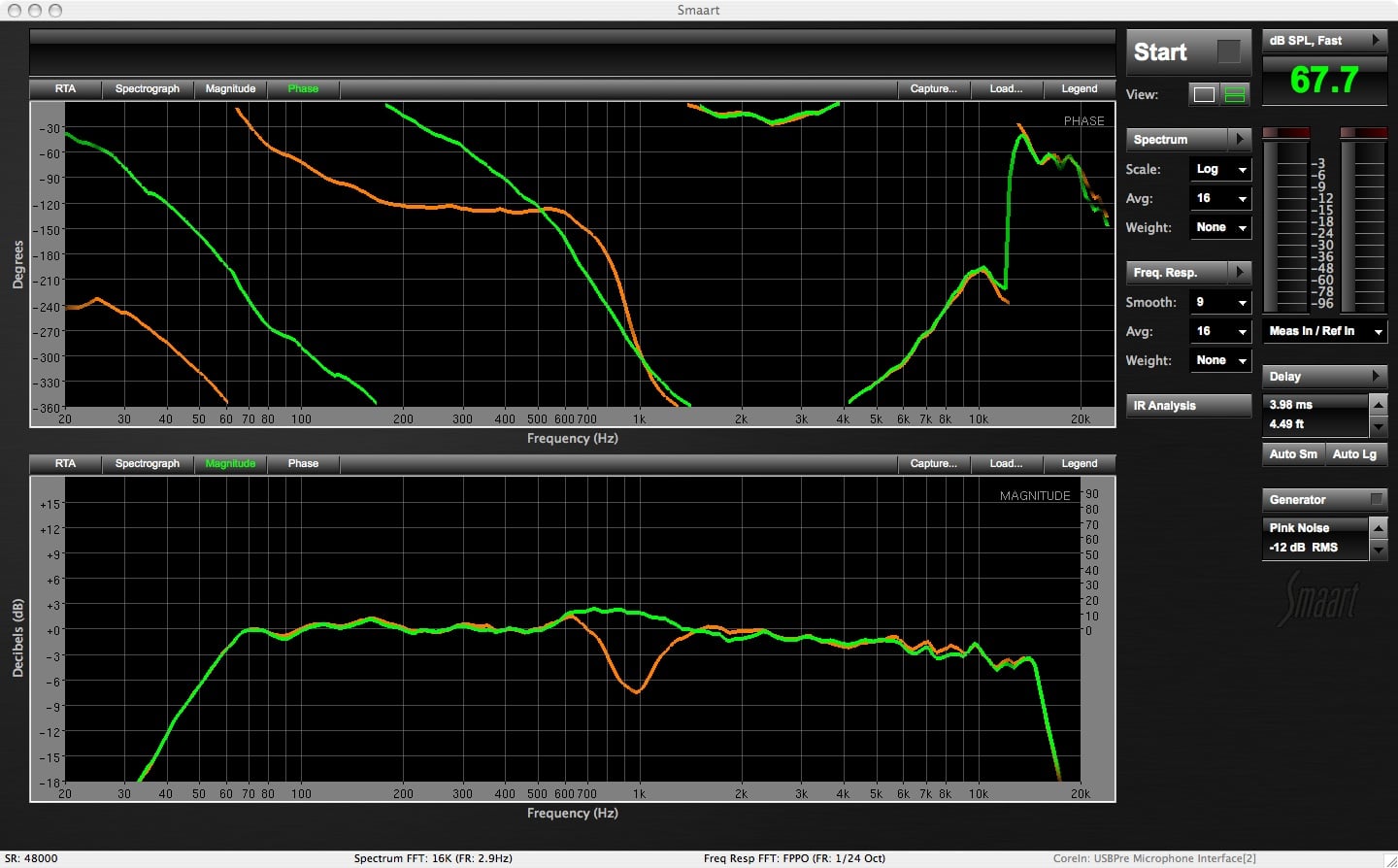

It's not just phase, mom!

Why phase coherency in loudspeakers matters.

-

Sonification of Near Earth Objects

As a part of a two-week workshop in the Sonification and Sound design course, we worked on the development of a self-chosen sonification project. For three days we explored how to design and build an auditory model of Near-Earth Objects (NEO) with data collected from NASA.

-

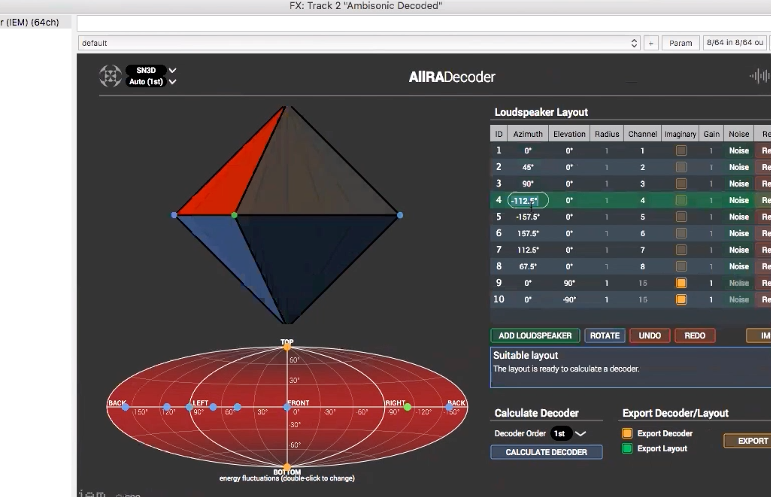

Experiencing Ambisonics Binaurally

During the MCT2022 Physical-virtual communication course we had two sessions where we explored multichannel and ambisonic decoding. The first session, on February 27th, was mainly about recording a four-channel A-format stream with an Ambisonic 360 sound microphone. We converted the A-format mic signal into a full-sphere surround sound format, which was then decoded to an 8-loudspeaker layout placed evenly across the Portal space. We used the AIIRADecoder from the free and open source IEM plug-in suite.

-

Blue sky visions on sonic futures

Pedro Duarte Pestana was the final guest speaker, joining us virtually from Porto with his presentation 'Career Management in Music Technology / Knowledge Engineering in Music Technology and Sonic Arts'. Pedro was the first student in Portugal to earn a PhD in computer music. He works as a researcher and consultant for emerging audio technologies and intelligent systems.

-

Scenarios in the Trondheim Portal during the spring semester-2019

We have had numerous scenarios set up in the Portal in the period January-May 2019. Each scenario is unique and therefore serves specific functions. This blog presents four such scenarios, with some discussion of the advantages and challenges of each setup.

-

Augmented Reality

In the final Portal workshop of this semester we were looking at Ambisonics as a potential way to create an augmented auditory space from the perspective of sound.

-

A generic overview to the 'Sound in Space' exhibition at KIT

Marking the final event before Easter and from our Sonification and Sound design course I was tasked to visit the group exhibition 'Sound in Space' that took place on the 11th of April at Gallery KIT, Trondheim. It was also the closing event for the sound art course in which music-technology and fine art academy students (NTNU) could participate.

-

How music related motion tracking can sound

During the course 'Music related Motion Tracking' the students took several approaches to realizing their ideas. The OptiTrack system, new to all of us, consists of infrared cameras, markers and software with calibration tools. We explored its functions from scratch during the first week while hosting the 'Nordic-stand-still-championship' on both campuses.

-

Brexisonification

The goal of the project is to sonify Brexit in a way that lets the audience gain new insight from the data through audio.

-

The Sound of Traffic - Sonic Vehicle Pathelormeter

Is it possible to transmit complex datasets within an instance of a sound, so that the content gets revealed? Because communication and dissemination of information in our modern digital world have been highly dominated by visual aspects, the modality of sound has been neglected. To test this hypothesis, the project presents a model for sonification of temporal-spatial traffic data, based on the Parametric Mapping Sonification (PMSon) technique.

-

MuX -playground for real-time sound exploration

It was fascinating to have Edo Fouilloux in the MCT sonification seminar series. Edo is a visual innovator and a multidisciplinary craftsman of graphics, sound, computing and interaction. He co-founded Decochon in 2015 to work with Mixed Reality (XR) technologies. MuX is a pioneering software in the field of interactive music in virtual reality systems. Edo demonstrated the concepts and philosophies behind MuX, where it is possible to build and play new instruments in virtual space.

-

Ole Neiling Lecture

I had the pleasure of introducing Ole Neiling, an extradisciplinary 'life-style' artist. This was part of a series of lectures held over the Portal in the sonification and sound design module.

-

Presentation by Pamela Z

As part of our Sonification and Sound Design course (MCT4046), we were fortunate enough to host scholars and artists who are well established within the sonification and sound design field. Pamela Z is a composer, performer and media artist known for her work with voice and electronic processing. Pamela was in Norway for several workshops and performances, and we were lucky enough to have her for a short presentation on April 4th. After a brief introduction by Tone Åse, a long-time fan of Pamela’s work, Pamela started the session with a 10-minute performance: a live improvised mashup of several existing pieces she often performs. While performing, Pamela is surrounded by several self-made sensor devices connected to her laptop. On her hands she wears sensors that send signals to her hardware setup. She sings and makes sounds with her voice, hands and body, and manipulates all of it with hand gestures.

-

An Overview of Sonification by Thomas Hermann

It was my privilege and honour to facilitate a guest lecture and introduce one of the 'Gurus' in the field of sonification, Dr. Thomas Hermann. He shared his enormous knowledge of sonification, with hands-on exercises, over two days (March 28 and 29, 2019) through the MCT Portal in Trondheim. I am excited to share my notes and will try to summarize his talks in this blog.

-

Using Speech As Musical Material

As a part of a three-week workshop in the course Sonification and Sound design at MCT, we were lucky to have Daniel Formo as a guest speaker.

-

Advanced collaborative spaces, tele-immersive systems and the Nidarøs Sculpture

Leif Arne Rønningen introduced us to 'Advanced Collaboration Spaces, requirements and possible realisations' and to the 'Nidarøs Sculpture', a dynamic vision and audio sculpture. In both parts, Leif's main research areas, tele-immersive collaboration systems and low-latency networks, are at the forefront.

-

MoCap Recap - Two weeks recap of a Motion Tracking workshop

During weeks 10-11 we attended the Music-Related Motion Tracking course (MCT4043) as part of the MCT program. The first week started with installing the OptiTrack system in Oslo: placing cameras, connecting wires to hubs, and installing and setting up the software. We got familiar with the Motive:Body software and were able to run calibrations, set and label markers, record motion data, export it correctly, and experiment with sonifying the results using both recorded and streamed motion capture data.

-

Composition and mapping in sound installations “Flyndre” and “VLBI Music”

Øyvind Brandtsegg talks about the creation and life cycle of two art installations in this inspiring talk. Follow the link to read about the first lecture in a series about sonification.

-

Repairing scissors and preparing the portal for talks

-

Ambisonics!

On 27 February 2019, we had a workshop on Ambisonics in the Portal course. Anders Tveit gave us a lecture on how to encode and decode sound inputs from LoLa using the AllRADecoder in Reaper.

-

Touch the Alien

'Touch the Alien' is a web audio synth made by Eigil Aandahl, Sam Roman, Jonas Bjordal and Mari Lesteberg in the master's programme Music, Communication and Technology at the University of Oslo and the Norwegian University of Science and Technology. The application offers touchscreen functionality, oscillators, an FM oscillator, delay, phaser, chorus and a filter on a dry/wet slider, and a canvas UI with visual effects that follow your input. And it's alien-themed, for your pleasure!

-

The Magic Piano

During our second week learning about audio programming and the Web Audio API, we were divided into groups and had to come up with an idea for a final project. The main challenges were to find an idea that was doable within four days, to code collaboratively, and to prepare the presentation of our project. Guy had an idea for building a piano keyboard that would help beginners play a simple melody, and Ashane and Jørgen agreed to join forces with him in creating 'The Magic Piano'.

-

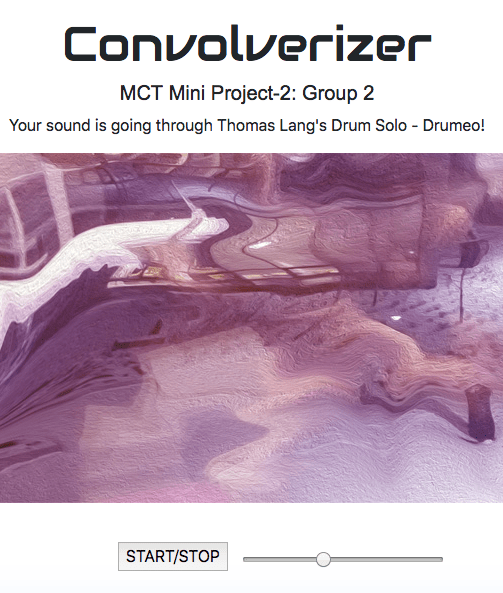

Convolverizer

Convolverizer: real-time processing of ambient sound, voice or live instruments using the convolution effect.
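Convolution is what gives this kind of effect its character: every output sample is a weighted sum of past input samples, with the weights taken from an impulse response (for example, a recorded room or another sound). The sketch below shows the underlying arithmetic in its simplest, direct form. It is not how Convolverizer itself is implemented; in the browser this is usually delegated to the Web Audio API's `ConvolverNode`, which uses much faster methods. The function name `convolve` is our own.

```python
def convolve(signal, impulse_response):
    """Direct (O(N*M)) linear convolution of two lists of samples.

    Each input sample x[n] adds a copy of the impulse response,
    scaled by x[n] and shifted by n samples, into the output.
    """
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


# A single click through a two-tap "room" reproduces the room's response.
print(convolve([1.0, 0.0, 0.0], [0.5, 0.25]))
```

The output is longer than the input by the length of the impulse response minus one, which is why a convolution reverb keeps ringing after the dry sound stops.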

-

The Giant Steps Player

As part of the MCT master program we are being introduced to a variety of technologies for creating music and sounds. We have just finished a week-long workshop learning about audio programming and the Web Audio API. This technology is relevant in areas like art, entertainment and education. We were introduced to several ways of creating and manipulating sound, followed tutorials and experimented on our own during the days. I must admit that I do not have extensive knowledge of programming in general, and JavaScript in particular. Many failures occurred along the way, from simple syntax errors to flawed design. But understanding the idea behind each process and striving towards the desired result was an important step forward.

-

The Spaghetti Code Music Player

The Spaghetti Code Music Player is a simple music player that is loaded with one of my own tracks. The player allows you to play and stop the tune, turn on and off a delay effect and control a filter with your computer mouse. The player also has a volume control.

-

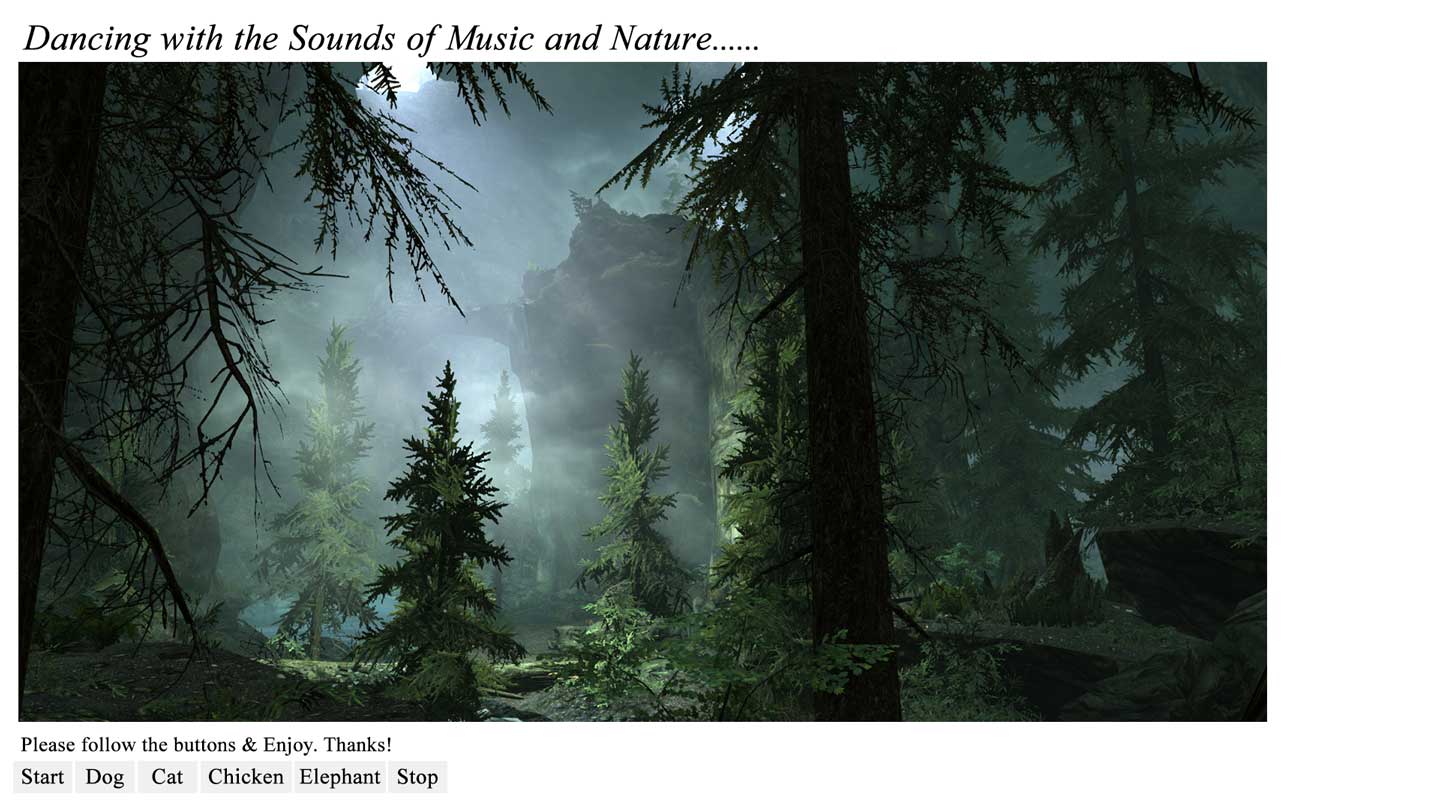

Odyssey

Odyssey is a simple prototype Web Audio API application designed to immerse users in a misty jungle environment. Besides the soundscape of a jungle, the application adds a touch of flavour from a few domestic animals and mixes them all together with a piece of jazz music. The application is developed using HTML5 and JavaScript.

-

Catch the wave – First week's dive into web audio programming

It is possible to create simple but effective applications in the web browser, even without prior knowledge. Implementing those ideas took much longer than expected, but luckily there was always someone around to ask.

-

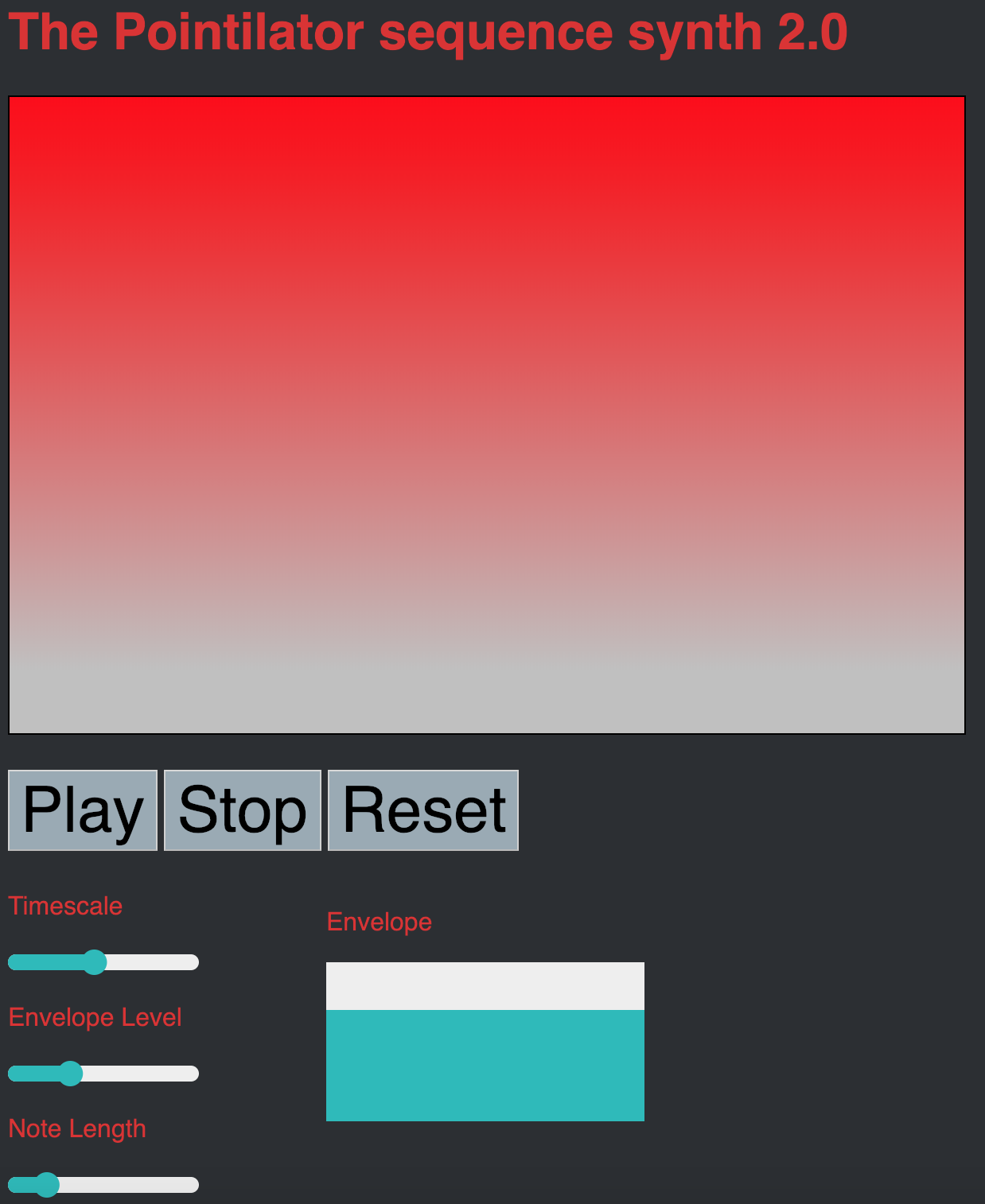

The Pointilator Sequence Synthesizer

The Pointilator sequence synth is an experimental instrument that can be played directly from a web browser! It has been tested to work in Opera and Chrome, but it does not work in Safari. It is based on entering a sequence of notes as points on a canvas that registers each click and draws a circle where the note was placed. It then plays back the notes from left to right, with the height of each click translated into pitch. The result is a sequencing synthesizer with a finely detailed scope in both time and pitch, although it is not easy to control in terms of traditional musical scales or rhythmic time.
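The mapping described above (horizontal position as time, height as pitch) can be sketched as follows. This is a minimal illustration, not the Pointilator's actual code: the sequence length, the 110 to 880 Hz range and the exponential pitch curve are all assumptions chosen for the example, and `points_to_notes` and `Note` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Note:
    onset: float      # seconds from the start of the sequence
    frequency: float  # Hz


def points_to_notes(points, width, height, duration=4.0,
                    f_low=110.0, f_high=880.0):
    """Turn canvas clicks (x, y) into notes played from left to right.

    Assumed mapping: x -> onset time in [0, duration),
    y -> pitch, where higher on the canvas means higher pitch.
    Pitch is mapped exponentially, so equal vertical distances
    give equal musical intervals.
    """
    notes = []
    for x, y in sorted(points):  # sorting by x gives left-to-right order
        onset = duration * x / width
        # Canvas y grows downwards, so invert it: top -> 1.0, bottom -> 0.0.
        position = 1.0 - y / height
        frequency = f_low * (f_high / f_low) ** position
        notes.append(Note(onset, frequency))
    return notes


for note in points_to_notes([(400, 0), (0, 300), (200, 150)],
                            width=800, height=300):
    print(f"{note.onset:.2f} s  {note.frequency:.1f} Hz")
```

Because neither axis is quantised, every click lands on an arbitrary time and frequency, which matches the "finely detailed but hard to control" character described in the post.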

-

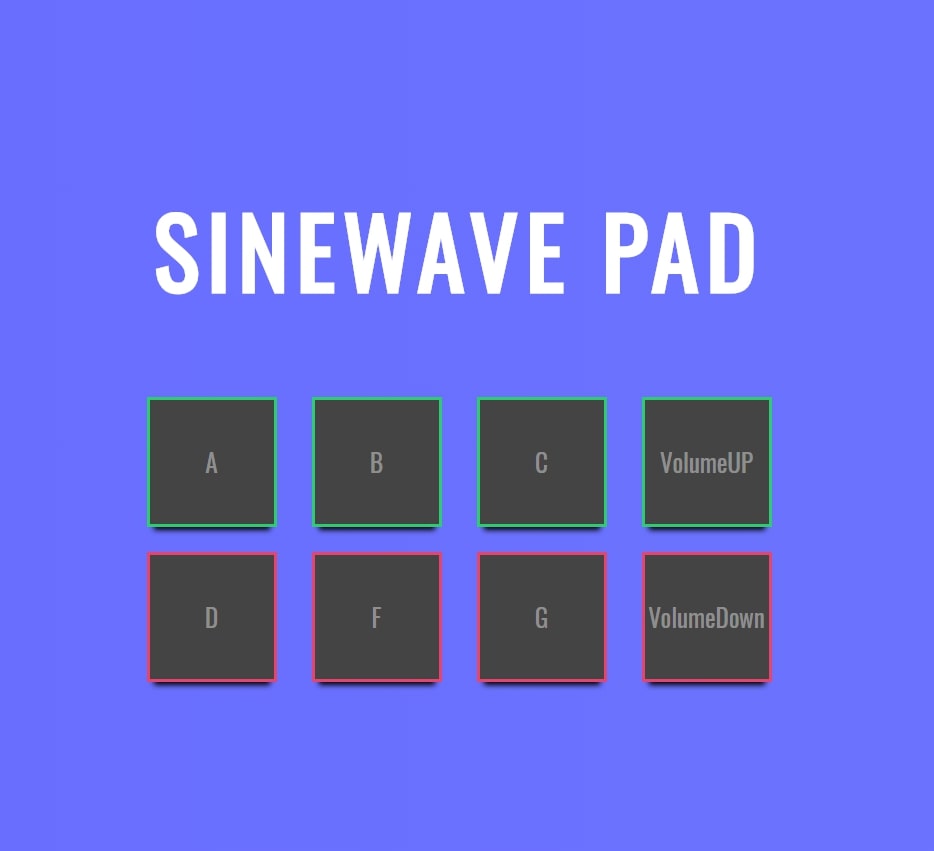

SineWave Pad

It was a wonderful week of hands-on experience with the Web Audio API and JavaScript. In the beginning, I was tense about how I would handle coding with zero prior experience. But by the end of the week, I was happy with what I had managed to achieve. I was lacking ideas for a project at first, but after being introduced to oscillators, I thought of making a synthesizer or a drum pad that works in the browser. So the choice was between working with oscillators or with sound loops.

-

Reese da Alien!

The project I developed over the first week of web audio programming is called Reese da Alien, a web-based synth of sorts with mouse functionality. The idea is that the program offers a relatively novel way of producing a reese: the user moves the mouse around the page to find different sweet spots, as the movements affect the pitch and amplitude of two oscillators. The persona of the application came about early in development, when I likened the sounds to an alien talking; it felt a fitting title for the weird, abrasive sounds the program creates.

-

Freak Show

My first experience working with the Web Audio API, using JS, HTML and CSS, was quite a challenge, but a pleasant one that led to the outcome I wanted and also broadened my perspective regarding my future plans.

-

The Mono Synth

This blog post outlines the production of the Mono Synth. The Mono Synth was drawn by Jørgen N. Varpe, who also wrote a lot of the code. The objective of this prototype was to improve my familiarity with coding while building a working chromatic instrument. Working with a chromatic instrument is interesting because it gives me a less abstract understanding of what happens in the code, behind the scenes if you will.

-

The Wavesynth

During the first workshop week of the Audio Programming course, I worked on a project I have called "The Wavesynth", because it uses wavetables to shape the output of an oscillator. I have not made a wavetable synthesizer like, for instance, Ableton's Wavetable, where you can interpolate between waves. Instead, I use some wavetables created by Google Chrome Labs to make it sound like "real" instruments. The synth is played with the computer keyboard, and the user can choose the output sound and adjust three different effects to shape it the way they want. The synthesizer is made using web technologies, including HTML, JavaScript, the Web Audio API, and more.
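In the Web Audio API, a custom oscillator waveform is usually described by two arrays of Fourier coefficients (cosine terms `real` and sine terms `imag`) passed to `createPeriodicWave(real, imag)` and applied with `OscillatorNode.setPeriodicWave()`. We assume the Chrome Labs wavetables used here are in that coefficient form. The sketch below rebuilds one cycle of such a waveform by summing the harmonics, which shows what those numbers mean. It is an illustration of the idea, not the browser's implementation; the peak normalization is only roughly similar to what the browser does by default, and `wavetable_from_coefficients` is a hypothetical name.

```python
import math


def wavetable_from_coefficients(real, imag, size=2048, normalize=True):
    """Build one waveform cycle from Fourier coefficients.

    real[k] scales cos(k * phase) and imag[k] scales sin(k * phase).
    Index 0 (the DC term) is skipped, so the table has no offset.
    """
    if len(real) != len(imag):
        raise ValueError("real and imag must have the same length")
    table = []
    for n in range(size):
        phase = 2 * math.pi * n / size
        value = sum(real[k] * math.cos(k * phase) + imag[k] * math.sin(k * phase)
                    for k in range(1, len(real)))
        table.append(value)
    if normalize:
        # Scale so that the loudest sample has magnitude 1.
        peak = max(abs(v) for v in table)
        if peak > 0:
            table = [v / peak for v in table]
    return table


# Odd sine harmonics with 1/k amplitudes approximate a square wave.
harmonics = 16
real = [0.0] * harmonics
imag = [1.0 / k if k % 2 == 1 else 0.0 for k in range(harmonics)]
square_ish = wavetable_from_coefficients(real, imag, size=512)
```

Changing which harmonics are present, and how strong they are, is what makes one table sound like an organ and another like a brass instrument.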

-

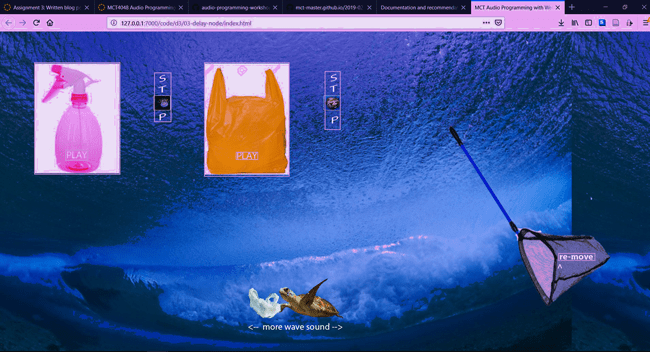

Documentation and recommendations from the latest Portal Jam

As the Portal is still in its infancy, pushing and exploring its technical possibilities is an ongoing process. We still encounter various issues while seeking a smooth and standardized setup of the signal routing and performance space. In the end, it is about optimizing the telematic experience without getting stuck in technicalities.

-

How to stream content from the portal

In this blog post, we will try to explain in more detail how these streams have been set up using OBS (Open Broadcaster Software) and YouTube Live while connected between Trondheim and Oslo. This can be of use for anyone looking to set up a co-located stream of a speaker or performance.

-

The Sounds We Like

For our first lesson in the Sonification and Sound Design course, we were asked to give a short presentation of a sonification or sound design of our choice. It was interesting to see the variety of examples among our classmates. Each of us brought a unique example and explained what it is about, why we chose it, and how it relates to our work in the MCT program.

-

Portal Jam 23 January 2019, Documentation from Oslo Team

On the 23rd of January, we tested jamming together through the Portal.

-

Christmas jazz concert between Trondheim and Oslo

A short trailer from the multi-cam setup in Oslo. For now, the sound from the GoPro camera mounted on the keyboard will have to do.

-

A-team - Getting the portal up and running...again

Final week before Christmas. After months of experimentation with microphones, lectures with _TICO_, workshops with _Zoom_, jams with _LOLA_ and routing on the _Midas_ mixer, the portal is in disarray.

-

Mutual Concert Between Oslo and Trondheim - Personal Reflections

The mutual concert between Oslo and Trondheim at the schools on November 27th, and its successful result, marked the end of a long process in which we were trained in the many aspects of playing together over the portal. We have learned about sound and acoustics, amplification and mixing, video, audio and network systems, cognition, perception and, last but not least, teamwork. I feel that the team worked very well and pulled through despite all the technical difficulties. In a way, I feel we all took part in a small historical event, where two groups of high-school students, in two separate locations, managed to play together.

-

DSP Workshop Group C

During our DSP workshop we were introduced to several tools and techniques for creating, manipulating and controlling sound. We started exploring Csound and waveguides. We learned a bit about the code in Csound, setting inputs and outputs and rendering the result to audio files.

-

Group B, DSP workshop

The four-day DSP workshop introduced some basic Digital Signal Processing techniques. We were given examples in Csound that focused on physical modeling of a string, and on how to use Csound as a DSP tool. The exercises allowed us to take ownership of the code by modifying it and creating new sounds. We used simple operations like addition and multiplication to process the digital signals. We enjoyed experimenting with digital waveguides to model sound travelling through strings, and manipulating reverb, delay, envelopes, etc. The Trondheim team also built an instrument from a piece of wood connected to a contact mic and used it in some of the tasks.
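The core idea of a plucked-string model, a delay line whose output is filtered and fed back into itself, needs nothing more than the addition and multiplication mentioned above. The sketch below is the Karplus-Strong algorithm, a simple relative of full digital waveguide string models, written in Python rather than the Csound used in the workshop. The damping value, sample rate and function name are illustrative assumptions.

```python
import random


def karplus_strong(frequency, duration, sample_rate=44100,
                   damping=0.996, seed=0):
    """Plucked string: a noise-filled delay line with a lossy feedback loop.

    The delay-line length sets the pitch (about sample_rate / period Hz);
    averaging adjacent samples acts as a gentle low-pass filter, so high
    frequencies die out first, much like on a real string.
    """
    rng = random.Random(seed)
    period = int(round(sample_rate / frequency))  # delay-line length in samples
    # The "pluck": fill the delay line with white noise.
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(int(duration * sample_rate)):
        i = n % period
        j = (n + 1) % period
        out.append(line[i])
        # Feedback: average two neighbouring samples (addition), then scale
        # by the damping factor (multiplication), and write back into the line.
        line[i] = damping * 0.5 * (line[i] + line[j])
    return out


samples = karplus_strong(441.0, 0.5)  # half a second of a string near 441 Hz
```

Lowering `damping` shortens the decay, while a longer delay line lowers the pitch; both are the kinds of parameters worth exploring when modifying such a model.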

-

A-team - DSP workshop blog

In the final DSP class we brought it all together and performed our combined Csound-based creations! With the day to develop our instruments, we prepared for performing with the other groups at 2 o’clock. After finding our respective rooms, we had an A-Team meeting on how to finish off our sounds and devise a plan of action for our performance.

-

Group B, LoLa multichannel audio

The objective this week was very clear from the start, we had to set up multichannel audio for LoLa. There was just one problem: our great LoLa supervisor, Anders, was sick, and we were left to figure out things on our own. This was of course a great challenge, but we like challenges. It might take a little more time, but it's another experience when you are forced to try and fail on your own. Often you also learn much more when you're not being fed all the answers from an expert.

-

MCT Heroes

During our Human-Computer Interaction seminar, Anna Xambó asked us to create a blog post about notable people in music technology who inspire us. Here are the submissions from the class.

-

Exhibition 'Metaverk' in Trondheim: DSP, Csound orchestras and bone feedback

Once you enter Metamorph, an art and technology space in the old town of Trondheim (Bakklandet), you will immediately become part of the generative soundscape of the exhibition 'Metaverk'.

-

A-team - week 44

It is very important, and professional, to keep the signal chain physically clear and the cabling clean and tidy. We therefore decided to tidy up the portal, making it easier for everybody to see the signal chain and to troubleshoot. This matters especially given how MCT students are supposed to work together: three teams take turns, week by week, being in charge of modifications and running the portal. It will also help a lot if we need to modify or move the components in the future; otherwise it would be very hard to follow what is what.

-

Group B, Short cables and LoLa

A few weeks ago we worked on setting up LoLa in the portal. This blogpost got a bit delayed, but we are back!

-

A two week wrap-up of Group C