Video latency: definition, key concepts, and examples

Introducing Video Latency

The aim of this blog post is to explain and clarify concepts and processes rather than to introduce new ideas or recommendations. Audio latency and audio-video synchronization are not discussed here.

Let’s start at the beginning. Video latency is the time between the moment a frame is captured and the moment that same frame is displayed.

The total delay between source and viewer is called glass-to-glass (or end-to-end) latency. Other terms, such as “capture latency” or “encoding latency”, refer only to the lag added at a specific step of the workflow.
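To make the definition concrete, here is a minimal Python sketch. It assumes each frame carries two timestamps read from one shared clock: when the frame was captured and when the same frame appeared on the viewer’s screen. The `Frame` class, its field names, and the timestamp values are hypothetical, chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """A video frame tagged with hypothetical capture and display timestamps (seconds)."""
    frame_id: int
    captured_at: float   # when the camera captured the frame
    displayed_at: float  # when the viewer's screen showed the same frame


def glass_to_glass_ms(frame: Frame) -> float:
    """End-to-end latency: display time minus capture time, in milliseconds."""
    return (frame.displayed_at - frame.captured_at) * 1000.0


# Hypothetical timestamps from a single shared clock, for illustration only.
frames = [
    Frame(0, captured_at=10.000, displayed_at=10.120),
    Frame(1, captured_at=10.040, displayed_at=10.165),
]
for f in frames:
    print(f.frame_id, round(glass_to_glass_ms(f), 1))
```

In practice, capture and display usually happen on different machines whose clocks are not synchronized, which is why indirect methods such as filming a clock are popular.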

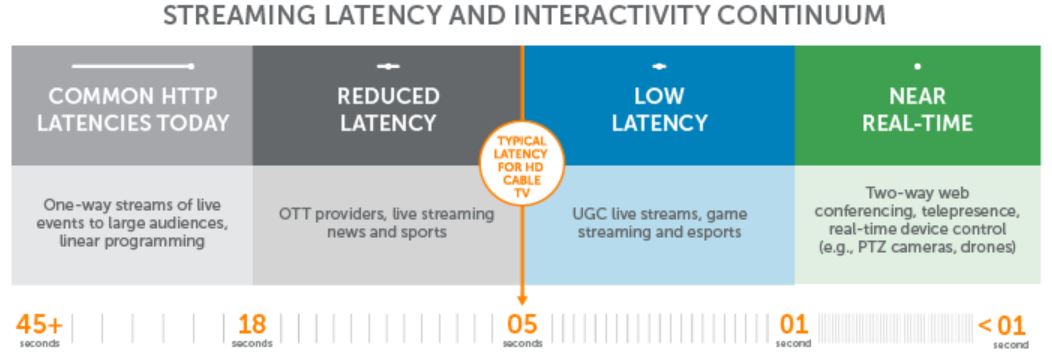

Each use case has its own latency requirements. Some one-way streams of live events to large audiences can tolerate up to 45 seconds of delay without serious consequences, whereas for a live football match, for example, that much delay would be a problem (think of social media spoiling an important goal you haven’t seen yet…).

Low latency matters even more in two-way video conferences, real-time remote control of devices, and, of course, telematic performances (think about playing together… and missing all the visual cues and feedback!).

Live Streaming

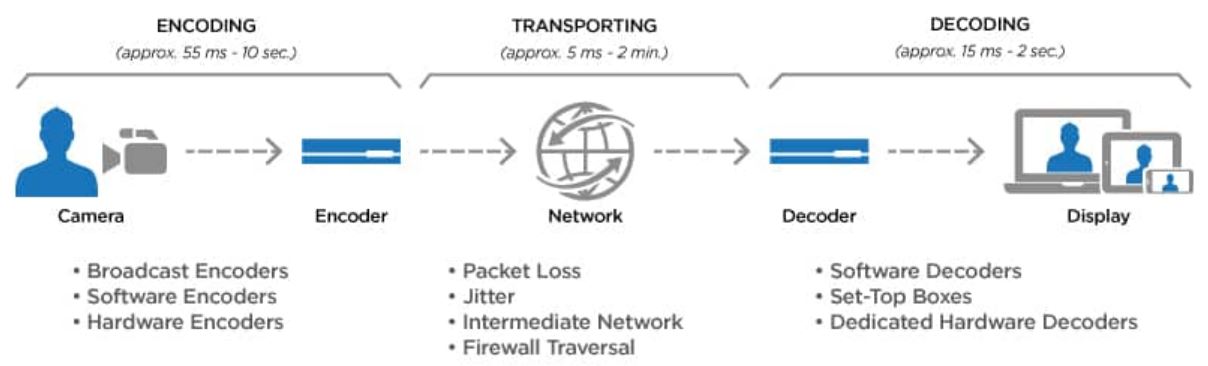

Let’s quickly go through the components of the streaming chain that matter most for video latency, that is, the ones that usually add the most lag.

Video encoding is the process of compressing raw video so that it can be transported over the internet. At this step, the encoder must compress the content enough to fit the available network bandwidth.
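As a rough illustration of fitting content to the bandwidth, the average number of bits the encoder may spend on each frame is the target bitrate divided by the frame rate. The sketch below assumes, hypothetically, that the encoder targets 90% of the available link capacity to leave headroom; real rate controllers also vary the budget from frame to frame, so this shows only the average.

```python
def target_bitrate_bps(link_capacity_bps: float, headroom: float = 0.1) -> float:
    """Bitrate to aim for, leaving a fraction `headroom` of the link unused (assumed policy)."""
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    return link_capacity_bps * (1.0 - headroom)


def bits_per_frame(bitrate_bps: float, fps: float) -> float:
    """Average bit budget per frame at a given bitrate and frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return bitrate_bps / fps


# Hypothetical link: 5.5 Mbit/s available, video at 30 fps.
rate = target_bitrate_bps(5_500_000)
budget = bits_per_frame(rate, 30)
print(f"target {rate / 1e6:.2f} Mbit/s -> about {budget / 8 / 1000:.1f} kB per frame")
```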

There are two types of video encoding: file-based and live. In file-based encoding, encoders compress video content so that it takes up less storage space and is easier to transfer. Since the video files are not streamed live, latency is rarely a key concern here.

Live video encoding compresses real-time video and audio content before it is streamed, significantly reducing the required bandwidth while maintaining picture quality. However, depending on the type of encoder used, compressing live video can add to the glass-to-glass latency and degrade the overall viewing experience.
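One common way a live encoder adds latency is by holding frames before emitting output, for example to look ahead for better compression decisions or to reorder frames. Each held frame adds one frame interval (1/fps seconds) of delay. The function below is a minimal sketch of that arithmetic; the buffer sizes are hypothetical, since the actual amount depends on the encoder and its settings.

```python
def buffering_latency_ms(buffered_frames: int, fps: float) -> float:
    """Delay added when an encoder holds `buffered_frames` frames before output.

    Each buffered frame adds one frame interval (1 / fps seconds).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return buffered_frames * 1000.0 / fps


# Hypothetical buffer sizes, for illustration only.
for n in (0, 1, 10, 40):
    print(f"{n:>2} buffered frames at 30 fps -> {buffering_latency_ms(n, 30):.1f} ms")
```

This is one reason low-latency encoder modes usually avoid lookahead: at 30 fps, even ten buffered frames already add a third of a second.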

Video decoding is the opposite of encoding: the decoder decompresses the stream. It can output uncompressed video over SDI for further processing, or over HDMI for display directly on a screen.

To keep latency low in a video streaming workflow, it’s important to minimize it at every step, because delay added at one step cannot be recovered later: if the video encoder adds latency, there is no way to “catch up” on that delay further down the chain.
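The point that delay cannot be recovered can be shown with a small latency-budget sketch: per-stage latencies only accumulate, so the remaining budget never grows. The stage names, latency values, and the 150 ms budget are hypothetical placeholders, not measurements.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative, not measured).
STAGES = [
    ("capture", 17.0),
    ("encode", 35.0),
    ("network", 60.0),
    ("decode", 20.0),
    ("display", 17.0),
]


def remaining_budget(stages, budget_ms):
    """Yield (stage, cumulative_ms, remaining_ms) after each stage.

    Latencies only accumulate: no later stage can give back time
    spent by an earlier one, so `remaining_ms` never increases.
    """
    total = 0.0
    for name, latency in stages:
        total += latency
        yield name, total, budget_ms - total


for name, total, left in remaining_budget(STAGES, budget_ms=150.0):
    status = "ok" if left >= 0 else "over budget"
    print(f"{name:<8} cumulative={total:6.1f} ms  remaining={left:6.1f} ms  {status}")
```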

Codecs

Another critical step that adds to the glass-to-glass latency is compressing and decompressing data into files or real-time streams. This is done by codecs, which implement video compression standards. The term codec is a portmanteau of enCOder and DECoder.

Most codecs use “lossy” compression: besides removing redundant spatial and temporal information, they discard detail that viewers are unlikely to notice, so the decoded picture is not identical to the source. “Lossless” compression keeps the picture identical to the original source, but it reduces file and stream sizes by a much smaller amount.

Codecs for live video (mainly H.264/AVC and H.265/HEVC) can reduce raw video data by as much as a thousand times, saving much-needed bandwidth. For example, a typical uncompressed HD stream runs at about 1.5 gigabits per second, while the compressed version used for live broadcast television is around 5 megabits per second.
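The figures above can be checked with a quick back-of-the-envelope calculation. The sketch assumes 1920x1080 video at 30 frames per second with 24 bits per pixel (8 bits for each of three color channels, no chroma subsampling). Under those assumptions the raw stream is about 1.5 Gbit/s, and compressing it to 5 Mbit/s corresponds to a ratio of roughly 300:1.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Bitrate of uncompressed video: pixels per frame x bits per pixel x frames per second."""
    return width * height * bits_per_pixel * fps


# Assumptions: 1080p, 30 fps, 8 bits per channel x 3 channels (24 bits per pixel).
raw = raw_bitrate_bps(1920, 1080, 30, 24)
compressed = 5_000_000  # ~5 Mbit/s, the broadcast figure quoted above

print(f"raw: {raw / 1e9:.2f} Gbit/s")                 # raw: 1.49 Gbit/s
print(f"compression ratio: {raw / compressed:.0f}:1")  # compression ratio: 299:1
```

Chroma subsampling (for example 4:2:0, common in broadcast) lowers the raw figure, while 10-bit video or higher frame rates raise it, so the exact ratio depends on the source format.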

Network transport protocols also affect the end-to-end latency. Different protocols introduce different amounts of latency into the streaming workflow, so for live applications a transport protocol with the lowest possible latency should be chosen. Encrypting data for security adds further lag, and so do packet loss and the error-correction methods used to recover from it.
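To see why packet recovery costs latency, consider a simplified retransmission (ARQ) model: each attempt to re-request and resend a lost packet takes about one network round trip, so the receiver must buffer roughly one round-trip time per allowed retransmission. The model, the 40 ms round-trip time, and the 2% loss rate are assumptions for illustration; real protocols such as SRT have their own tuning rules.

```python
def arq_buffer_ms(rtt_ms: float, max_retransmissions: int) -> float:
    """Approximate extra buffering needed to allow `max_retransmissions` recovery attempts.

    Simplified model (an assumption): each retransmission costs about one round trip.
    """
    return max_retransmissions * rtt_ms


def residual_loss(loss_rate: float, max_retransmissions: int) -> float:
    """Probability that a packet is still missing after all attempts.

    Assumes every transmission is lost independently with probability `loss_rate`.
    """
    return loss_rate ** (max_retransmissions + 1)


# Hypothetical network: 40 ms round trip, 2% packet loss.
for k in range(4):
    print(f"{k} retransmissions: ~{arq_buffer_ms(40, k):.0f} ms extra, "
          f"residual loss {residual_loss(0.02, k):.1e}")
```

Each extra retransmission allowed makes unrecovered losses far rarer but adds another round trip of delay, which is the latency/reliability trade-off in a nutshell.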

Depending on the use case, either image quality or low latency will take priority. For applications where latency is critical, such as telematic performances, picture quality can often be traded for lower latency. If video quality matters most, on the other hand, one has to accept extra latency. Ultimately, the goal is to find the combination of bitrate, picture quality, and latency settings that gives the best live experience on the network available.

Practical examples from the MCT Portals

Protocols

On a daily basis we use Zoom to communicate between Trondheim and Oslo, at least visually. For better performance, Zoom combines two technologies:

- The Advanced Video Coding (AVC) codec encodes quickly and is very efficient for HD video. However, as demand for 4K grows, it is slowly being replaced by the High Efficiency Video Coding (HEVC) codec, which can deliver the same quality at roughly half the bitrate (at the cost of significantly more processing power).

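- FFmpeg is an open-source, command-line multimedia framework for encoding, decoding, and converting a wide variety of media formats (both audio and video). Its name combines “FF” (fast forward) with MPEG, the family of video standards, but FFmpeg itself is software that implements many codecs rather than a codec or a successor to MPEG.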
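As an example of how FFmpeg is typically driven from code, the sketch below builds (but does not run) an ffmpeg command that encodes a synthetic test pattern with either AVC (libx264) or HEVC (libx265) using low-latency settings. The `-preset ultrafast` and `-tune zerolatency` options exist in FFmpeg’s libx264 and libx265 wrappers; the helper function, test source, bitrate, and UDP address are hypothetical choices, and running the command requires an FFmpeg build that includes those encoders. It is not meant to reflect Zoom’s internal configuration.

```python
def low_latency_ffmpeg_cmd(codec: str, bitrate: str, output: str) -> list[str]:
    """Build (but do not run) an ffmpeg command for a low-latency test stream.

    codec: "h264" (AVC, via libx264) or "hevc" (HEVC, via libx265).
    """
    encoders = {"h264": "libx264", "hevc": "libx265"}
    if codec not in encoders:
        raise ValueError(f"unsupported codec: {codec}")
    return [
        "ffmpeg",
        "-f", "lavfi", "-i", "testsrc=size=1280x720:rate=30",  # synthetic test pattern as input
        "-c:v", encoders[codec],
        "-preset", "ultrafast",  # fastest, least compression-efficient encoder preset
        "-tune", "zerolatency",  # turns off lookahead and frame reordering that add delay
        "-b:v", bitrate,
        "-f", "mpegts", output,
    ]


cmd = low_latency_ffmpeg_cmd("h264", "5M", "udp://127.0.0.1:1234")
print(" ".join(cmd))
```

To actually run it, the list can be passed to `subprocess.run(cmd, check=True)`. Swapping `"h264"` for `"hevc"` is the only change needed to compare the two codecs under the same settings.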

Both the Trondheim and the Oslo (UiO) portals are equipped with two very good cameras, the Logitech Pro and the Minrray PTZ, both of which support AVC. The Minrray PTZ camera even supports the more efficient HEVC (too bad Zoom doesn’t support it).

Once a semester or so, for our awesome telematic performances, we need to set up a stream that feeds several camera perspectives (and audio!) to the Internet, mostly to YouTube. For this we use OBS Studio, which also uses AVC. For transport it uses SRT (Secure Reliable Transport), an open-source streaming protocol that supports encryption and uses packet recovery to maintain high quality over unreliable networks while keeping the added latency low.

Video Latency in the MCT Portals

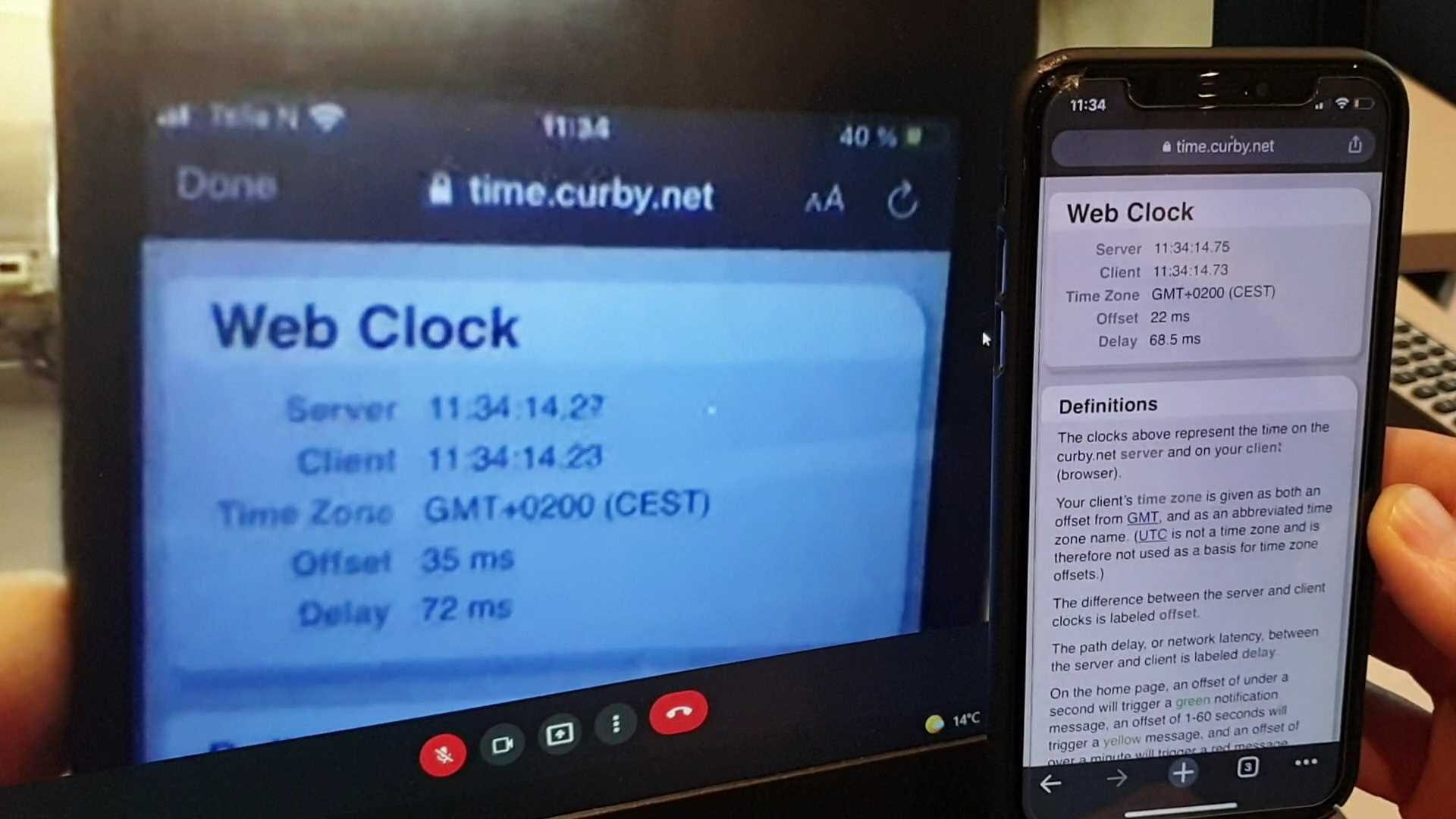

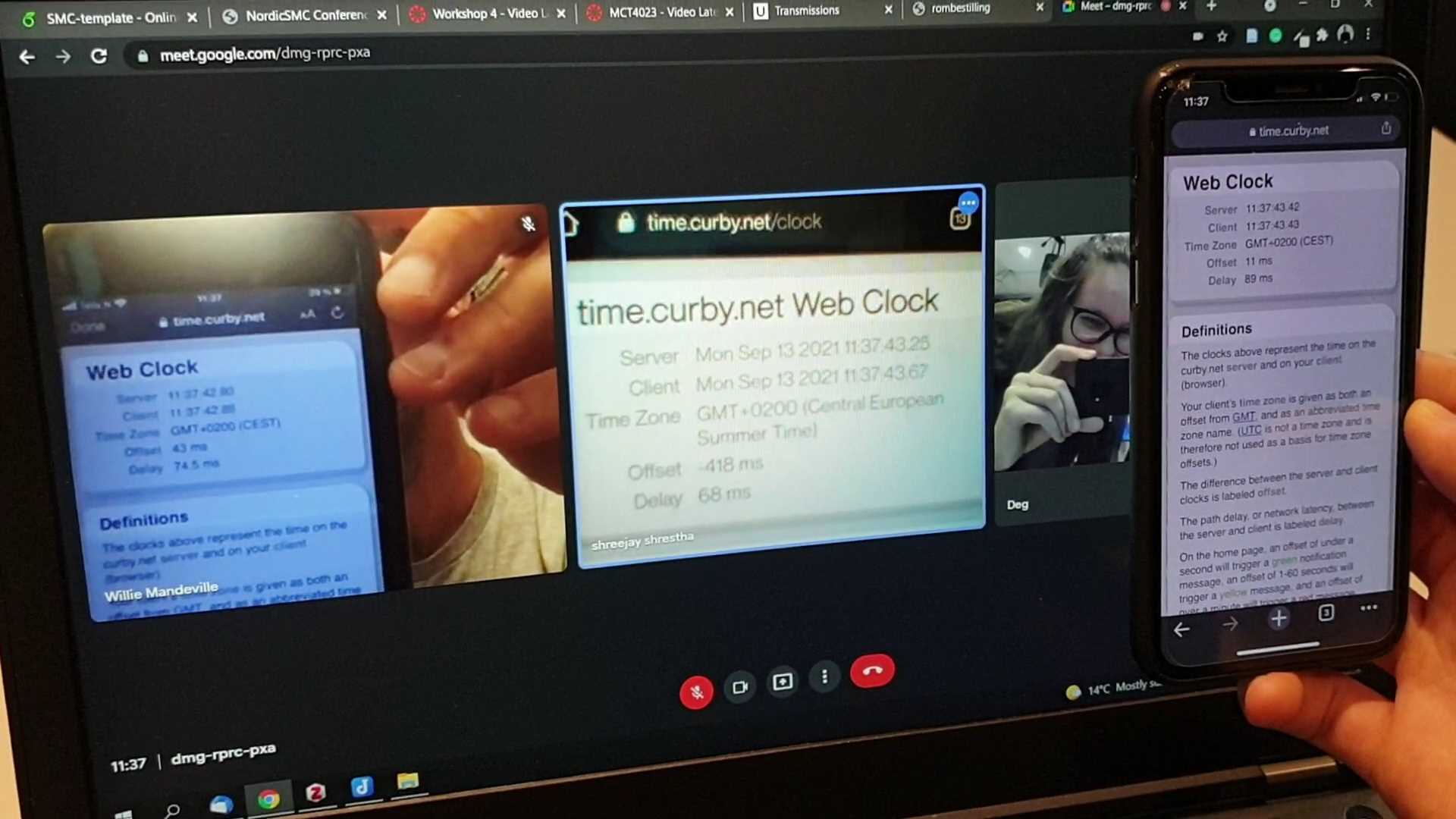

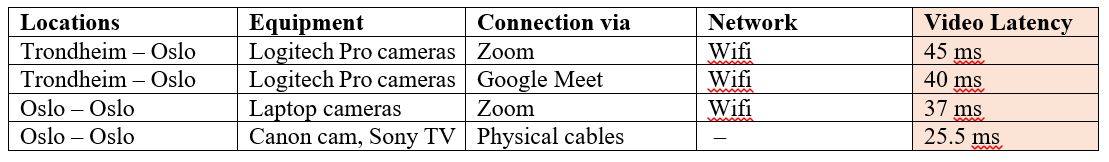

Using a fun method, filming a web clock from several locations and calculating the time difference, we measured some actual latencies; the results are shown in the images above.

What is this telling us? Firstly, the latency between Trondheim and Oslo is not that terrible! And secondly, even in the same room there’s latency to consider.
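The web-clock method boils down to simple arithmetic: in each photo, subtract the time shown on the delayed screen from the time shown on the reference clock, then average over several photos. The function name and the readings below are hypothetical, not the results shown in the images.

```python
from statistics import mean, stdev


def clock_latencies_ms(readings):
    """Latency per photo: reference clock time minus the time shown on the delayed screen.

    Each reading is (reference_clock_s, displayed_clock_s) taken from the same photo.
    """
    return [(ref - shown) * 1000.0 for ref, shown in readings]


# Hypothetical readings for illustration only (not our measured results).
readings = [(12.480, 12.440), (15.210, 15.175), (18.930, 18.885)]
lat = clock_latencies_ms(readings)
print([round(x, 1) for x in lat])
print(f"mean {mean(lat):.1f} ms, stdev {stdev(lat):.1f} ms")
```

Averaging matters because a single photo is limited by the clock’s displayed precision, the camera’s exposure time, and the screens’ refresh rate (a 60 Hz display updates only about every 16.7 ms).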

Closing remarks

Remember, when working with video latency, to:

- choose hardware that is engineered to keep latency as low as possible even when using a standard internet connection;

- choose equipment that supports high efficiency video codecs;

- make sure the transport protocols are suitable for your task;

- find the balance between latency, picture quality and bandwidth depending on the use case.

If you’re looking for inspiration for another latency test, consider this use case: during one of our MCT courses you need several camera perspectives, one for the lecturer, one for the class, and one for the mixer view. Measure the video latencies (and perhaps also the video quality) of all cameras and compare them. Based on your findings, decide which cameras should be placed where. Hint: it may be more important to have a high-quality stream of the mixer view than of the other angles…

As a last thought… have you considered that latency can be a good thing? A deliberate delay can, for example, keep obscenities from airing or give time for live subtitling and closed captioning.
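A deliberate delay like this can be modeled as a fixed-length queue of frames: each new frame pushes out the one that entered N frames earlier, which leaves N/fps seconds to act on a frame before it airs (for example, 150 frames at 30 fps gives 5 seconds). The `DelayLine` class below is a minimal, hypothetical sketch of that idea, not the design of any real broadcast delay unit.

```python
from collections import deque


class DelayLine:
    """Fixed delay of `delay_frames` frames: a simple model of a broadcast delay.

    Frames come out `delay_frames` pushes after they go in, which leaves a
    window to replace a frame (e.g. to censor it) or prepare captions before it airs.
    """

    def __init__(self, delay_frames: int):
        self._buffer = deque()
        self._delay = delay_frames

    def push(self, frame):
        """Add a frame; return the frame now due to air, or None while the buffer fills."""
        self._buffer.append(frame)
        if len(self._buffer) > self._delay:
            return self._buffer.popleft()
        return None

    def censor(self, predicate, replacement):
        """Replace every not-yet-aired frame for which predicate(frame) is True."""
        self._buffer = deque(replacement if predicate(f) else f for f in self._buffer)


# Hypothetical demo: a 3-frame delay with string "frames".
line = DelayLine(delay_frames=3)
aired = []
for i in range(8):
    out = line.push(f"frame{i}")
    if i == 4:
        # An operator spots a problem in frame 4 while it is still buffered.
        line.censor(lambda f: f == "frame4", "BLANK")
    if out is not None:
        aired.append(out)
print(aired)  # ['frame0', 'frame1', 'frame2', 'frame3', 'BLANK']
```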

Congratulations to all the MCT students and teachers who set up our portals: despite our constant complaining, the “worst” latency was still under 50 ms! Great job!

References and further reading

Eberlein, P. (n.d.). Understanding Video Latency. U.S. Tech. http://www.us-tech.com/RelId/1490479/ISvars/default/Understanding_Video_Latency.htm

Haivision. (n.d.). The Essential Guide to Video Encoding: From Video Compression and Codecs, to Latency and Transport Protocols. Retrieved November 1, 2021, from https://www.haivision.com/resources/white-paper/the-essential-guide-to-low-latency-video-streaming/

Nikols, L. (2021). Video Encoding Basics: What is Latency and Why Does it Matter? Haivision. https://www.haivision.com/blog/all/video-encoding-basics-video-latency/

Ubik, S., & Pospíšilík, J. (2021). Video Camera Latency Analysis and Measurement. IEEE Transactions on Circuits and Systems for Video Technology, 31(1), 140-147. doi:10.1109/TCSVT.2020.2978057

Wowza Media Systems. (2021). What Is Low Latency and Who Needs It? (Update). https://www.wowza.com/blog/what-is-low-latency-and-who-needs-it