In search of sounds

Are you looking for sounds to inspire your next project? There are many ways to find them: trying new physical instruments or new configurations of familiar ones, or finding or recording samples and turning them into something new. At synth.is you can discover new sounds by evolving their genes, either interactively or automatically.

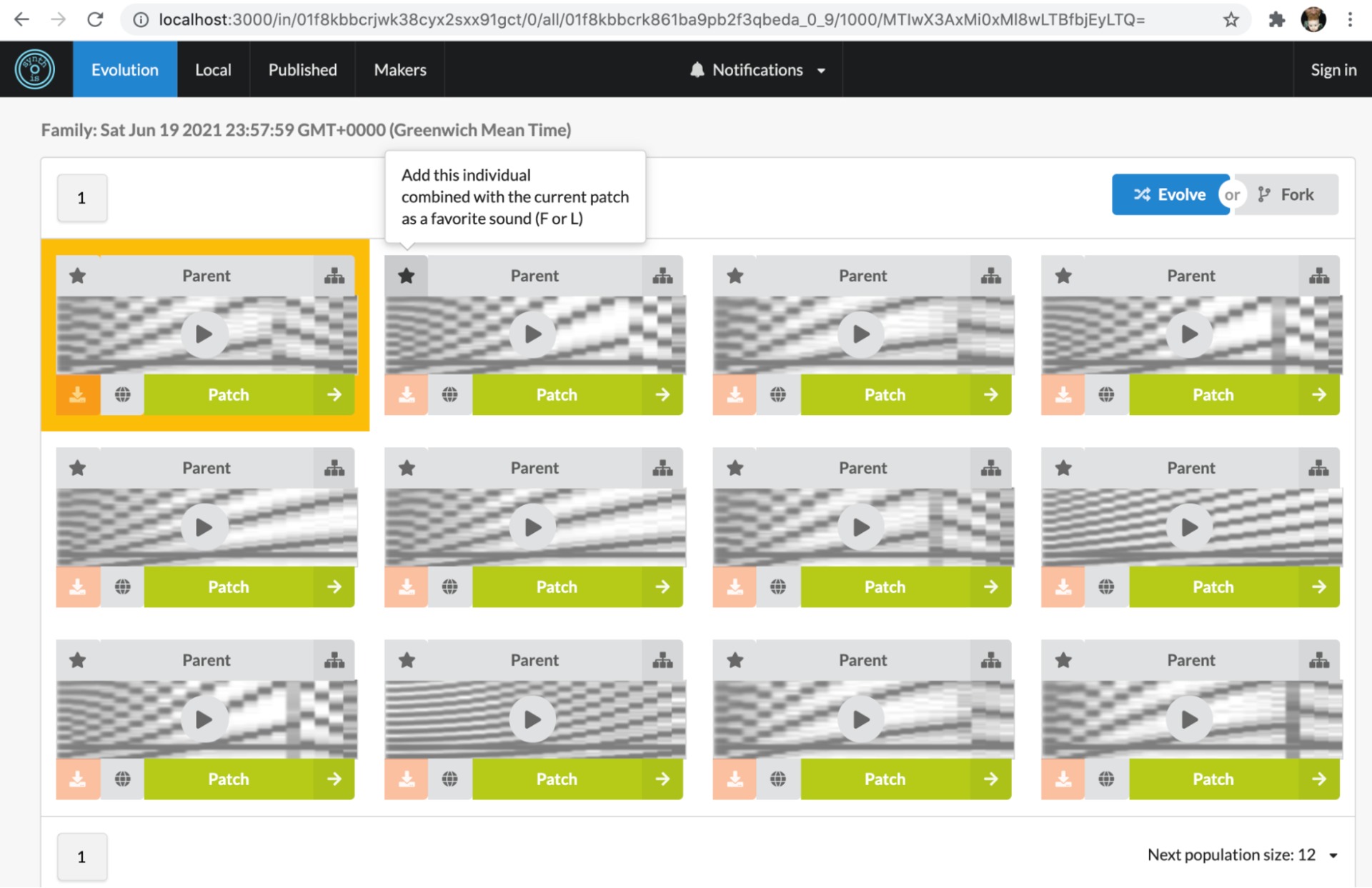

When evolving sound genes interactively, you’re presented with generations of individual sounds. You can preview (pre-listen to) each individual and choose the ones most suitable as parents for the next generation. This type of Interactive Evolutionary Computation has been employed before in different scenarios, such as evolving 2D images and 3D objects, and in an interactive media installation. The evolution can be approached from two angles: starting from scratch, where the first generations contain primitive individuals, or continuing evolution from published sounds. The latter approach lets evolvers build upon the progress made by others with fresh ears, in a way standing on the shoulders of giants. Either approach can be interesting, whether paving an evolutionary path from genesis or exploring in what sonic directions already published sounds can be taken.

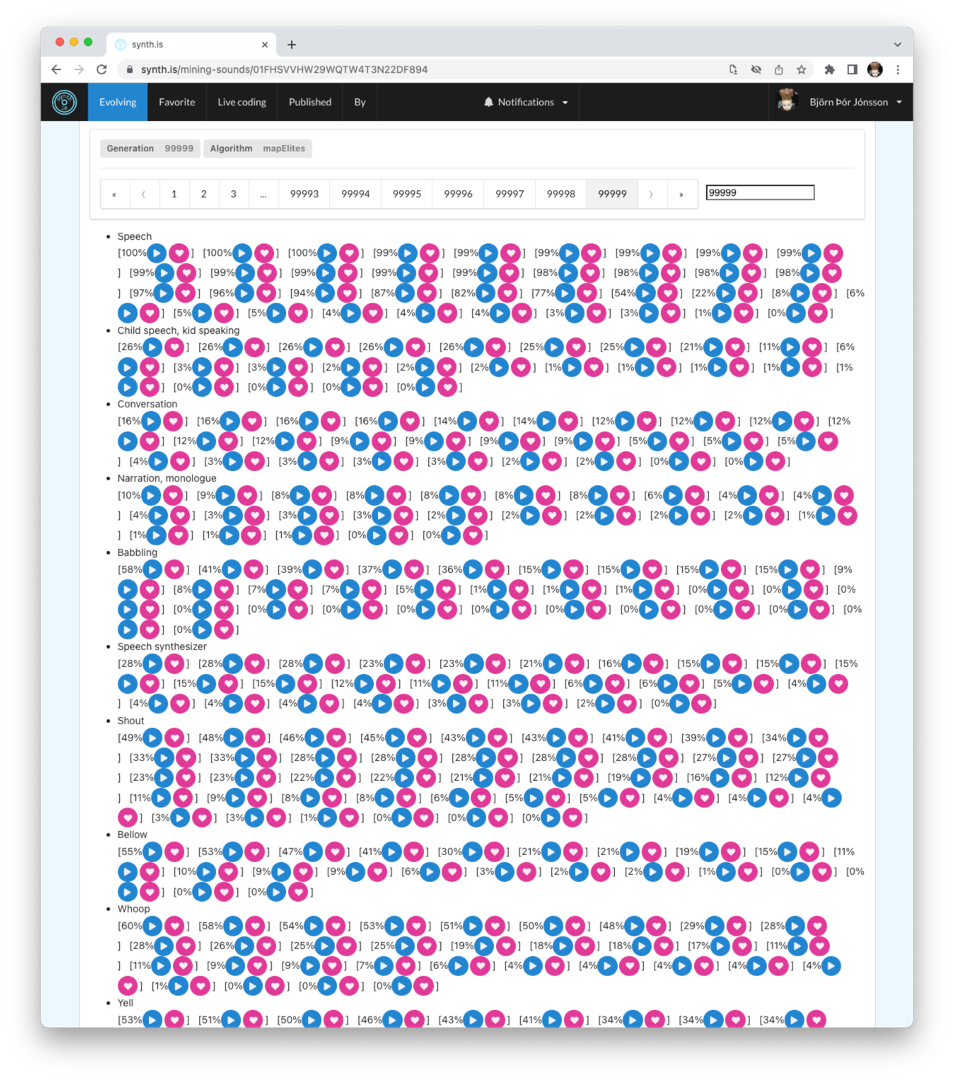
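
The select-and-breed loop described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the genome is a plain list of numbers (the genes at synth.is describe networks, not flat lists), the function names `mutate` and `next_generation` are made up for this sketch, and the listener's choice is replaced by a fixed stand-in.

```python
import random

def mutate(genome, rng, sigma=0.1):
    """Return a copy of the genome with Gaussian noise added to each gene."""
    return [g + rng.gauss(0.0, sigma) for g in genome]

def next_generation(parents, size, rng, sigma=0.1):
    """Breed a new generation from the individuals the listener selected."""
    if not parents:
        raise ValueError("select at least one parent")
    return [mutate(rng.choice(parents), rng, sigma) for _ in range(size)]

rng = random.Random(42)
# Starting from scratch: a first generation of primitive (all-zero) individuals.
population = [[0.0] * 4 for _ in range(6)]
for _ in range(3):
    # Stand-in for the listener's choice: here we simply take the first two.
    chosen = population[:2]
    population = next_generation(chosen, size=6, rng=rng)
```

Continuing from a published sound would amount to seeding `population` with that sound's genome instead of the primitive individuals.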

Automatic evolution of sounds employs search algorithms to allow the computer to discover novelty. The current implementation of automated search is supervised by pre-trained classifiers, so the discovered novelty is somewhat recognisable. Elite sounds for each class can be listened to and liked, just as during interactive evolution, adding those found interesting and useful to a catalogue of favourite sounds. Favourite sounds can also be published for others to use and continue evolving.

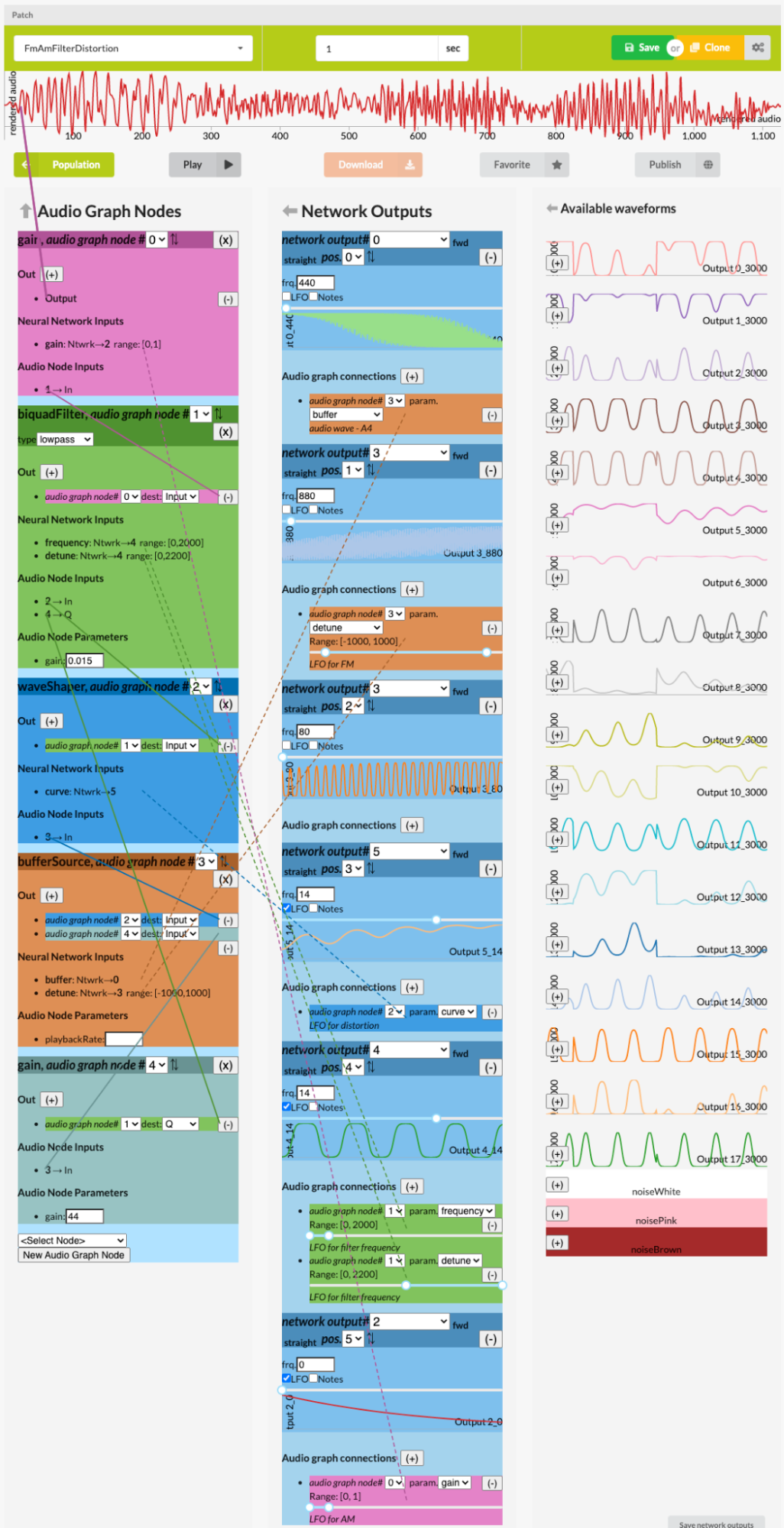
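
One way to picture a classifier-supervised search that keeps an elite per class is the sketch below. It is an assumption-laden illustration, not the algorithm used at synth.is, which this post does not name: `toy_classifier` is a hypothetical stand-in for a pre-trained classifier, the genome is a flat list of numbers, and the archive logic is loosely in the spirit of quality-diversity methods.

```python
import random

def toy_classifier(genome):
    """Hypothetical stand-in for a pre-trained classifier: (label, confidence)."""
    total = sum(genome)
    label = "bright" if total >= 0 else "dark"
    return label, min(1.0, abs(total))

def update_elites(elites, genome, classify):
    """Keep the genome if it is the most confident example of its class so far."""
    label, confidence = classify(genome)
    if label not in elites or confidence > elites[label][0]:
        elites[label] = (confidence, genome)
        return True
    return False

def search(iterations, rng, classify=toy_classifier, sigma=0.2):
    """Mutate randomly chosen elites and keep any child that beats its class elite."""
    elites = {}
    update_elites(elites, [0.0, 0.0, 0.0], classify)
    for _ in range(iterations):
        _, parent = rng.choice(list(elites.values()))
        child = [g + rng.gauss(0.0, sigma) for g in parent]
        update_elites(elites, child, classify)
    return elites

elites = search(200, random.Random(0))
```

The resulting `elites` dictionary plays the role of the per-class list of sounds that can then be auditioned and liked.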

The main sources of sound are pattern producing networks. Their output is routed through audio signal networks, which can be thought of as synthesiser patches. Those patches can be configured manually or evolved alongside the pattern producing networks.

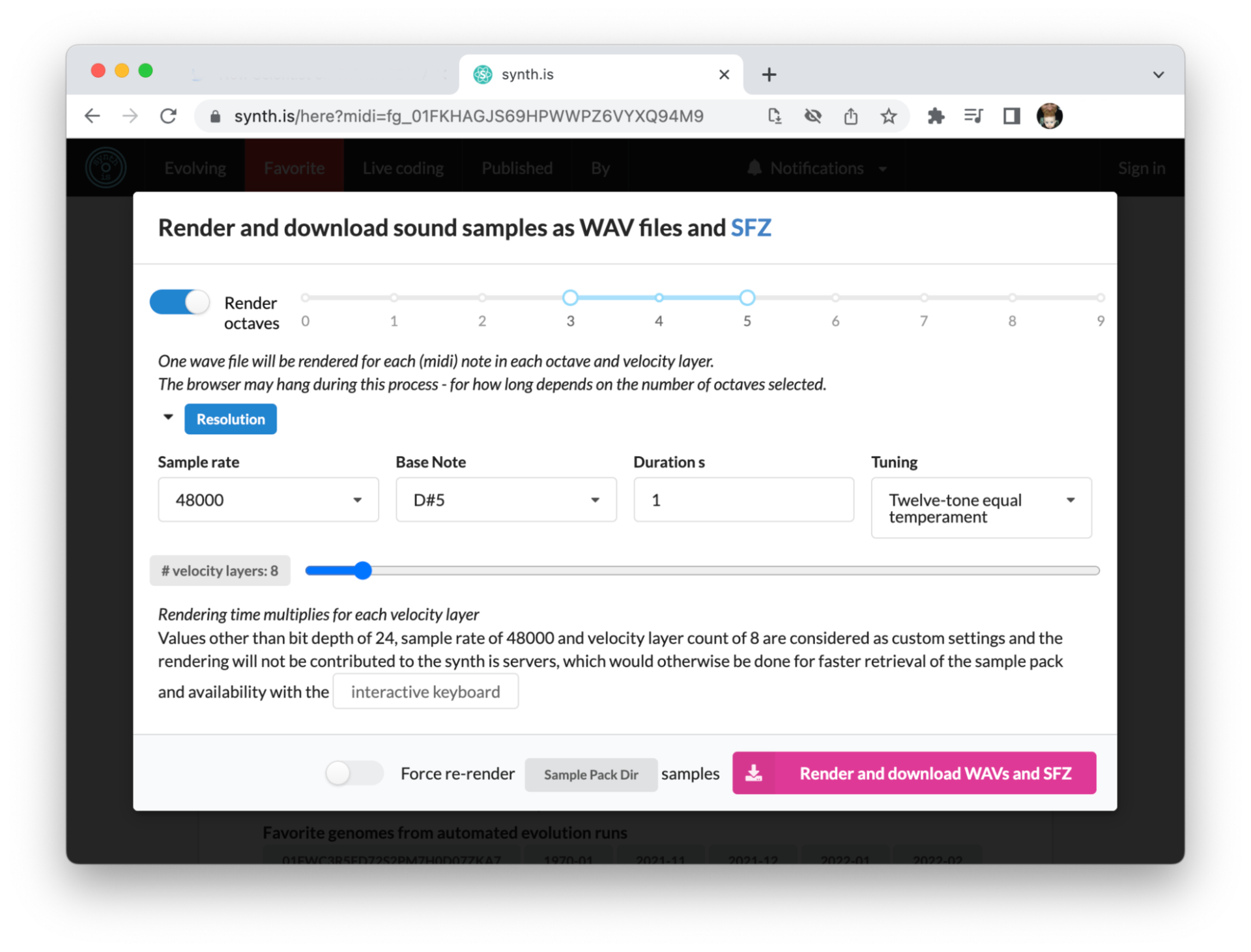
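
To make the idea of a pattern producing network feeding a patch more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the network is tiny, hand-written and of fixed shape (evolved networks would vary in structure), and the "patch" is reduced to a single gain stage.

```python
import math

def pattern_network(t, weights):
    """Tiny fixed-topology pattern network mixing periodic and smooth activations."""
    w1, w2, w3 = weights
    hidden_a = math.sin(2 * math.pi * w1 * t)   # periodic node
    hidden_b = math.exp(-(w2 * t) ** 2)         # Gaussian node, decays over time
    return math.tanh(w3 * hidden_a * hidden_b)  # output squashed into [-1, 1]

def render(weights, duration=0.01, sample_rate=48000):
    """Sample the network over time to obtain an audio buffer."""
    n = round(duration * sample_rate)
    return [pattern_network(i / sample_rate, weights) for i in range(n)]

def patch(samples, gain=0.5):
    """Stand-in for an audio signal network: here just one gain stage."""
    return [gain * s for s in samples]

buffer = patch(render(weights=(440.0, 50.0, 2.0)))
```

Changing the three weights changes the rendered waveform, which is the kind of variation an evolutionary process can explore.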

Favourite and published sounds can be rendered as sample-based instruments in the SFZ format. That format can be used directly in DAWs such as Bitwig Studio and the Renoise tracker, or with specialised plugins like sforzando and sfizz.

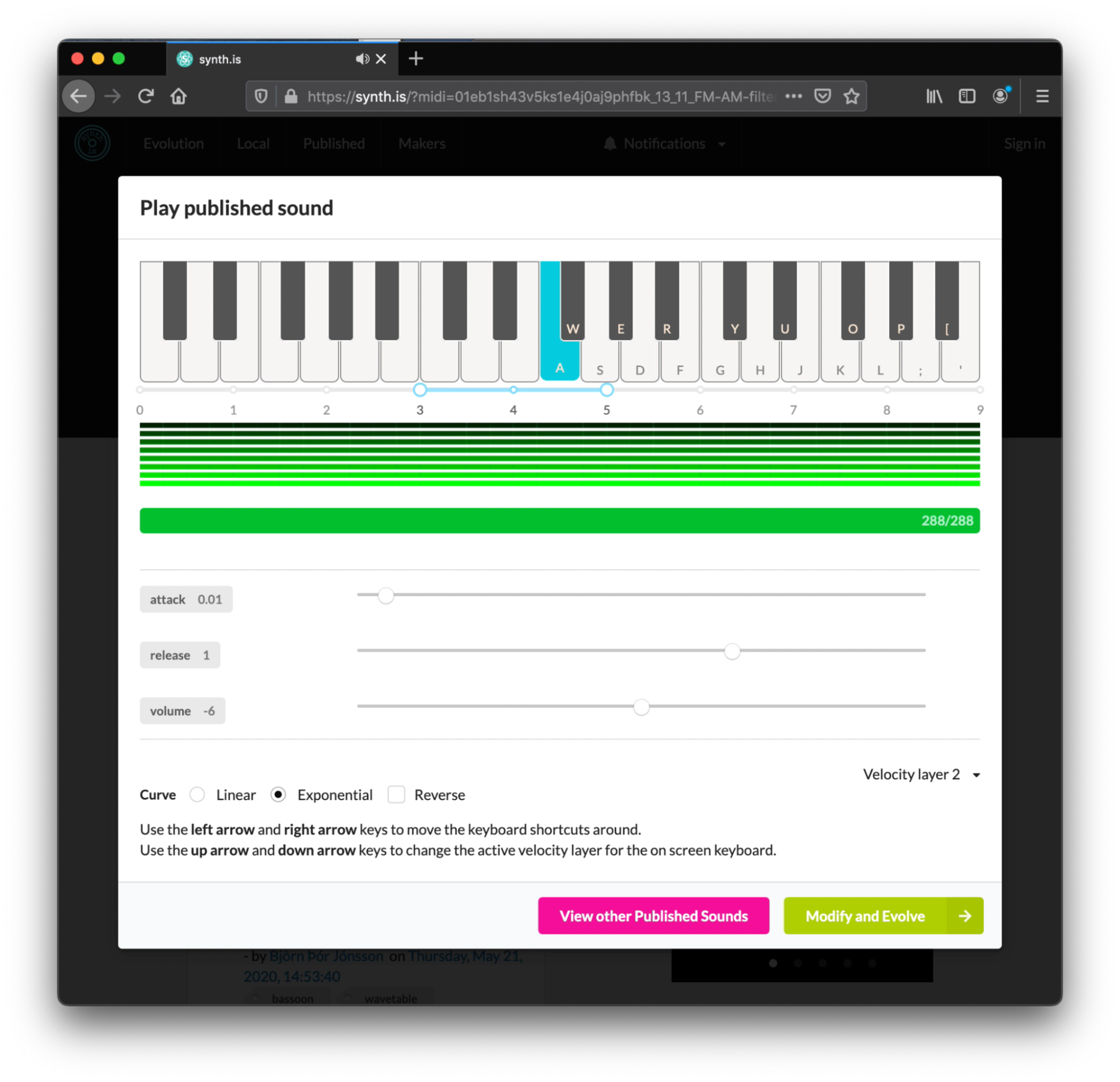

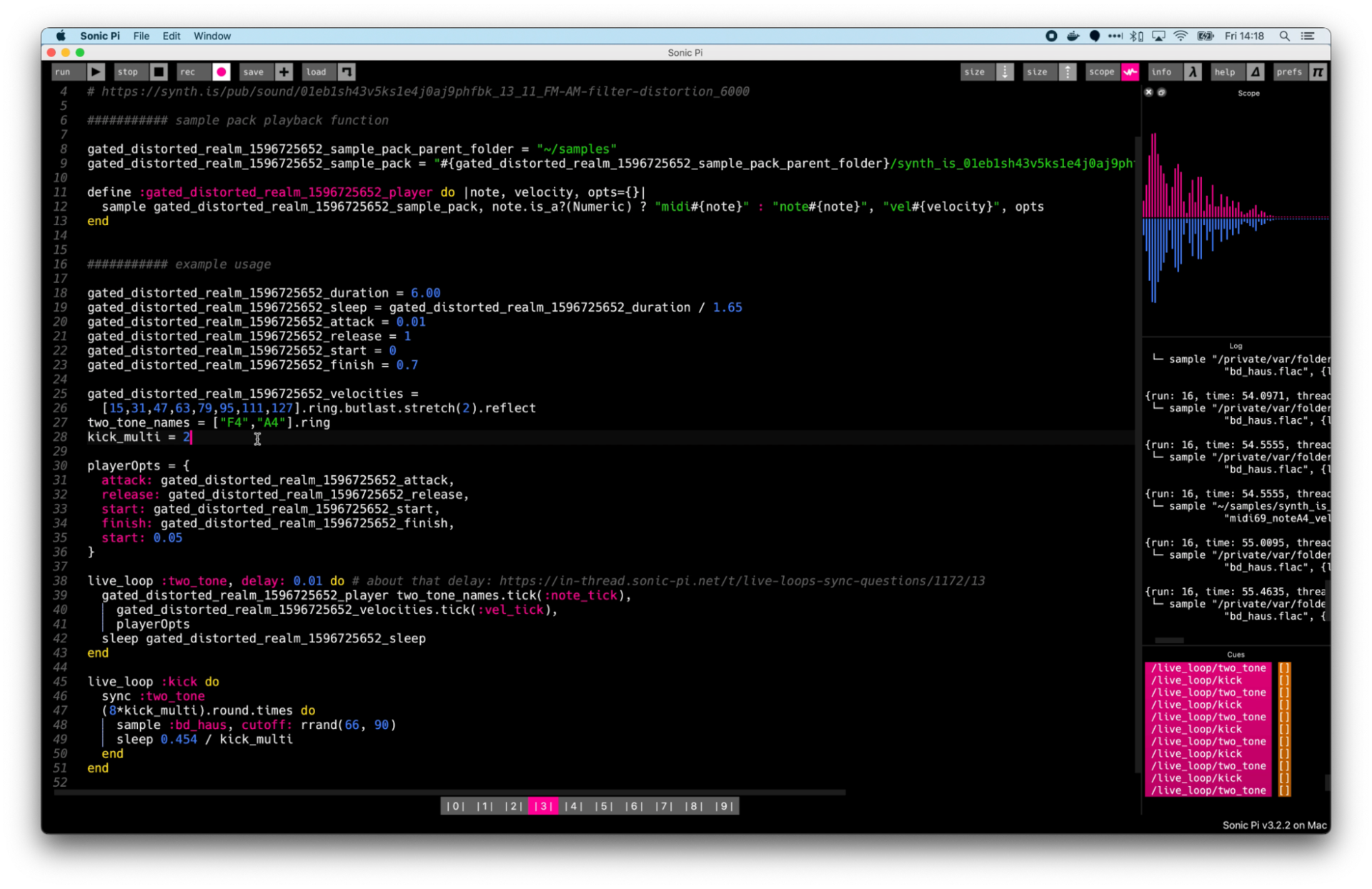

Various settings can be chosen for the rendering, such as the duration of each sample, the number of octaves and velocity layers, and the sample rate. Rendered sample instruments can be previewed in the web browser interface with an on-screen keyboard, which also supports Web MIDI, so you can try them out with your favourite hardware controller or DAW. Along with the SFZ instrument definition, a Sonic Pi live coding template can be downloaded with the rendered sample instrument.

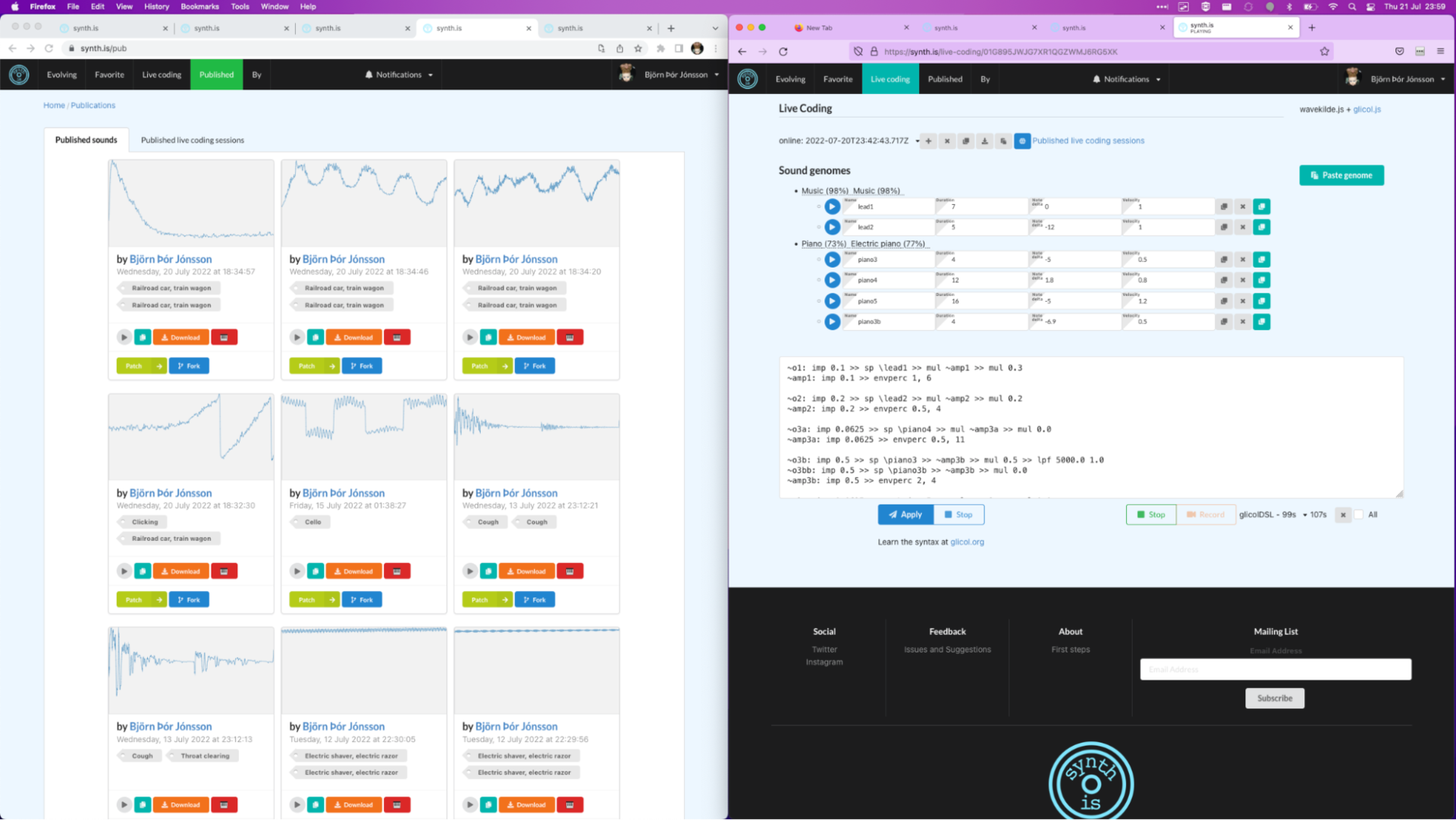
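
As a rough idea of how the octave and velocity-layer settings could map onto an SFZ instrument definition, the sketch below builds SFZ text in Python. The opcodes (`sample`, `lokey`, `hikey`, `pitch_keycenter`, `lovel`, `hivel`) are standard SFZ, but the layout of one sample per octave, the file naming and the `build_sfz` function are assumptions for this example rather than what synth.is actually exports.

```python
def build_sfz(name, octaves, velocity_layers, lowest_key=36):
    """Return SFZ text with one region per octave and velocity layer."""
    lines = ["<group>"]
    for octave in range(octaves):
        center = lowest_key + 12 * octave
        for layer in range(velocity_layers):
            # Split the MIDI velocity range 0..127 evenly across the layers.
            lovel = layer * 128 // velocity_layers
            hivel = (layer + 1) * 128 // velocity_layers - 1
            # Hypothetical file naming; a real export decides its own names.
            sample = f"{name}_k{center}_v{layer}.wav"
            lines.append(
                f"<region> sample={sample} lokey={center} hikey={center + 11} "
                f"pitch_keycenter={center} lovel={lovel} hivel={hivel}"
            )
    return "\n".join(lines) + "\n"

sfz_text = build_sfz("evolved", octaves=2, velocity_layers=2)
```

Writing `sfz_text` to a `.sfz` file next to the referenced samples would give a player such as sfizz something to load, assuming the sample files exist under those names.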

A recent addition to this sound evolution web interface is the integration of a live coding environment based on the Glicol engine. Underlying genes of favourite and published sounds can be copied to the clipboard (as a JSON text string) and pasted onto the live coding screen, where they can be rendered with the desired configuration and used directly in the accompanying live coding text area. Furthermore, code modification steps can be recorded during a live coding session, so they can be played back. This is a form of musical recording in which what is inscribed is not the audio signal but the symbols of the live coding, along with their temporal placement. The result is a JSON structure containing both the audio gene descriptors and their compositional arrangement, clocking in at a few kilobytes. That can be viewed as outperforming any audio compression.
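
The shape of such a recording can be sketched as follows. The field names (`genes`, `steps`, `t`, `code`) and the placeholder genome are hypothetical, the code strings are treated as opaque text, and full snapshots are stored where a real recorder might store smaller modification steps. The point is only to show why the result stays small next to raw audio.

```python
import json

def record_step(session, time_s, code):
    """Append a code snapshot with its time offset from the session start."""
    session["steps"].append({"t": time_s, "code": code})

def code_at(session, time_s):
    """Return the code active at a playback time (steps assumed in time order)."""
    active = ""
    for step in session["steps"]:
        if step["t"] <= time_s:
            active = step["code"]
    return active

# Hypothetical structure; the genome is a placeholder, not a real synth.is gene.
session = {"genes": {"sound_a": {"placeholder": True}}, "steps": []}
record_step(session, 0.0, "o: sin 440")
record_step(session, 8.0, "o: sin 440 >> mul 0.5")

encoded = json.dumps(session)
recording_bytes = len(encoded.encode("utf-8"))
# One minute of 16-bit stereo audio at 48 kHz, for comparison.
raw_audio_bytes = 60 * 48000 * 2 * 2
```

Playback then consists of walking through the steps in time and handing each snapshot to the live coding engine, with the sounds re-rendered from the stored genes.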

The project itself has evolved from applying those pattern producing networks to single-cycle oscillator waveform descriptions, to rendering sounds of any desired length that develop over time. Recent explorations into automated evolution of sounds will be investigated further, possibly utilising custom reference sounds and audio features of preferred sounds for navigating the sound space. New neighbourhoods in the sound space may be defined with unsupervised classification. Extraction of the core functionality behind synth.is into a reusable (NPM) library is underway. A command line interface (CLI) will demonstrate one possible use case. Stay tuned at https://twitter.com/wavekilde (a Mastodon / ActivityPub presence is under consideration : )