Experiencing Ambisonics Binaurally

During the MCT2022 Physical-virtual communication course we had two sessions where we explored multichannel and ambisonic decoding. The first session, on February 27th, was mainly about recording a four-channel A-format stream with an Ambisonic 360 sound microphone. We converted the A-format mic signal into a full-sphere surround sound format (Ambisonic B-format), which was then decoded to an eight-loudspeaker layout placed evenly around the portal space. For the decoding we used the AIIRADecoder from the free and open source IEM plug-in suite. Although the setup was not ideal, mostly because of the LOLA connection between the Trondheim and Oslo portals and the fact that the loudspeakers were not at the listener's head height, we did manage to get a 3D spatial sound stream between the two campuses.
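For readers curious about what the A-format to B-format conversion actually does, here is a minimal sketch of the classic sum/difference matrix for a tetrahedral microphone. It assumes the common capsule naming FLU, FRD, BLD, BRU (front-left-up, front-right-down, back-left-down, back-right-up); the capsule order and gain scaling of the microphone we used may differ, and real converters also apply frequency-dependent correction filters that are left out here. The function name `a_to_b` is hypothetical, and the output is returned in ACN channel order (W, Y, Z, X).

```python
def a_to_b(flu, frd, bld, bru):
    """Convert four tetrahedral capsule signals (A-format) to first-order B-format.

    Assumes capsules named front-left-up, front-right-down, back-left-down,
    back-right-up. No capsule-spacing correction filters are applied.
    Returns [W, Y, Z, X] (ACN order), each a list of samples.
    """
    w, y, z, x = [], [], [], []
    for a, b, c, d in zip(flu, frd, bld, bru):
        w.append(0.5 * (a + b + c + d))  # omnidirectional: all capsules summed
        x.append(0.5 * (a + b - c - d))  # front minus back
        y.append(0.5 * (a - b + c - d))  # left minus right
        z.append(0.5 * (a - b - c + d))  # up minus down
    return [w, y, z, x]


# A sound arriving equally at all four capsules ends up only in W.
print(a_to_b([1.0], [1.0], [1.0], [1.0]))
```

The W channel carries the omnidirectional pressure signal, while X, Y and Z are figure-of-eight components pointing front, left and up; a decoder such as the AIIRADecoder then turns these four channels into loudspeaker feeds.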

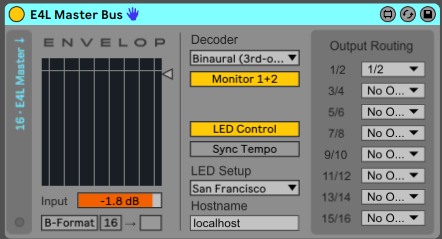

For our second session on April 24th, we focused on binaurally decoding the input from four large-diaphragm condenser microphones placed in a square formation. This required headphones, which give a clearer perception of the sound stream and a more immersive experience. This time the decoding was done with Envelop for Live (E4L), a free collection of spatial audio production tools. We created four audio tracks and placed the E4L Source Panner device on each of them. On a fifth audio track we placed the E4L Master Bus device and routed the output of all four microphone tracks into it. You can see our Ableton Live setup in the pictures below.

We were given time to experiment with this setup. Several class members started to whisper, talk loudly or sing into their dedicated microphones, trying to create unique sonic material for the listeners wearing headphones in Trondheim and Oslo. I seized the opportunity to play the saxophone with this ambisonic setup and fell back on the Giant Steps melody, which seems to be a recurring theme whenever I am asked to play something (have you checked out my Giant Steps Player already?).

I stood in the middle of the four-microphone setup and played into one mic at a time. During the first round of the melody, I played segments of the song into different mics, and during the second round I played (almost) every note into a different mic. The result is an audio clip where you can hear me play parts of the melody from four positions (Front-Right, Front-Left, Back-Right, Back-Left). I recommend listening to this clip with headphones so you can clearly identify the direction I am playing from (from the listener's perspective).

Listening to it again in a quiet environment at home, I wish I had had more time to experiment with playing longer tones while moving between the microphones (maybe a ballad would fit better next time?). I would have liked to hear my sound move through the spatial environment.

In any case, I decided to continue the experimentation at home with several sound effects, such as footsteps, a ticking clock and a marker writing. I placed all the sound samples on an audio track containing the E4L Source Panner device and mapped its Azimuth knob to a physical knob on my MIDI controller.
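To illustrate what turning that knob does, here is a minimal sketch of first-order ambisonic panning driven by a MIDI knob. It is not the E4L implementation: E4L can work at higher ambisonic orders, and its internal channel order and normalization are not assumed here. The sketch uses the AmbiX convention (ACN order, SN3D normalization, azimuth measured counterclockwise so +90° is to the listener's left), and the linear mapping of a 7-bit MIDI CC value (0 to 127) onto -180° to +180° is a hypothetical choice. Both function names are illustrative only.

```python
import math


def cc_to_azimuth(cc: int) -> float:
    """Hypothetical linear mapping of a 7-bit MIDI CC value to azimuth in degrees."""
    if not 0 <= cc <= 127:
        raise ValueError("MIDI CC values range from 0 to 127")
    return -180.0 + 360.0 * cc / 127.0


def encode_first_order(sample: float, azimuth_deg: float, elevation_deg: float = 0.0):
    """Encode one mono sample into first-order ambisonics (AmbiX: ACN order, SN3D).

    Azimuth is counterclockwise from the front (+90 = left); elevation is upward.
    Returns (W, Y, Z, X).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)  # left/right
    z = sample * math.sin(el)                 # up/down
    x = sample * math.cos(az) * math.cos(el)  # front/back
    return (w, y, z, x)


# Sweep the knob from one end to the other and print where a unit sample lands.
for cc in (0, 32, 64, 96, 127):
    az = cc_to_azimuth(cc)
    print(cc, round(az, 1), [round(c, 3) for c in encode_first_order(1.0, az)])
```

Because the source only ever changes its azimuth, W stays constant while the energy shifts between X and Y as the knob turns; the binaural decoder then renders those four channels for headphones.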

Give it a listen (with headphones, of course) and see what you think! The Envelop for Live tool pack includes a great collection of free devices that can really use space as a compositional element, and it will be interesting to see how this type of device can elevate my artistic output in the future.