The HySax - Augmented saxophone meant for musical performance

Background

As a saxophonist, a jazz musician and an improviser, I sense a growing need for an interactive music system that augments the harmonic and sonic possibilities of the saxophone. A lot of important work has been done over the last 20 years in the field of instrument augmentation, expanding both the sound of the instrument and the ways of interacting with it. Work in the field has been presented at NIME in 2006 with the Electronically-augmented saxophone, in 2008 with the Gluisax and its use of Open Sound Control (OSC), and in 2012 with the Gest-O Sax, which maps gestures to signal processing parameters. The most recent work was presented in 2019 with the HypeSax - Saxophone acoustic augmentation (Flores et al.). The HypeSax has many unique features and a creative design, but the one that excites me the most is the built-in speaker within the saxophone bell, advancing into ‘instrumental augmentation as a fully integrated acoustic/electric hybridization with a standalone system’.

The idea behind my interactive music system, the HySax, is to create a background or accompaniment layer that interacts with the player: body gestures control the sound parameters of a synth pad and the delay parameters applied to the saxophone sound.

The system

The interactive music system that I worked on contains three sensors:

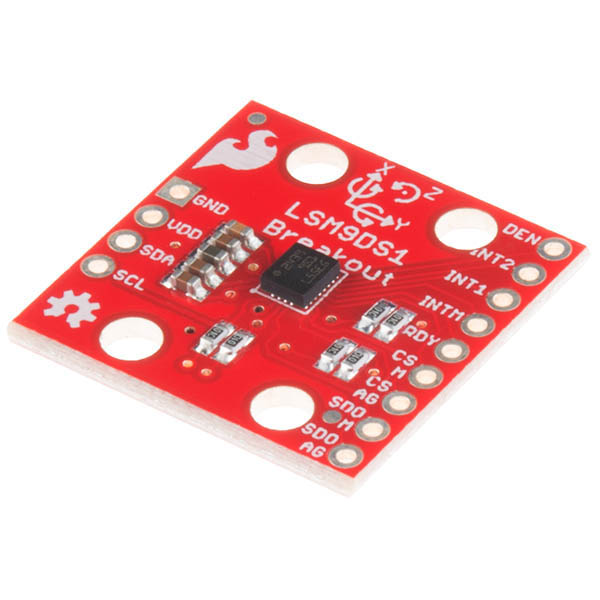

- 9DoF IMU – A versatile, motion-sensing system-in-a-chip which houses a 3-axis accelerometer, 3-axis gyroscope, and 3-axis magnetometer that gives us nine degrees of freedom.

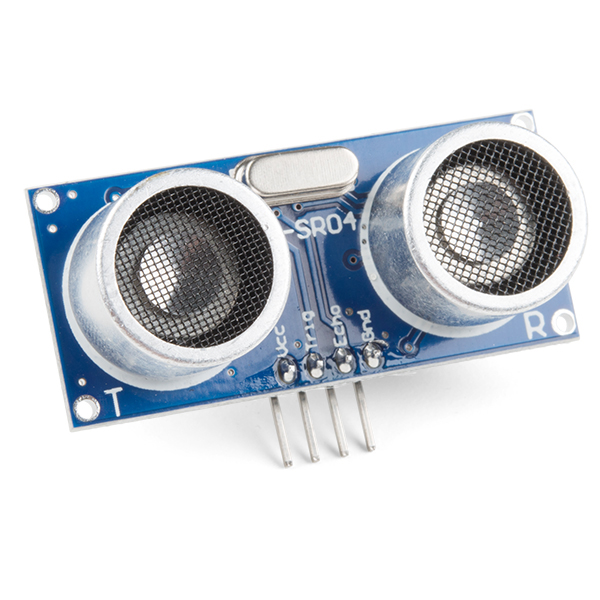

- Ultrasonic Distance Sensor

- Rotary Knob

‘Some players have spare bandwidth, some do not’ (Cook, P.R.). I had to consider this carefully when planning my system. On the saxophone, the right hand is significantly more available than the left hand while playing. One can produce notes with only the left hand, but the opposite is not very common in traditional saxophone playing.

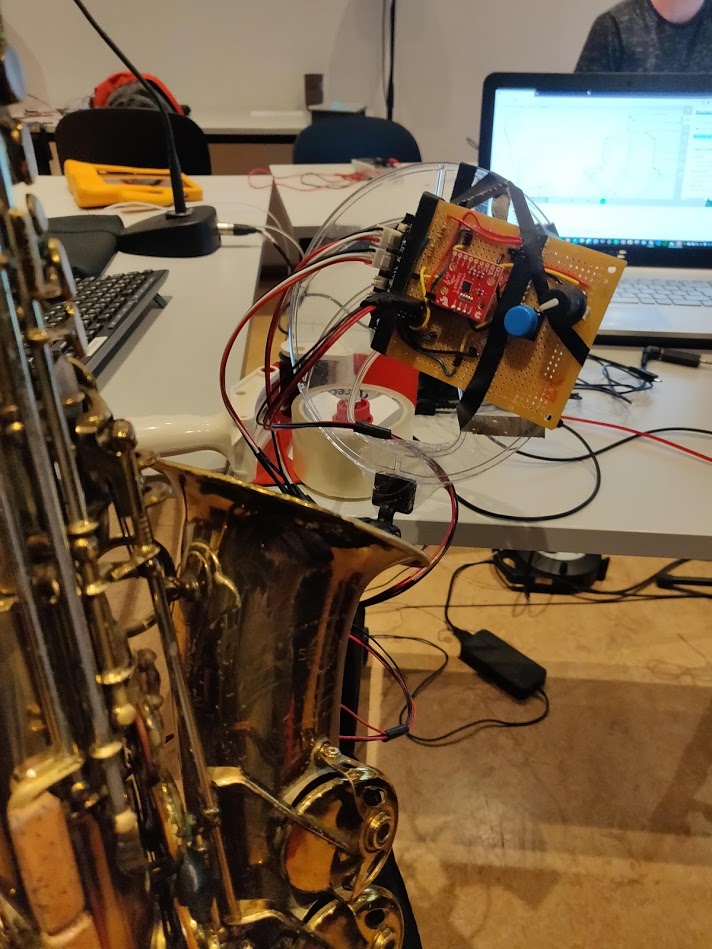

The system uses the player's right hand together with gestural body movements. The 9DoF sensor tracks the player's position, essentially measuring posture on three axes. When the saxophone is moved away from the body, the ultrasonic distance sensor measures the distance between the two. During troubleshooting I realized that clothes do not reflect ultrasound very well, so I am improvising by wearing a Tupperware box lid.

For sound generation I use the saxophone's acoustic sound, picked up by a Korg contact mic connected to the minijack mic input on the Bela, and a Pd patch containing four sine oscillators that produce a predetermined chord. The chord starts and stops playing with the push of a button.

The standalone Bela unit is mounted on a saxophone sound deflector/reflector (made by JazzLab) attached to the saxophone's bell so that it faces the player and is visible to both the player and the audience. The rotary knob is located in the top right corner of the unit, allowing easy access with the right hand. The push button sits to the left of the rotary knob. The 9DoF sensor is located on the unit as well.

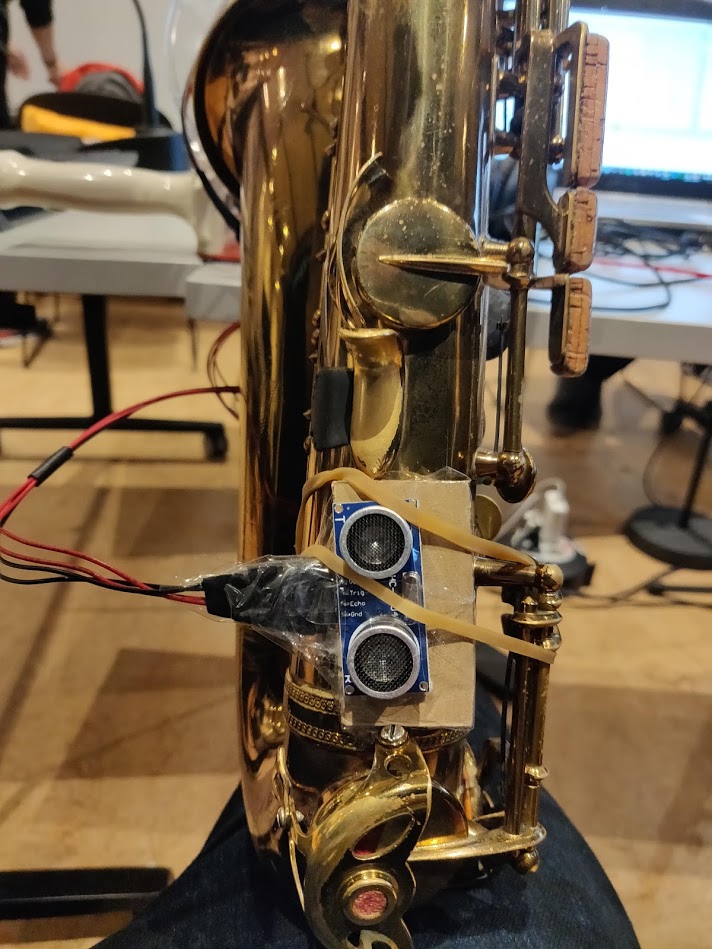

The ultrasonic distance sensor is mounted on the saxophone's body, under the right thumb. Placing the sensor there allows for the maximum distance between the saxophone and the player, which gives a longer movement and a wider range of input readings from the sensor.

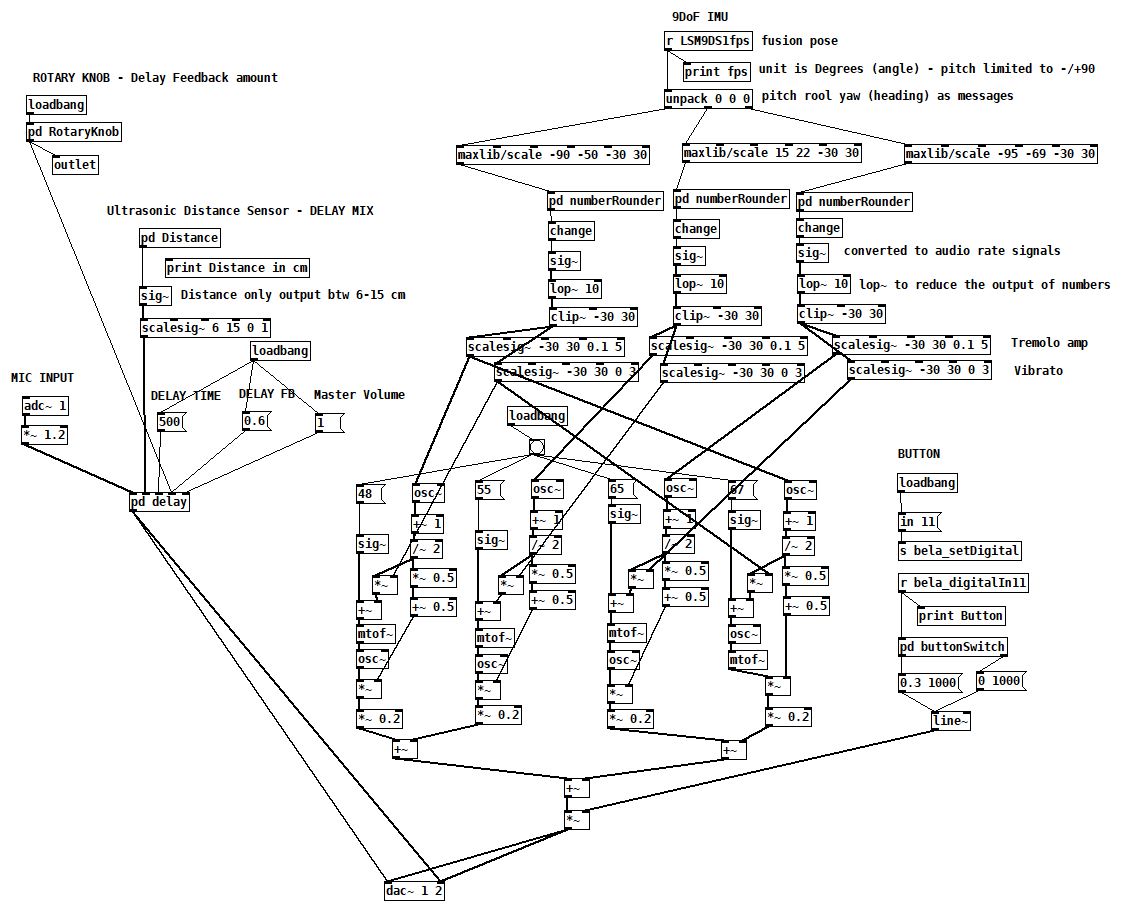

Pure Data Patch

The software is a Pure Data patch that is uploaded to the Bela board.

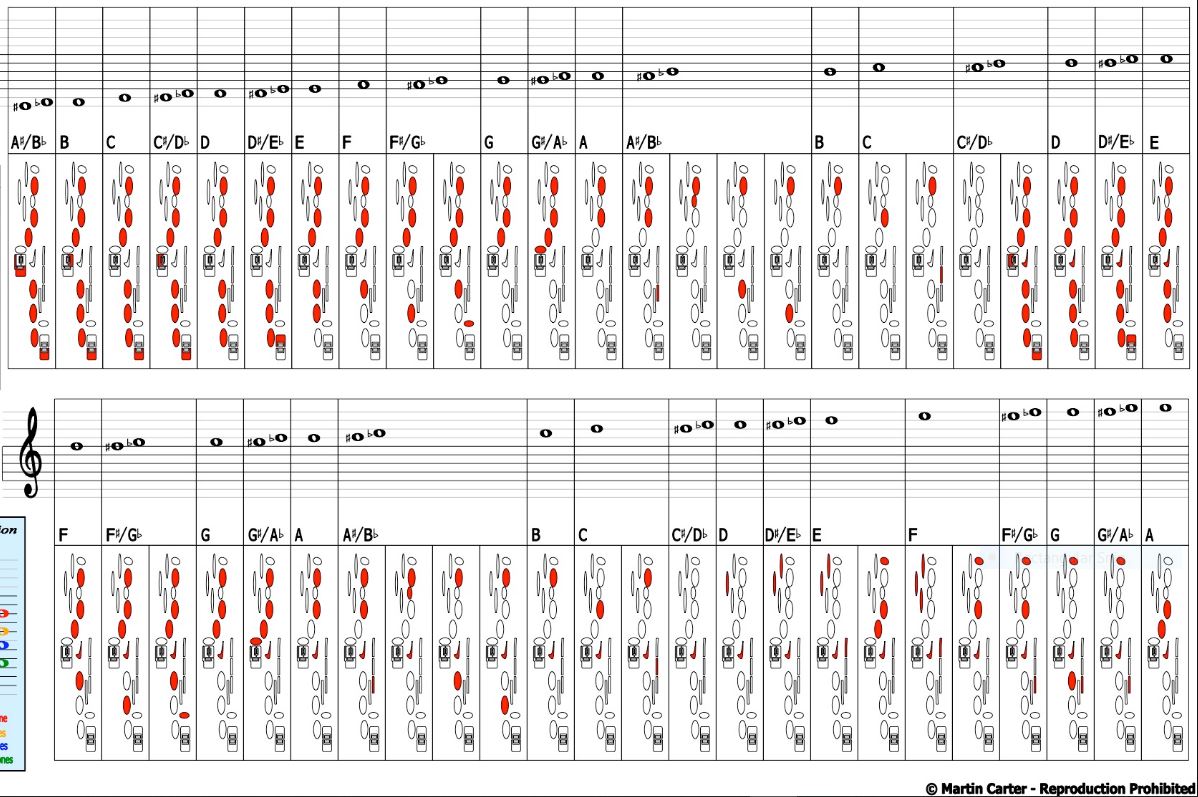

The mic input is connected to a delay line. The delay feedback amount is mapped to the rotary knob. The ultrasonic distance sensor, which is scaled to accept only readings between 6 and 15 centimeters (the estimated minimum and maximum distance from the sax), is mapped to control the delay mix. When the sensor is close to the player's body, the amount of delay in the mix is minimal to none (0). As the saxophone is pushed away from the body, the delay in the mix rises to its maximum (1) (one-to-one mapping). As previously mentioned, with the push of a button, four sine oscillators play a chord. The chord is faded in and out over one second to make it easy on the ear. Some maneuvering had to be done to cancel the initial bang when starting the system. The fusion pose readings from the 9DoF sensor are mapped to control both the amplitude of each oscillator (tremolo) and the pitch modulation (vibrato) (one-to-many mapping). Additional tuning within the scaling objects still needs to be done to achieve better results.
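The mappings described above can be sketched outside of Pd as a few small functions. This is only an illustrative Python sketch, not the actual patch: the function names, the choice to clamp out-of-range distance readings rather than discard them, the assumption that the pose reading has already been normalized to 0..1, and the depth limits (`max_tremolo_depth`, `max_vibrato_cents`) are all assumptions made for the example.

```python
def scale_clamped(value, in_min, in_max, out_min=0.0, out_max=1.0):
    """Linearly map value from [in_min, in_max] to [out_min, out_max].

    Out-of-range input is clamped to the nearest bound (an assumption;
    the patch may handle such readings differently).
    """
    value = max(in_min, min(in_max, value))
    position = (value - in_min) / (in_max - in_min)
    return out_min + position * (out_max - out_min)


def delay_mix(distance_cm):
    """One-to-one mapping: 6 cm -> no delay (0), 15 cm -> full delay (1)."""
    return scale_clamped(distance_cm, 6.0, 15.0)


def pose_to_modulation(pose, max_tremolo_depth=0.5, max_vibrato_cents=30.0):
    """One-to-many mapping: a single normalized pose value (0..1)
    drives both tremolo depth and vibrato depth.

    The two maximum depths are hypothetical placeholder values.
    """
    pose = max(0.0, min(1.0, pose))
    return {
        "tremolo_depth": pose * max_tremolo_depth,
        "vibrato_cents": pose * max_vibrato_cents,
    }


def fade_gain(elapsed_s, fade_s=1.0):
    """Linear fade-in gain that reaches 1.0 after fade_s seconds."""
    return max(0.0, min(1.0, elapsed_s / fade_s))


if __name__ == "__main__":
    for cm in (3.0, 6.0, 10.5, 15.0, 20.0):
        print(cm, "cm ->", delay_mix(cm))
    print(pose_to_modulation(0.5))
    print(fade_gain(0.25))
```

Keeping the scaling in one helper makes the remaining tuning work mostly a matter of adjusting the input and output ranges, which is roughly what the scaling objects in the patch are there for.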

Evaluation

On a more abstract level, the attributes that Hunt and Kirk suggest as characteristic of real-time multiparametric control systems are mostly met by my interactive music system (IMS):

- There is no fixed ordering to the human–computer dialogue (if you discount my push button).

- There is no single permitted set of options (e.g., choices from a menu) but rather a series of continuous controls.

- There is an instant response to the user’s movements.

- The control mechanism is a physical and multiparametric device which must be learned by the user until the actions become automatic.

- Further practice develops increased control intimacy and, thus, competence of operation.

- The human operator, once familiar with the system, is free to perform other cognitive activities while operating the system.

Evaluating this prototype is difficult at such an early stage, but from the viewpoint of the performer, myself, the system does allow for further exploration in terms of interaction. At a moment when my body posture fulfills my sonic desire, I can freeze my movement and explore more subtle, minor movements. The downside is that I am then unable to control the delay amount, which is mapped to the distance sensor. In terms of learnability, the system is fairly easy to grasp, understand and play with, but controlling it to its fullest will require more calibration and scaling.

In terms of expressivity, the system is not yet fine-tuned to produce the exact frequency and amplitude modulation required, so expressivity is limited. But an expressive instrument usually means ‘an instrument that affords expression’, meaning ‘an instrument that enables the player to be expressive’ (Jordà Puig, S.), and personally I feel free to be expressive with the HySax. As Notto Thelle said during our evaluation session at the workshop yesterday, ‘There is much potential to be expressive with this system in combination with a saxophone’. I wanted to create a background pad layer that vibrates together with the saxophone once I find the right posture, but at the moment it is too unpredictable. The single-chord pad is also quite limiting, and more variation in tonality, voicings, chord type and sound texture is needed to give this prototype of the HySax real value.