Chance Operations, Rudimentary Pure Data (PD), and a Bunch of Spinning in Circles

This is Sweaty Music

In recent weeks the MCT 2020 intake has been exploring physical computing ideas and tools. As a concluding activity, each of us was asked to develop a composition or tool using some of the materials we’d learned about. While in more normal times that might mean hands-on manipulation of specialized equipment, the COVID era forced some real adjustments. Individual projects instead became an exercise in determining what could be done with a phone, iPad, or whatever else each student might have at home. Necessity is the mother of invention, of course.

For me, it was clear that this assignment was a chance to compose something quirky and outside of my traditional comfort zone in acoustic art music. While electronics have never explicitly been my musical enemy, neither have they been an ally.

The Composition:

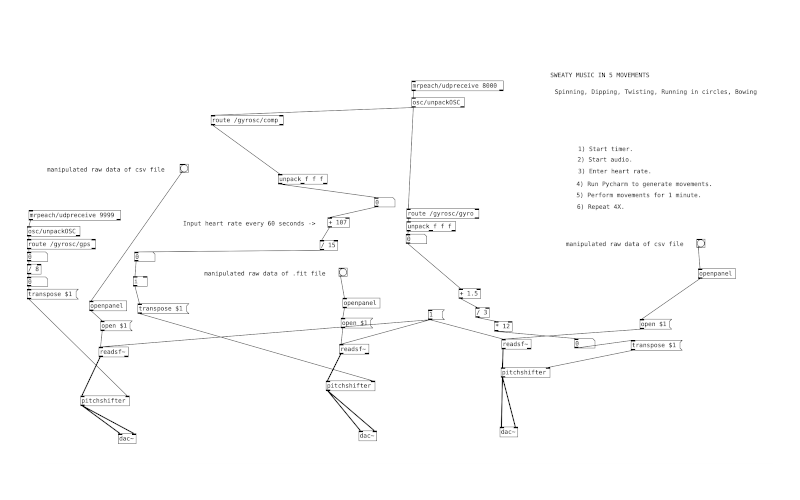

I decided to produce a five-minute chance composition structured around pitch shifting in three musical elements (or voices) created via manipulation of non-musical data. Less technically, I wanted to build the composition solely around materials and gestures coming from my own physical motion (because I’ll take any excuse I can get to run in circles). The source data came from a Garmin running watch and consisted of a .fit file and a .csv file generated on a run in Bymarka, which I subsequently stretched and altered for aural aesthetic purposes. The pitch variations in the voices were then controlled by different types of GyrOSC motion data generated by the movement of my phone. Since the phone was worn in a tight vest pocket, the pitch-controlling motions were essentially the motions of my torso. One voice of the texture was functionally static, with its pitch level assigned by GPS latitude data from an iPad, while the other two ‘melodic’ voices were modified by compass data and gyroscopic data. The compass voice was additionally modified by heart rate data, entered throughout the performance from the same running watch that produced the original files. If perceived as two voices engaged in counterpoint over a drone accompaniment, the piece makes a weird sort of sense as stochastic polyphony. At least that’s what I told myself…
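For readers who think better in code, here is a rough Python sketch of the kind of mapping described above for the compass voice. It is not the actual PD patch: the function names, the ±12 semitone range, and the heart-rate range of 60 to 180 bpm are all assumptions I have made up for illustration.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi].

    The input is clamped to its range first so stray sensor readings
    cannot push the result outside the output range.
    """
    value = max(in_lo, min(in_hi, value))
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def compass_voice_shift(heading_deg, heart_rate_bpm):
    """Return a pitch shift in semitones for the compass voice.

    Assumed mapping (hypothetical, not taken from the patch):
    - compass heading 0..360 degrees maps to -12..+12 semitones
    - heart rate 60..180 bpm scales the depth of the shift by 0.5..1.5
    """
    semitones = scale(heading_deg, 0.0, 360.0, -12.0, 12.0)
    depth = scale(heart_rate_bpm, 60.0, 180.0, 0.5, 1.5)
    return semitones * depth


if __name__ == "__main__":
    # Facing due south with a moderately elevated heart rate.
    print(compass_voice_shift(180.0, 120.0))
```

The same `scale` helper could stand in for the latitude-to-drone mapping or the gyroscope voice; only the input and output ranges would change.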

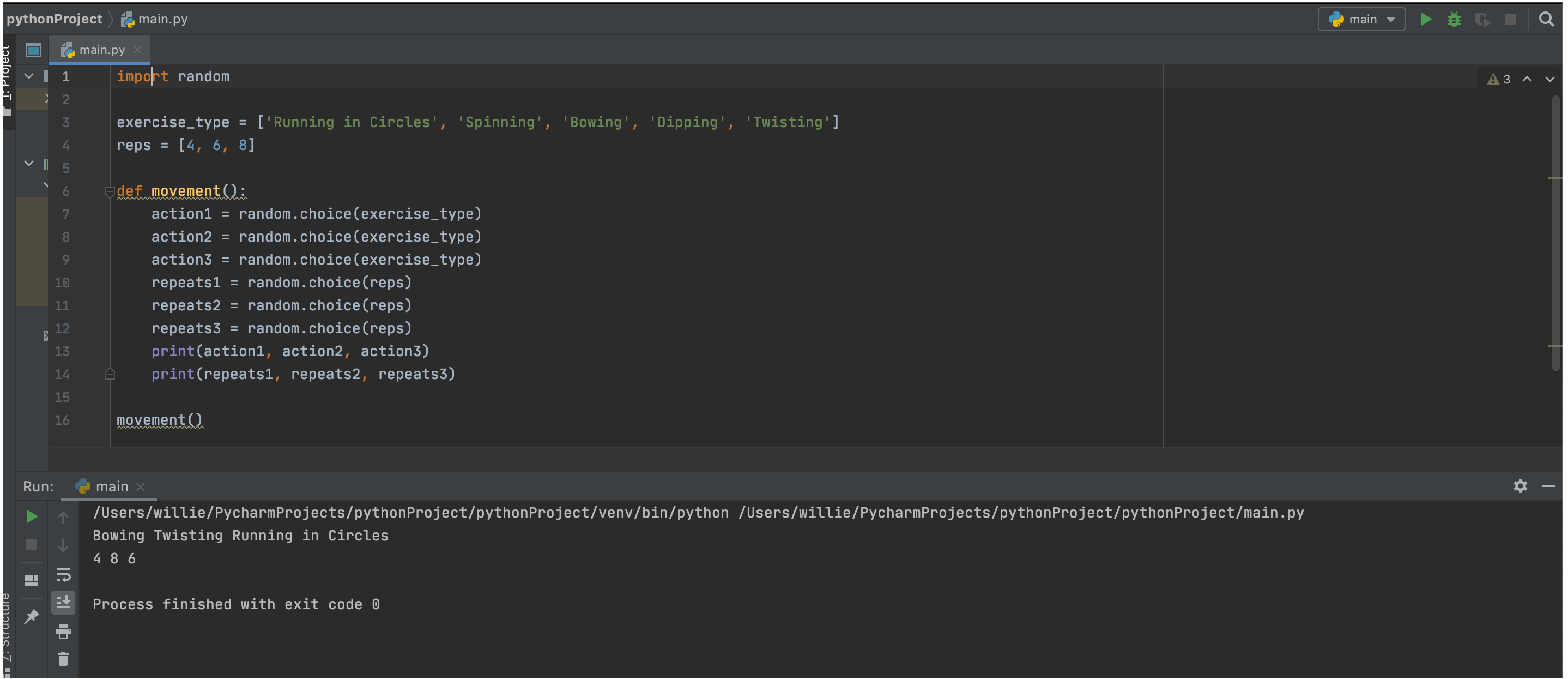

For the selection of movements (essentially the score in this case), I chose five bodily movements and three possible iteration counts that would likely yield compelling sounds, and then wrote a simple program to select randomly among them. A more PD-capable human would likely have been able to plant this code in the Pure Data ‘score’, but for my purposes having it in Python was acceptable.
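A minimal sketch of what such a selection program might look like follows. This is a reconstruction, not my original script: the movement names and iteration counts are hypothetical placeholders, and `pick_motions` is a name invented for this example.

```python
import random

# Hypothetical placeholders: five movements and three iteration counts.
# The real lists live in the 'score'; these only illustrate the shape.
MOVEMENTS = [
    "run in a circle",
    "spin in place",
    "torso twist",
    "side bend",
    "jump",
]
ITERATIONS = [2, 4, 8]


def pick_motions(n_picks=1, rng=None):
    """Return n_picks (movement, iterations) pairs chosen uniformly at random.

    Passing a seeded random.Random instance as rng makes the result
    repeatable, which is handy for rehearsal; leave it as None in performance.
    """
    rng = rng or random.Random()
    return [(rng.choice(MOVEMENTS), rng.choice(ITERATIONS)) for _ in range(n_picks)]


if __name__ == "__main__":
    # One call per minute of the piece.
    for movement, count in pick_motions(n_picks=3):
        print(f"{movement} x {count}")
```

Each movement and count is drawn independently, so repeats are possible. That suits a chance piece, though drawing without replacement via `random.sample` would be an easy alternative.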

To perform the piece (see the ‘score’ image above for further context), the performer (me!) starts the audio files, enters his or her heart rate in the relevant PD object, and runs the Python program to randomly determine the first minute of motions. This sequence of heart rate, Python program, and enthusiastic movements is then repeated four more times or until the audio completes (human motions may lead to inexact 1-minute intervals).

The Performance:

Sound ridiculous? Yes, it does! However, as an exercise in process/chance-driven music, I actually felt that the composition could succeed if performed in the proper way (maybe not in a dimly lit living room). Enough elements are performer-specific (location, relative fitness, body shape and motion) that there would certainly be a level of audible, varied personality in further performances by different individuals.

Enjoy this footage of a 1-minute excerpt of the piece. The sounds are fairly compelling, and how often do you get to watch a “composer” run in circles in the name of art?