Flexi-tone


Expression pedals are a useful tool for performers of Live Electronic Music. They let the player control audio effect parameters expressively whilst continuing to play their instrument.

Flexi-tone is an intelligent expression pedal. It can capture complex foot gestures in three dimensions and map them to multiple audio effect parameters using machine learning. The system runs a real-time machine learning model that performs a regression task, creating what is known as a generative mapping between gesture and sound. The model is trained on a dataset created by the user, which makes the system adapt to that user. Flexi-tone is designed to let the user control multiple audio effect parameters as a highly expressive part of their performance.
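The page does not say which regression model Flexi-tone uses, so the following is only a minimal sketch of the idea: it swaps in k-nearest-neighbour regression with inverse-distance weighting, one simple way to learn a continuous mapping from a handful of user-recorded examples. The class name `KNNRegressor`, the (roll, pitch, yaw) input and the three normalised effect parameters are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# One training example: a foot pose (roll, pitch, yaw in degrees) paired with
# the effect parameter values (normalised 0..1) wanted at that pose.
# Hypothetical data layout, not taken from Flexi-tone itself.
Example = Tuple[Sequence[float], Sequence[float]]


class KNNRegressor:
    """Inverse-distance-weighted k-nearest-neighbour regression (a stand-in)."""

    def __init__(self, k: int = 3) -> None:
        self.k = k
        self.examples: List[Example] = []

    def train(self, examples: List[Example]) -> None:
        # k-NN "training" just stores the user's recorded examples.
        self.examples = list(examples)

    def predict(self, pose: Sequence[float]) -> List[float]:
        if not self.examples:
            raise RuntimeError("model has not been trained")
        # Keep the k stored examples closest (Euclidean) to the incoming pose.
        nearest = sorted(self.examples, key=lambda ex: math.dist(pose, ex[0]))[: self.k]
        weights = []
        for inputs, outputs in nearest:
            d = math.dist(pose, inputs)
            if d == 0.0:
                # Exact match with a training pose: reproduce its outputs.
                return list(outputs)
            weights.append(1.0 / d)
        total = sum(weights)
        n_outputs = len(nearest[0][1])
        # Each output parameter is a weighted average over the neighbours.
        return [
            sum(w * outputs[i] for w, (_, outputs) in zip(weights, nearest)) / total
            for i in range(n_outputs)
        ]


if __name__ == "__main__":
    # Hypothetical user dataset; outputs = (delay mix, filter cutoff, drive).
    dataset = [
        ((0.0, 0.0, 0.0), (0.0, 0.2, 0.0)),    # foot at rest
        ((0.0, 20.0, 0.0), (0.3, 0.9, 0.1)),   # toe pressed down
        ((15.0, 0.0, 0.0), (0.8, 0.5, 0.0)),   # foot rolled sideways
        ((0.0, 0.0, 25.0), (0.1, 0.4, 0.9)),   # foot twisted
    ]
    model = KNNRegressor(k=2)
    model.train(dataset)
    print(model.predict((0.0, 10.0, 0.0)))
```

Because each output is a weighted average of the user's own examples, a pose halfway between two recorded gestures blends their effect settings, which is the continuous, many-parameter behaviour a regression mapping is meant to provide.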


Physical Interface

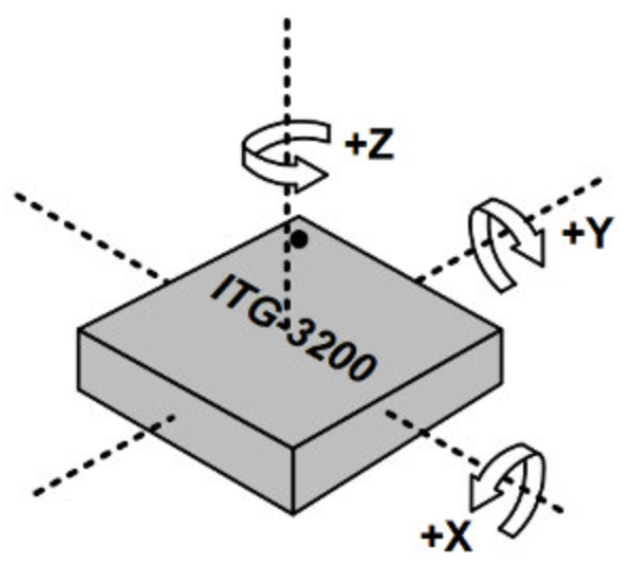

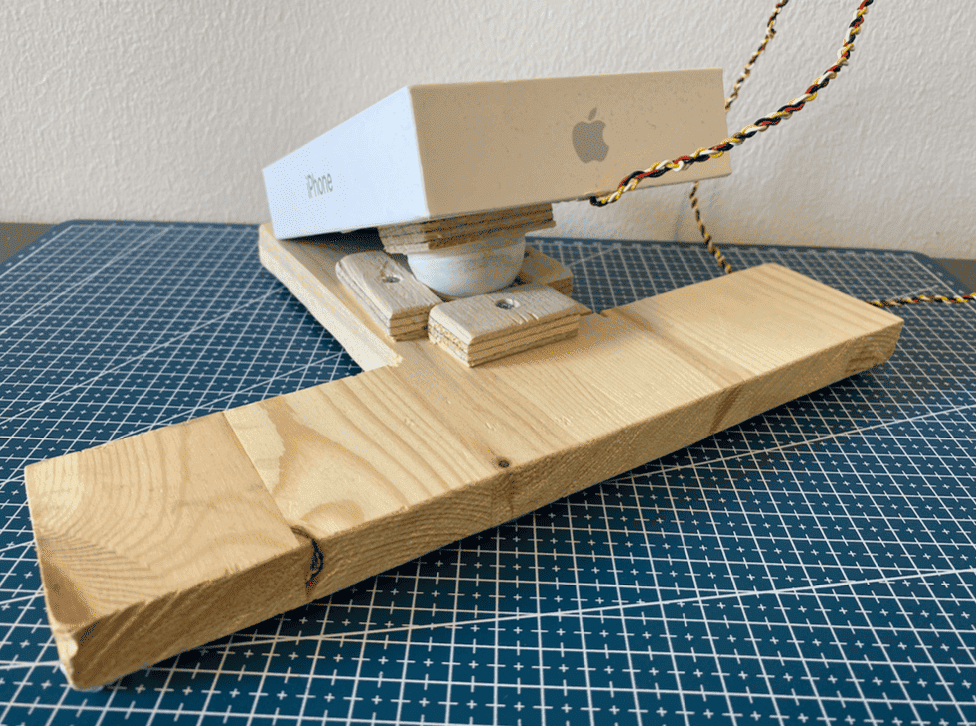

Flexi-tone is inspired by existing expression pedal interfaces in that it features a foot-pad and a base plate. Where Flexi-tone differs is in its ball-and-socket joint, fashioned by cutting a ping-pong ball in half, which enables rotational movement on three axes, like a gyroscope, as illustrated in the image below.

Technical Stuff

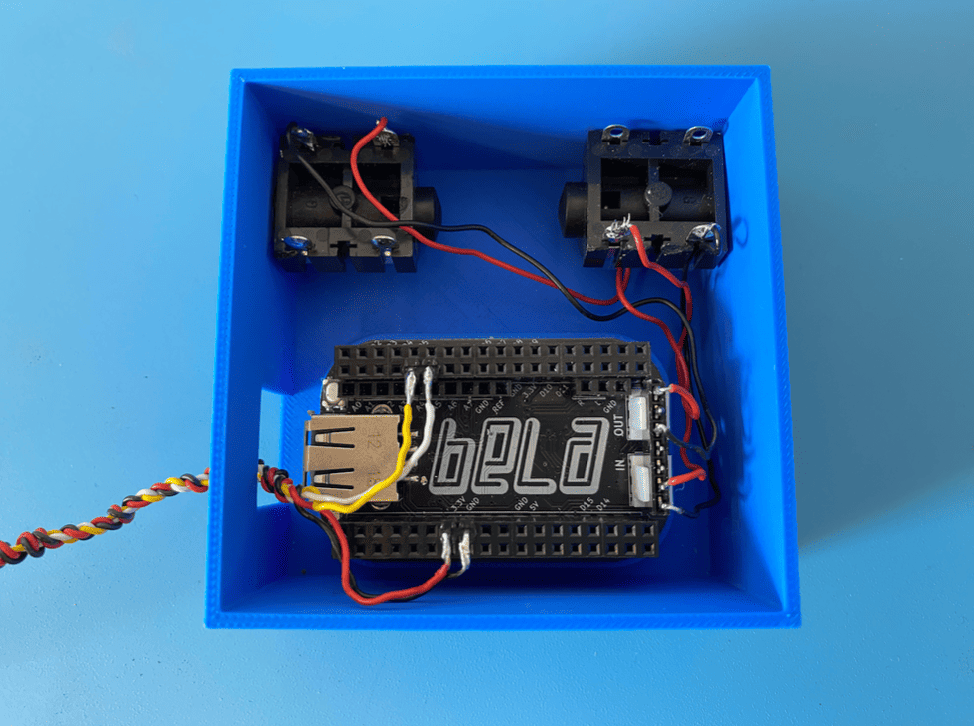

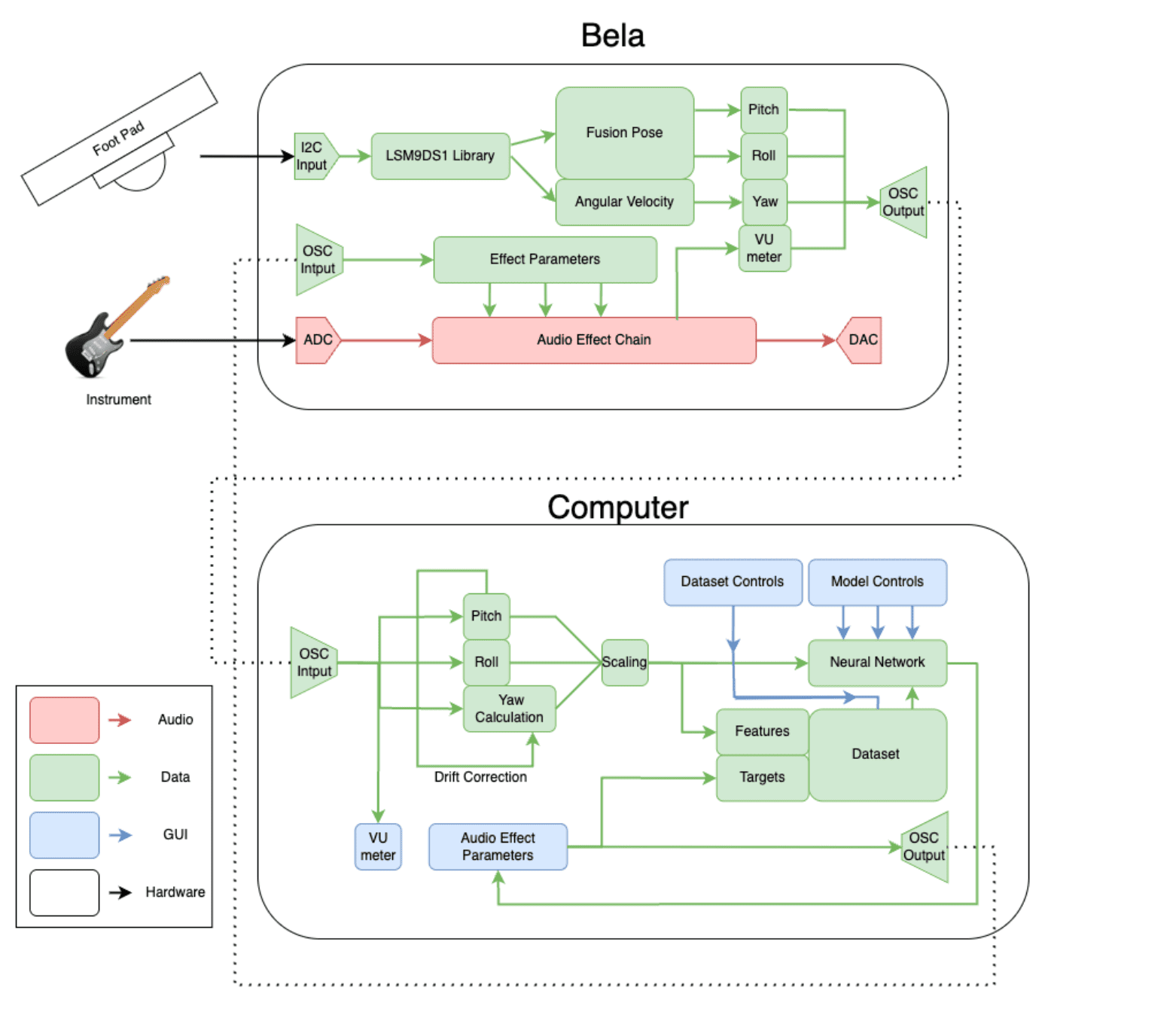

Foot gestures are captured using an Inertial Measurement Unit (IMU). Flexi-tone uses an LSM9DS1, which combines an accelerometer, a gyroscope and a magnetometer; its readings are used to produce (amongst other things) a ‘fusion’ pose estimate of orientation. It is called a fusion pose because the algorithm fuses data from these different sensor types to keep the measurement accurate over time. The LSM9DS1 is connected to a Bela mini, a single-board computer that handles the incoming sensor data alongside all audio processing, including the effects. Bela can run programs written in C++, SuperCollider, Csound and, as in Flexi-tone, Pure Data. An enclosure for the Bela mini was 3D-printed, as shown below. The enclosure also houses 1/4 inch jack sockets connected to the Bela's audio I/O, so instruments such as an electric guitar can be plugged directly into the device.
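The page does not say which fusion algorithm computes the pose. To illustrate the principle, here is a minimal sketch of a complementary filter, a simple, well-known fusion technique swapped in for illustration (practical IMU libraries typically use more elaborate filters). It blends integrated gyroscope rates, which are smooth but drift, with the tilt implied by gravity on the accelerometer, which is noisy but drift-free. The class name, `alpha` value and axis conventions are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class ComplementaryFilter:
    """Fuse gyroscope and accelerometer readings into roll/pitch (illustrative).

    alpha close to 1 trusts the gyroscope over short time spans; the remaining
    (1 - alpha) pulls the estimate towards the accelerometer's tilt.
    Axis and sign conventions here are assumptions, not Flexi-tone's.
    """

    alpha: float = 0.98
    roll: float = 0.0   # degrees
    pitch: float = 0.0  # degrees

    def update(self, gyro_dps, accel_g, dt: float):
        gx, gy, _gz = gyro_dps  # angular rates in degrees per second
        ax, ay, az = accel_g    # acceleration in g

        # Tilt implied by the direction of gravity (most reliable when still).
        accel_roll = math.degrees(math.atan2(ay, az))
        accel_pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))

        # Integrate gyro rates (small-angle simplification: body rates are
        # treated as roll/pitch rates), then blend with the accelerometer tilt.
        self.roll = self.alpha * (self.roll + gx * dt) + (1 - self.alpha) * accel_roll
        self.pitch = self.alpha * (self.pitch + gy * dt) + (1 - self.alpha) * accel_pitch
        return self.roll, self.pitch


if __name__ == "__main__":
    f = ComplementaryFilter()
    s, c = math.sin(math.radians(30)), math.cos(math.radians(30))
    # Foot held still at a 30 degree roll, sampled at 100 Hz for 5 seconds.
    for _ in range(500):
        roll, pitch = f.update((0.0, 0.0, 0.0), (0.0, s, c), 0.01)
    print(round(roll, 2), round(pitch, 2))
```

With the foot held still, repeated updates converge on the accelerometer's tilt; a larger `alpha` gives a smoother estimate that is slower to correct drift. Yaw is left out because gravity carries no heading information, so correcting yaw drift needs the magnetometer.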

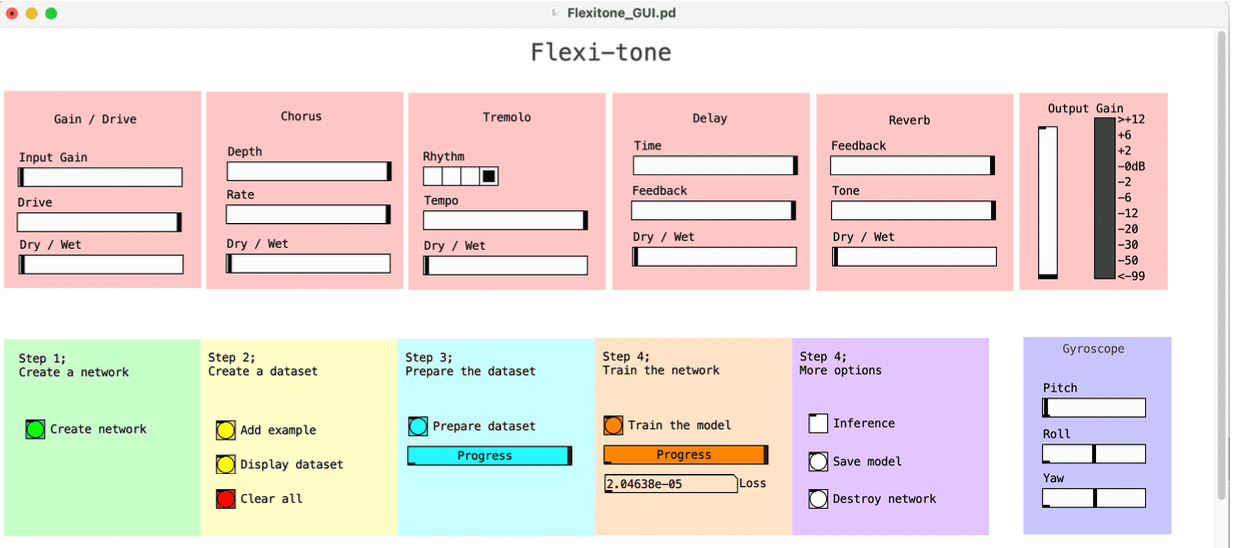

The Pure Data patch running on the Bela communicates with a laptop via Open Sound Control (OSC) messages over a wired USB connection. On the laptop, a second Pure Data patch handles all the machine learning and also acts as a graphical user interface (GUI) for the device. A technical diagram of the full system and the computer-side GUI are shown below.
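In Flexi-tone the Pure Data patches build and parse the OSC packets themselves. To show what actually travels over the link, here is a minimal Python sketch of the OSC 1.0 byte layout for a message whose arguments are all 32-bit floats, such as three orientation angles. The address `/flexitone/pose` and the function names are hypothetical, and only the `f` (float32) type tag is handled.

```python
import struct
from typing import List, Tuple


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address: str, values: List[float]) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def decode_osc_floats(packet: bytes) -> Tuple[str, List[float]]:
    """Inverse of encode_osc_floats (float arguments only)."""

    def read_string(offset: int) -> Tuple[str, int]:
        end = packet.index(b"\x00", offset)
        text = packet[offset:end].decode("ascii")
        # Skip the terminator plus padding to the next 4-byte boundary.
        return text, (end + 4) & ~3

    address, offset = read_string(0)
    tags, offset = read_string(offset)
    values = []
    for tag in tags[1:]:  # skip the leading ','
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        (value,) = struct.unpack_from(">f", packet, offset)
        values.append(value)
        offset += 4
    return address, values


if __name__ == "__main__":
    packet = encode_osc_floats("/flexitone/pose", [0.5, -12.25, 90.0])
    print(len(packet), decode_osc_floats(packet))
```

Every field is padded to a 4-byte boundary, which is why the 15-character address occupies 16 bytes and the `,fff` type tag string occupies 8.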

A user study was carried out to assess the functionality of Flexi-tone and its ability to foster high expressivity in electric guitar performance. You can read more about the study in my master’s thesis, available here. For now, enjoy some more demonstration videos!