NTNU Oceans

Problem

The aim of this project is to conceptualize and implement an installation in which visitors can interactively “see” and “hear” the status of oceans and seas worldwide.

Proposal

Through conversations with Professor Murat Van Ardelan, we have learned about the increasing dangers posed by the mercury reserves in the permafrost of Siberia and parts of Canada. Tons of mercury could be released if global temperatures rise and the ice in the ground melts. If this mercury ends up in our food chain, the consequences could be disastrous for animal life and human beings. Articles with extensive information on the topic: [1], [2], [3].

Our proposed solution is an interactive installation that aims to bring attention to the topic of mercury pollution through sonification and visualisation. Visitors can control different parameters in a dataset by moving physical objects and observe how these changes affect the auditory and visual output.

Inspiration

The use of light in our installation is inspired by the installation artists Olafur Eliasson and James Turrell, who use strong lighting with vibrant colors to transform the essence of a space.

Turrell’s installation at Ekeberg, Oslo.

The installation is meant to evoke great feelings without saying anything explicitly itself, letting the interpretation and emotions come from within each participant, thereby making it more genuine and relatable.

Deployment of the installation

The installation will be a structure with white fabric on all sides and lamps and speakers on the outside, enabling us to change the color of the walls and project sound through the walls, without revealing the equipment outside.

The frame

We have decided to use 2x2” wooden beams, but stage truss or aluminum pipes would be good options if you have the budget.

The fabric

After experimenting with different fabrics, we landed on a fairly thick, synthetic voile. This fabric is cheap, available in large quantities, and sufficiently transparent to both sound and light: it lets the sound from the loudspeakers come through and diffuses the light from the lamps across the walls.

The loudspeakers

Our music is made to be played back on a multi-speaker system of at least four speakers, but more could be added for greater effect. The most basic setup would consist of four speakers and one subwoofer placed in the corner, outside the box.

Small Genelec studio monitors seem to have become the industry standard due to their compact size, high performance and the convenience of mounting on a regular mic stand, but buying them in large quantities can be prohibitively expensive.

The computer

A key point is setting the computer up with a good boot script that automatically starts the correct software with the correct settings. It is also important to install a remote desktop solution for support when we cannot be there in person.

The lights

As long as they fulfill these requirements, most modern LED lights with DMX512 or Art-Net control should work fine:

- Must have DMX512 control with at least RGB mixing (UV would be great for added effect).

- Must be able to run for extended periods of time without failure.

- Must either have decent output with a wide beam angle or be cheap enough to buy in quantity.
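To make the RGB requirement concrete, here is a minimal sketch of how color values could be laid out in a single DMX512 universe (512 channels of 8-bit values). It assumes each fixture occupies three consecutive channels in red, green, blue order, which is a common but not universal layout; the function name and the footprint are our own illustration, not part of any lighting software.

```python
def build_universe(colors, start_address=1, footprint=3):
    """Pack (r, g, b) tuples into a 512-channel DMX universe.

    Assumption: each fixture uses `footprint` consecutive channels and
    the first three of them are red, green and blue.
    """
    universe = bytearray(512)  # all channels start at 0 (off)
    for i, (r, g, b) in enumerate(colors):
        base = start_address - 1 + i * footprint  # DMX addresses are 1-based
        if base + 3 > 512:
            raise ValueError("fixture does not fit in one universe")
        universe[base:base + 3] = bytes((r, g, b))
    return bytes(universe)


# Two fixtures: the first pure red, the second pure green.
frame = build_universe([(255, 0, 0), (0, 255, 0)])
print(frame[:6].hex(" "))
```

Fixtures with extra channels (dimmer, UV, strobe) would need a larger footprint and a different channel order, so check the fixture manual before relying on this layout.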

The projector

If you wish to deploy this installation with a graphic display, a projector is needed. From our testing and experience, a short-throw projector capable of outputting at least 3000 ANSI lumens is preferred. A high-end LED projector would be optimal due to its low heat, low noise and long life.

Lights and color theory

We initially intended to use the built-in DMX control in either Ableton Live or QLab, but deemed them too restrictive for making the experience exciting and beautiful. By far the most common platform in the professional industry is the MA Lighting “grandMA” platform, which has a free alternative called MA onPC. Together with a USB-to-DMX node, this would be sufficient.

Creating movement in the lights

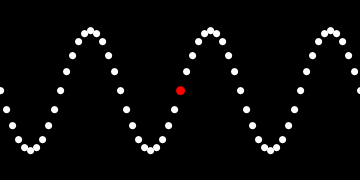

We wanted to create a dynamic experience. By controlling each light individually, we are able to create both movement and color palettes. By selecting all the lights and applying a sinusoidal dimming chase, the lights will dim up and down as if they were points on a sine wave.

Imagine each fixture being a point on the sine wave.
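The idea of fixtures as points on a sine wave can be sketched in a few lines. This is only an illustration of the principle, not how the lighting software implements its chase; the function name, the period and the number of waves across the rig are assumptions we introduce here.

```python
import math


def sine_chase_levels(num_fixtures, t, period_s=4.0, waves=1.0):
    """Return one dimmer level (0-255) per fixture at time t (seconds).

    Each fixture is offset in phase, so the brightness peak appears to
    travel along the row of lights.
    """
    levels = []
    for i in range(num_fixtures):
        # Position of this fixture along the wave, shifted over time.
        phase = 2 * math.pi * (t / period_s - waves * i / num_fixtures)
        # Map sin() from [-1, 1] to [0, 1], then to the 8-bit range.
        brightness = 0.5 * (1.0 + math.sin(phase))
        levels.append(round(brightness * 255))
    return levels


# Sample the chase once per second for four fixtures.
for second in range(4):
    print(second, sine_chase_levels(4, float(second)))
```

Increasing `waves` puts more than one crest across the fixtures at once, and shortening `period_s` makes the movement faster.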

The different states of the lighting installation are triggered by MIDI messages from the Max MSP patch. Each message fires a cue in the lighting software, and each cue contains the color palettes and chases for that state.
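As a rough sketch of what such a trigger looks like on the wire, the snippet below builds a raw MIDI note-on message (status byte `0x90` plus channel, followed by note number and velocity). The state names and the note numbers assigned to them are hypothetical; the actual mapping depends on how the cues are configured in the lighting software.

```python
# Hypothetical mapping from installation state to the MIDI note that
# fires the matching cue in the lighting software.
CUE_NOTES = {"calm": 60, "warning": 61, "critical": 62}


def note_on(channel, note, velocity=127):
    """Build a 3-byte MIDI note-on message (channel is 0-15)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def cue_message(state, channel=0):
    """Return the note-on message that triggers the cue for a state."""
    return note_on(channel, CUE_NOTES[state])


print(cue_message("warning").hex(" "))
```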

Implementing color palettes

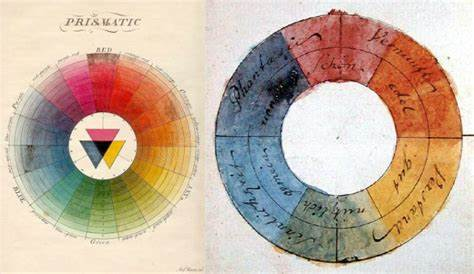

To really take advantage of color in communicating emotions, color palettes generally have a much greater impact than single colors. The emotional connotations from movies and pop art are so ingrained in our culture that almost everyone has a relation to them:

What do these palettes make you feel?

Example one

Example two

Notice that even though they use very similar tones, one is very harmonious and the other is very dissonant. This is accomplished by having the colors intentionally match or not match each other according to the classic color theory developed by Goethe and Newton.

Example one

Nice and happy.

Example two

Not so much.

There are endless examples of this on the internet and you can easily make your own with the color picker tool in almost any image editor (GIMP and Adobe XD are good, free ones).

Color Palettes From Famous Movies Show How Colors Set The Mood Of A Film

These 50+ movie color palettes show how to effectively use color in film - DIY Photography
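One common way to obtain colors that match each other is to pick hues that sit close together on the color wheel (an analogous palette). The sketch below is our own illustration of that idea using Python's standard `colorsys` module; the 30 degree spread is an assumption, and it is not the method used to build the example palettes above.

```python
import colorsys


def analogous_palette(hue_deg, spread_deg=30.0):
    """Return three fully saturated RGB colors around a base hue.

    The hues are base - spread, base and base + spread, measured in
    degrees on the color wheel.
    """
    palette = []
    for offset in (-spread_deg, 0.0, spread_deg):
        h = ((hue_deg + offset) % 360.0) / 360.0  # colorsys expects 0-1
        r, g, b = colorsys.hsv_to_rgb(h, 1.0, 1.0)
        palette.append((round(r * 255), round(g * 255), round(b * 255)))
    return palette


# A palette centered on blue (240 degrees).
print(analogous_palette(240.0))
```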

Sound

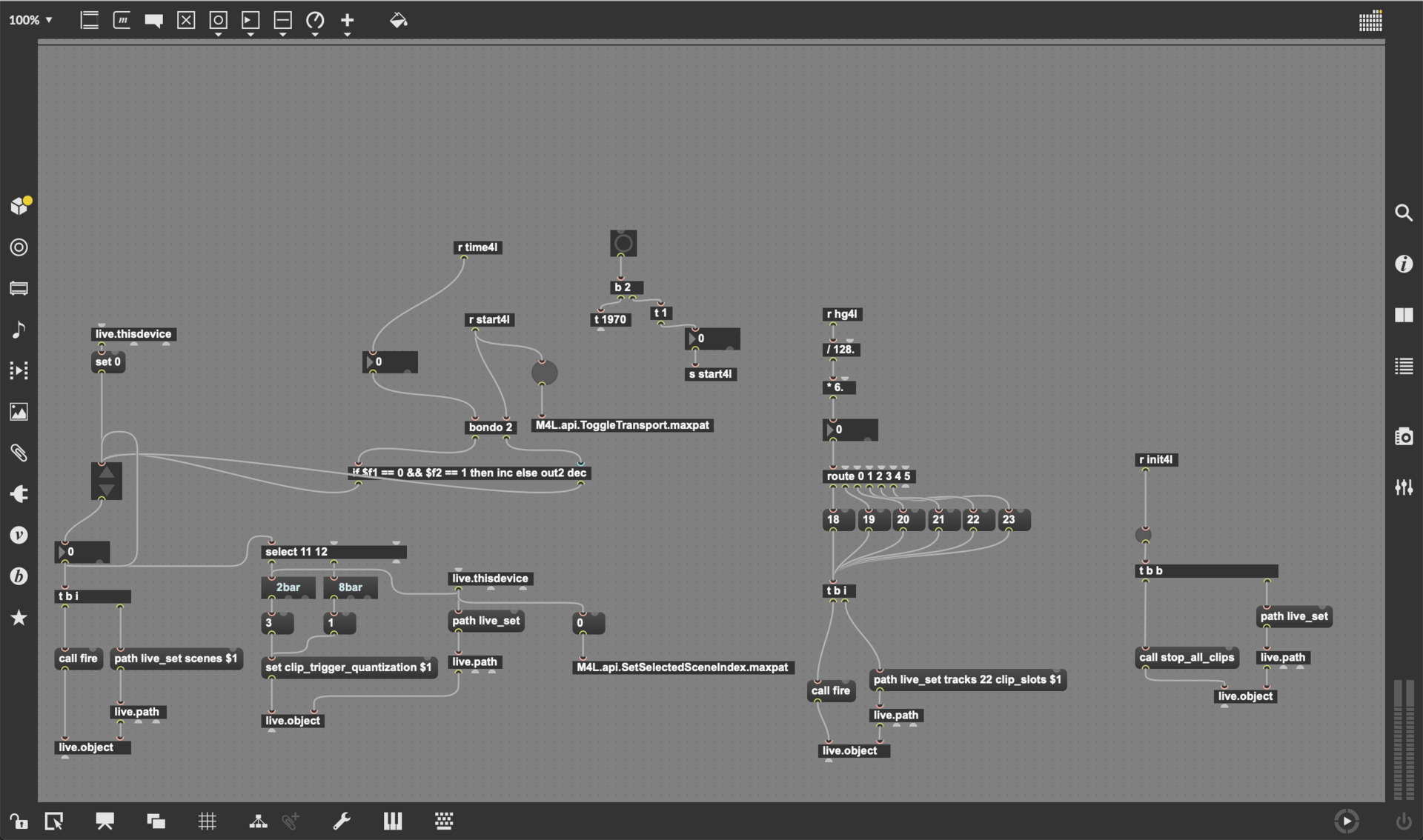

The integration between the Max-patch and Ableton Live is done by accessing Ableton Live’s API. The Live Object Model (LOM) is a road map which illustrates the path to accessing different parameters in Ableton Live. By using the built-in Live-objects in Max for Live these parameters can very easily be accessed and mapped to different aspects of the main patch.

The Ableton project takes advantage of Ableton’s session view. Every scene in the project represents a year in time. The scenes are triggered by interaction with the Max patch. Whenever a year change triggers an event in the Max patch, the scene above or below the current scene is triggered, depending on whether the year slider has been moved forward or back in time. The Max for Live patch also controls the global quantization in Ableton, which determines when the scene changes happen. To make the scene changes happen smoothly, the global quantization is set to two bars for the first 11 scenes and eight bars for the remaining scenes.
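The scene logic described above can be summarized in a small sketch. It is written in Python purely for illustration (the real logic lives in the Max for Live patch), and it assumes that scenes are zero-indexed from the top and that later years sit further down the scene list; both function names are hypothetical.

```python
def next_scene(current, old_year, new_year, num_scenes):
    """Step one scene down when the year increases, one up when it decreases."""
    if new_year > old_year:
        step = 1
    elif new_year < old_year:
        step = -1
    else:
        step = 0
    # Clamp so we never step outside the scene list.
    return max(0, min(num_scenes - 1, current + step))


def quantization_bars(scene_index):
    """Two bars for the first 11 scenes, eight bars for the rest."""
    return 2 if scene_index < 11 else 8


scene = next_scene(10, old_year=2010, new_year=2011, num_scenes=20)
print(scene, quantization_bars(scene))
```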

In the Ableton project, we have created a separate track where all the samples for the sonification are located. These samples get triggered separately from the rest of the project and there is no quantization on these samples, so the playback is instantaneous. This was a deliberate choice to accentuate the interaction between the user and the auditory feedback.

Max for Live

The [OCEANS] Max for Live device is designed as a framework tool, helping make the sometimes complicated process of data-sonification and interaction a lot more straightforward.

Input data-sets are primarily indexed by the time of recording, then sub-indexed by location of observation - collecting the time-indexed data as parent-groups, and distributing nine location-indexed sub-sets to a 3x3 grid of [x, y]-coordinates (see the figure above). Four fixed variables are then monitored by three color-coded objects as they move around within the [x, y]-space.

If the patch registers an object as being on a node-point, the output will accurately reflect the location-indexed data mapped to that node. When moving between the nodes, however, a bi-linear interpolation makes sure the numbers glide fluidly between their fixed value-set states. Moving between the time-indexed parent-groups sets all nine nodes time-traveling in uniform motion from one temporal representation to another (see the figure below).
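The interpolation can be sketched as follows. This is an illustration in Python, not the Max patch itself, and it assumes the nine nodes sit at the coordinates 0, 0.5 and 1 on both axes of a normalized [x, y]-space. A second helper shows a plain linear blend between two time-indexed grids, which is one possible reading of the "uniform motion" between parent-groups.

```python
def bilinear_3x3(grid, x, y):
    """Interpolate a value from a 3x3 grid, with x and y in [0, 1].

    grid[row][col] holds the node values; row follows y, col follows x.
    """
    gx = min(max(x, 0.0), 1.0) * 2  # scale to grid units 0..2
    gy = min(max(y, 0.0), 1.0) * 2
    c0 = min(int(gx), 1)  # left column of the enclosing cell
    r0 = min(int(gy), 1)  # upper row of the enclosing cell
    fx, fy = gx - c0, gy - r0  # position inside the cell, 0..1
    top = grid[r0][c0] * (1 - fx) + grid[r0][c0 + 1] * fx
    bottom = grid[r0 + 1][c0] * (1 - fx) + grid[r0 + 1][c0 + 1] * fx
    return top * (1 - fy) + bottom * fy


def blend_grids(grid_a, grid_b, t):
    """Linearly blend two time-indexed grids, with t in [0, 1]."""
    return [[a * (1 - t) + b * t for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(grid_a, grid_b)]


year_a = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
year_b = [[10, 11, 12], [13, 14, 15], [16, 17, 18]]
halfway = blend_grids(year_a, year_b, 0.5)
print(bilinear_3x3(halfway, 0.25, 0.0))
```

On a node the function returns exactly that node's value; between nodes the output changes continuously, so a moving object never produces a jump in the data.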

Events can be mapped to trigger when specific objects visit specific location-nodes at specific time-indexes. A directly editable cell-matrix, with rows and columns reflecting time, space and object color, makes composing event-sets quick and intuitive.
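Conceptually, the cell-matrix behaves like a lookup table keyed by time-index, node and object color. The sketch below illustrates that idea only; the key layout, the color names and the event names are hypothetical and do not reflect the device's internal storage.

```python
from typing import Optional

# Key: (time index, node number 0-8, object color). Value: event name.
EventKey = tuple[int, int, str]


def find_event(events: dict[EventKey, str], time_index: int,
               node: int, color: str) -> Optional[str]:
    """Return the event mapped to this visit, or None if the cell is empty."""
    return events.get((time_index, node, color))


events = {
    (0, 4, "red"): "sample_a",   # red object on the center node, first time index
    (2, 8, "blue"): "sample_b",  # blue object on the last node, third time index
}
print(find_event(events, 0, 4, "red"))
print(find_event(events, 0, 4, "blue"))
```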

Together with the movement of the object-monitored values, these events can be sent a) internally within Ableton Live, through the global [sends] and [receives] of the Max for Live device (oceansLIVEAPI.amxd), or b) externally to other hardware or software, as MIDI note or control messages.

Implemented in the patch is a TUIO tracking of Reactable-markers as well as a bi-directional communication with the OceanSC TouchOSC layout. With the possibility to import data-files by drag-and-drop - and mapping values to be monitored through direct editing - the [OCEANS] Max for Live device makes a thorough effort to have exploring sonification be as accessible as possible.

Tracking

We ended up using fiducial markers through the reacTIVision framework for tracking objects. We strongly considered using Pozyx, as it is supposedly more robust and accurate. However, COVID-19 made it difficult to access any equipment other than what we had in our homes.

The reacTIVision framework consists of a computer vision engine (a desktop application), and a client application in Max to receive OSC messages from the engine.
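reacTIVision reports marker positions using the TUIO protocol over OSC. As a hedged illustration of what the client has to do, the sketch below decodes the arguments of a TUIO 1.1 `/tuio/2Dobj` "set" message, whose layout is session ID, fiducial ID, x, y and angle, followed by velocity and acceleration values. It assumes the OSC packet has already been decoded into a Python list by some OSC library; the class and function names are our own, and our actual client is the Max patch, not this code.

```python
from typing import NamedTuple, Optional, Sequence


class MarkerState(NamedTuple):
    session_id: int
    fiducial_id: int
    x: float      # normalized 0..1 across the camera image
    y: float      # normalized 0..1 down the camera image
    angle: float  # rotation in radians


def parse_2dobj(args: Sequence) -> Optional[MarkerState]:
    """Decode the arguments of a /tuio/2Dobj message.

    Only "set" messages carry a position; "alive" and "fseq" messages
    are ignored here and yield None.
    """
    if len(args) < 6 or args[0] != "set":
        return None
    _, session_id, fiducial_id, x, y, angle = args[:6]
    return MarkerState(int(session_id), int(fiducial_id),
                       float(x), float(y), float(angle))


message = ["set", 12, 3, 0.5, 0.25, 1.57, 0.0, 0.0, 0.0, 0.0, 0.0]
print(parse_2dobj(message))
```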

The computer vision engine is programmed to recognize a pre-defined set of fiducial markers, each with a unique ID. For our purpose, we selected three of these markers and attached a printed copy of each to a box, as shown in the figure below. These three boxes would in turn be placed in the installation room.

To be able to use the reacTIVision framework in a stable way, we have made a wrapper around it to control the outcome of the tracking in a way that makes more sense for our installation. Two of these extensions are demonstrated visually. The one-to-many control was implemented to support a varying number of visitors in the installation. Once one of the trackers is detected as idle, another one controls its parameter representation. Exactly how this implementation is done can be seen in the source code.
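To give a feel for the one-to-many idea without reproducing the source code, here is a simplified sketch. It assumes a marker counts as idle when it has not moved for a fixed number of seconds and that idle markers' parameters are handed to the active markers in turn; the timeout, the round-robin rule and all names are our assumptions for illustration, and the actual wrapper may work differently.

```python
def assign_parameters(last_moved, now, idle_after_s=10.0):
    """Map each active marker ID to the parameter slots it controls.

    last_moved: dict of marker ID -> timestamp (seconds) of last movement.
    Each marker owns one parameter slot, identified by its own ID. Slots
    of idle markers are handed to active markers in round-robin order.
    """
    ids = sorted(last_moved)
    active = [m for m in ids if now - last_moved[m] < idle_after_s]
    if not active:
        return {}  # nobody is interacting, so nothing is controlled
    assignment = {m: [m] for m in active}
    idle = [m for m in ids if m not in active]
    for k, slot in enumerate(idle):
        assignment[active[k % len(active)]].append(slot)
    return assignment


# Marker 2 has not moved for 21 seconds, so marker 0 takes over its slot.
print(assign_parameters({0: 100.0, 1: 95.0, 2: 80.0}, now=101.0))
```

With a single visitor moving one box, that box ends up controlling all three parameter slots; with three active visitors, each box controls only its own.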

Evaluation of the proposed solution

As a consequence of the ongoing lockdown, the development of this installation has been hampered. The lack of a proper testing environment has resulted in a somewhat theoretical framework for an installation, even though we have tried to make sure that the parts fit together. As an example, we have not been able to test the tracking within the proper installation environment.

We have also yet to sit down with Murat Van Ardelan for a round of data analysis, and to incorporate his analysis of the data in the final aesthetic shaping of our installation. We recognize this as one of the most important steps to complete before deploying the installation. As of now, the data that we use to parametrise our installation is based on a dataset that we have interpreted freely.

References

- [1] Schuster, P. F., et al. (2018). Permafrost stores a globally significant amount of mercury. Geophysical Research Letters, 45(3), 1463-1471.

- [2] Soerensen, A., et al. (2016). A mass budget for mercury and methylmercury in the Arctic Ocean. Global Biogeochemical Cycles, 30(4), 560-575.

- [3] Mu, C., Schuster, P. F., Abbott, B. W., Kang, S., Guo, J., Sun, S., Wu, Q., & Zhang, T. (2020). Permafrost degradation enhances the risk of mercury release on Qinghai-Tibetan Plateau. Science of the Total Environment, 708, 135127.